By Olin Lathrop, Updated April 1999.

The name Virtual Worlds is being used by some in an attempt to be more realistic. Unfortunately this has not yet caught on in a major way. You are still much more likely to hear the term Virtual Reality, so that's what I'll use in this introduction to avoid confusing you with non-standard terms.

While there is no one definition of VR everyone will agree on, there is at least one common thread. VR goes beyond the flat monitor that you simply look at, and tries to immerse you in a three dimensional visual world. The things you see appear to be in the room with you, instead of stuck on a flat area like a monitor screen. As you might imagine, there are a number of techniques for achieving this, each with its own tradeoff between degree of immersion, senses involved beyond sight, computational requirements, physical constraints, cost, and others.

In stereoscopic 3D perception, we use the fact that we have two eyes that are set some distance apart from each other. When we look at a nearby object we measure the difference in angle from each eye to the object. Knowing this angle, the distance between the eyes, and a bit of trigonometry, we compute the distance to the object. Fortunately this is done for us subconsciously by an extremely sophisticated image processor in our brain. We simply perceive the end result as objects at specific locations in the space around us.
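The "bit of trigonometry" can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the object sits straight ahead, midway between the eyes, and the angle is the one between the two lines of sight. The function names and the 65 mm eye separation are my own choices for the example, not measurements from any particular system.

```python
import math

def distance_from_vergence(eye_separation_m, vergence_angle_rad):
    """Distance to an object straight ahead, given the angle between
    the two lines of sight (assumes the object is centered)."""
    # Each eye, the midpoint between the eyes, and the object form a
    # right triangle with half the eye separation as the opposite side.
    return (eye_separation_m / 2.0) / math.tan(vergence_angle_rad / 2.0)

def vergence_for_distance(eye_separation_m, distance_m):
    """Inverse of the above: the angle between the lines of sight
    for a centered object at the given distance."""
    return 2.0 * math.atan((eye_separation_m / 2.0) / distance_m)

if __name__ == "__main__":
    eye_separation = 0.065  # assumed typical value, in meters
    for distance in (0.5, 1.0, 5.0):
        angle = vergence_for_distance(eye_separation, distance)
        print(distance, math.degrees(angle))
```

The angle shrinks quickly as the object moves away, which is one reason stereo perception works best for nearby objects.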

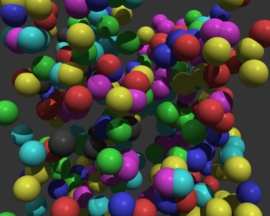

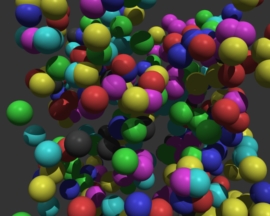

Figure 1 - A Stereo Pair of Images. Cross your eyes so that the two images overlap as one image in the center. This may be easier if you move back from the page a bit. After a while, your eyes will lock and re-focus on the center image. When that happens, you will see the 3D layout of the spheres pop out at you. Don't feel bad if you can't see it. I've found that roughly 1/3 of people can see it within a minute, another 1/3 can see it with practice, and the remaining 1/3 seem to have a hard time.

Figure 1 shows stereo viewing in action. When you cross your eyes as directed, your right eye sees the left image, and your left eye sees the right image. For those of you that can see it, the effect is quite pronounced, despite the relatively minor differences between the two images.

So, one way to make a 3D computer graphics display is to render two images from slightly different eye points, then present them separately to each eye. Once again there are several ways of achieving this.
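To make "slightly different eye points" concrete, here is a minimal sketch of how the two eye points might be derived from a single viewpoint. The function name, the tuple representation, and the 64 mm separation are assumptions for illustration only. A real renderer would go on to build a separate view for each eye from these points.

```python
import math

def eye_points(center, right_dir, eye_separation):
    """Return (left_eye, right_eye) positions as 3D tuples.

    center         -- point midway between the eyes
    right_dir      -- any non-zero vector pointing to the viewer's right
    eye_separation -- distance between the eyes, same units as center
    """
    length = math.sqrt(sum(c * c for c in right_dir))
    if length == 0.0:
        raise ValueError("right_dir must not be the zero vector")
    unit = tuple(c / length for c in right_dir)
    half = eye_separation / 2.0
    # Shift half the separation to each side of the center point.
    left = tuple(c - half * u for c, u in zip(center, unit))
    right = tuple(c + half * u for c, u in zip(center, unit))
    return left, right

if __name__ == "__main__":
    # Viewer standing at the origin with eyes 1.7 units up, +X to the right.
    left, right = eye_points((0.0, 1.7, 0.0), (1.0, 0.0, 0.0), 0.064)
    print("left eye: ", left)
    print("right eye:", right)
```

The scene is then rendered once from each point, and each result is shown only to the matching eye.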

This is exactly what shutter glasses are. They typically use electronic shutters made with liquid crystals. Another variation places the shutter over the monitor screen. Instead of blocking or unblocking the light, this shutter changes the light polarization between the left and right eye images. You can now wear passive polarizing glasses where the polarizer for each eye only lets through the image that was polarized for that eye.

While this form of stereo viewing can provide good 3D perception and is suitable for many tasks, I don't personally consider this VR yet. The image or 3D scene is still "stuck" in the monitor. You are still looking at the picture in a box, instead of being in the scene with the objects you are viewing.

Figure 2 - Shutter Glasses and Controller. CrystalEyes product by StereoGraphics. This image was swiped from their web page.

I don't think of this by itself as VR yet either, although we're getting closer. You can have a reasonable field of view with great latitude in the placement of 3D objects, but the objects appear to move with your head. That's certainly not what happens when you turn your head in Real Reality.

Figure 3 - Head Mounted Display in Use. The head mounted display is a Virtual Research VR4000. This image was swiped from their web page.

Now we've reached the minimum capabilities I'm willing to call VR.

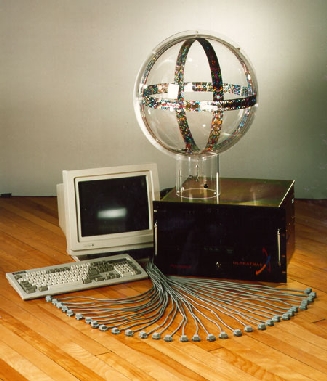

Figure 4 - Position and Orientation Sensors. UltraTrak product by Polhemus. The position and orientation of each of the sensors on the table are reported to the computer in real time. The data is relative to the large ball on top of the unit, which contains three orthogonal magnetic coils. Individual sensors can be embedded in a head mounted display, fixed to various body parts, or mounted on other objects that move around. This image was swiped from the Polhemus web page.

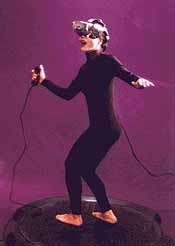

Hand and finger position and orientation are typically sensed by wearing a special glove that has a position sensor on it and can sense the angles of your finger joints. This can be taken a step further by wearing a whole suit with embedded joint angle sensors.

Figure 5 - Glove that senses joint angles. This is the CyberGlove by Virtual Technologies. It reports various joint angles back to the computer. This image was swiped from a Virtual Technologies web page.

Note that force feedback is currently limited to "pushing back" to simulate the existence of an object. It does not provide other parts of what we call tactile feel, like texture, temperature, etc.

Figure 6 - Haptic Feedback Glove. No, this isn't some medieval torture device. It's the Cybergrasp product by Virtual Technologies. The cables and pulleys on the outside of the glove can be used to "push back" at the operator under computer control. This can be used, among other things, to allow the wearer to feel the presence of objects in the virtual world.

This concept was first developed in 1991 at the Electronic Visualization Lab of the University of Illinois at Chicago. Several other CAVE systems have since been set up at other sites.

Figure 7 - Virtual Reality Gaming. An Ascension Technology SpacePad motion tracker is being used in conjunction with a head mounted display and hand buttons to implement a gaming station. This image was swiped from the Ascension Technology web page.

This could be useful and worth all the trouble in situations where you can't physically go, or you wouldn't be able to survive. Examples might be in hostile environments like high radiation areas in nuclear power plants, deep undersea, or in outer space. The robot also doesn't need to model a human exactly. It could be made larger and stronger to perform more physical labor than you could, or it might be smaller to fit into a pipe you couldn't. Remote robotics could also be a way to project a special expertise to a remote site quicker than bringing a person there. Some law enforcement and military applications also come to mind.

For example, the military is using VR to create virtual battles. Each soldier participates from his own point of view in an overall simulation that may involve thousands of individuals. All participants need not be in physical proximity, only connected on a network.

This technology is still in its infancy, but evolving rapidly. I expect commercial applications of distributed collaboration to slowly gain momentum over the next several years.

Figure 8 - Simulated picture of VR used in visualization. This picture tries to show us what the virtual world looks like to the two engineers. Some of this is wishful thinking, but it still does a good job of illustrating the concepts. Note that no finger position sensors or force feedback devices are apparent, even though the bottom engineer is selecting virtual menu buttons, and the other engineer seems to be resting his hand on the model. Also, anyone in the room not wearing a display would not be able to see the model at all. This image was swiped from the Virtual Research home page.

The standard 30 Hertz update rate comes from existing systems intended for displaying moving images on a fixed monitor or other screen. With VR, you also have to take into account how fast something needs to be updated as the user's head turns to present the illusion of a steady object. Apparently, that requires considerably more than 30 Hertz for the full effect.
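A rough way to see why head motion raises the bar is to work out how far the head turns between two display updates. The sketch below is just that arithmetic. The 90 degrees per second head speed is an assumed figure for illustration, not a measured one, and the function name is my own.

```python
def degrees_per_update(head_speed_deg_per_s, update_rate_hz):
    """Angle the head sweeps through between two display updates."""
    if update_rate_hz <= 0:
        raise ValueError("update rate must be positive")
    return head_speed_deg_per_s / update_rate_hz

if __name__ == "__main__":
    head_speed = 90.0  # assumed head turning speed, degrees per second
    for rate in (30.0, 60.0, 120.0):
        step = degrees_per_update(head_speed, rate)
        print(rate, "Hz:", step, "degrees between updates")
```

At this assumed speed, a 30 Hertz display leaves 3 degrees of head rotation between updates, while 60 Hertz cuts that to 1.5 degrees. An object that is supposed to stay put will appear to jump by that amount unless the image is refreshed more often.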
