Accelerated Natural Language Processing

Tutorial 3: Grammar and parsing

The goal of this tutorial is to review and extend our exploration of (English) grammar, the meaning of parse trees and approaches to (context-free) parsing.

When constructing a grammar, one important goal is to accurately reflect the meaning of sentences in the structure of the trees the grammar assigns to them. Assuming a compositional semantics, this means we would expect attachment ambiguity in the grammar to reflect alternative interpretations.

Here are some grammar rules which result in an attachment ambiguity. We used the phrases "hot tea and coffee" versus "empty bottles and fag-ends" to illustrate this.

The following two rules would allow us to get two different structures for those phrases:

Nom → Adj Nom

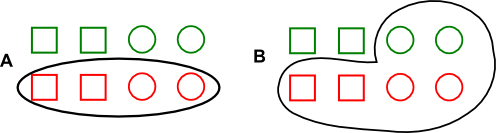

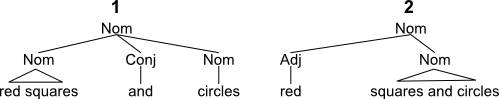

Nom → Nom Conj NomConsider the phrase "red squares and circles" and the following pictures and trees:

Question 1: Which tree goes with which interpretation? Why?

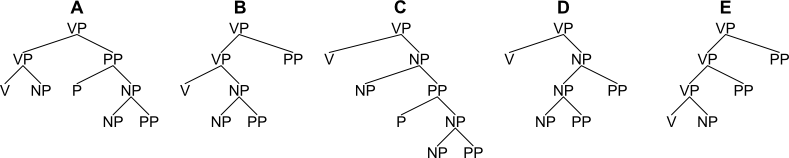
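You can check mechanically that the two Nom rules give "red squares and circles" exactly two structures by using a brute-force tree enumerator. Below is a minimal Python sketch. It assumes a hypothetical lexicon (Adj → red, Nom → squares | circles, Conj → and) that the tutorial does not give. For each rule, it tries every way of splitting the span among the rule's children. Its running time is exponential, so it only suits toy phrases like this one.

```python
from itertools import product

# The two Nom rules from the tutorial: parent -> list of right-hand sides
RULES = {
    "Nom": [["Adj", "Nom"], ["Nom", "Conj", "Nom"]],
}

# Hypothetical lexicon (not given in the tutorial), just enough for this phrase
LEXICON = {
    "red": {"Adj"},
    "squares": {"Nom"},
    "and": {"Conj"},
    "circles": {"Nom"},
}


def splits(start, end, parts):
    """Yield every way to cut the span [start, end) into `parts` non-empty pieces."""
    if parts == 1:
        yield [(start, end)]
        return
    for mid in range(start + 1, end - parts + 2):
        for rest in splits(mid, end, parts - 1):
            yield [(start, mid)] + rest


def trees(cat, words, start, end):
    """Return every tree of category `cat` over words[start:end] as a bracketed string."""
    results = []
    # Lexical case: a single word listed under `cat`
    if end - start == 1 and cat in LEXICON.get(words[start], set()):
        results.append(f"({cat} {words[start]})")
    # Phrasal case: try each rule with each split of the span among its children
    for rhs in RULES.get(cat, []):
        if end - start < len(rhs):
            continue
        for spans in splits(start, end, len(rhs)):
            options = [trees(child, words, s, e) for child, (s, e) in zip(rhs, spans)]
            for children in product(*options):
                results.append(f"({cat} {' '.join(children)})")
    return results


words = "red squares and circles".split()
for tree in trees("Nom", words, 0, len(words)):
    print(tree)
```

The sketch prints two bracketings. One has Nom → Adj Nom at the root and the other has Nom → Nom Conj Nom at the root. Deciding which bracketing matches which picture is still left to you.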

Here are some more grammar rules, which we've seen before. They result in attachment ambiguity for prepositional phrases:

VP → V NP

VP → VP PP

NP → NP PP

PP → P NP

Here are five verb phrases:

And finally here are five partial trees:

Question 2: Match the phrases to the trees which best capture their meanings. You may find it helpful to ask yourself questions such as "where did this event happen?", "how was it done?", "what was watched?". You may also want to try out (in pencil!) different ways of writing the phrases under the leaves of the various trees, as in the trees given above for Question 1.

Ditransitive verbs such as "give" and "lend" take both a direct and an indirect object. In case-marking languages, the indirect object is marked with a dative inflection. English has two alternative forms. In one, the indirect object is marked with the preposition "to" and follows the direct object, as in "I will lend my copy to whoever asks first". In the other, it is unmarked and precedes the direct object, as in "I will lend you my copy".

If we take a simple approach to capturing this in our grammar by adding the rules

VP → Vdi NP PP

VP → Vdi NP NP

then we open the door to some further attachment ambiguities.

Question 3: Draw trees for the following sentences, at the same level of detail as for Question 2:

Broadly speaking, many languages have a small number of top-level sentence types:

Suppose we start our grammar with the following four rules:

S → Sdecl | Swhq | Synq | Simp

Question 4: Suggest rules for each of the new sub-types of S just introduced. Start by writing some rules for VP which handle, e.g., "I can swim", "he did swim" and "the duck may have flown". It may help to consider other sentences with words like "can", "will", "shall" and "do", and how these behave. Then, once you have rules to handle declarative sentences, consider what rules you need to add (or modify) to get the other sentence types.

You don't need to worry about morphology or agreement, and you can assume a simple grammar for NP such as those that have appeared in the lectures. Try to be economical: try to find more than one use for any new categories you introduce.

Declarative sentences may appear inside other sentences. Consider "I told him that I had seen the comet" and "The farmer denied that he had killed the duckling".

Question 5: Suggest some further rules to capture these sentences. Bear in mind that "I told him a story" and "The farmer denied the accusation" are also sentences.

Question 6: What further possibilities for attachment ambiguity do your new rules introduce? Give some example sentences that illustrate the alternatives.

Both the lectures and Jurafsky and Martin include worked examples of CKY parsing. Use the following Chomsky normal form grammar:

S → NP VP

VP → V NP

VP → VP PP

NP → D N

NP → NP PP

PP → P NP

V → swam | ran | flew

VP → swam | ran | flew

D → the | a | an

N → pilot | plane

NP → Edinburgh | Glasgow

P → to

Question 7: Draw a 7x7 matrix for the sentence "the pilot flew the plane to Glasgow" and fill it in according to the CKY algorithm. Number the symbols you put in the matrix in the order in which they would be computed, assuming the grammar rules are searched from top to bottom.

Question 8: How is the attachment ambiguity present in this sentence reflected in the matrix at the end?
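The following minimal Python sketch of a CKY recognizer for the grammar above can help you check a hand-filled chart. It fills the matrix column by column, left to right, and fills each column from the bottom up. It follows the column-by-column order used in Jurafsky and Martin's presentation, but treat that ordering as an assumption. The sketch does not number the symbols as Question 7 asks. Indices are fenceposts between words, so cell [i,j] covers words i+1 to j. Rows 0 to 6 and columns 1 to 7 therefore make up the 7x7 matrix. Each cell stores a count for every category rather than a plain set of categories: the count is the number of ways that category can be built over the span.

```python
from collections import defaultdict

# Binary rules of the CNF grammar above, in top-to-bottom order: (parent, left, right)
BINARY = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),
    ("NP", "D", "N"),
    ("NP", "NP", "PP"),
    ("PP", "P", "NP"),
]

# Lexical rules, indexed by word: every category that can rewrite as that word
LEXICON = {
    "swam": ["V", "VP"], "ran": ["V", "VP"], "flew": ["V", "VP"],
    "the": ["D"], "a": ["D"], "an": ["D"],
    "pilot": ["N"], "plane": ["N"],
    "Edinburgh": ["NP"], "Glasgow": ["NP"],
    "to": ["P"],
}


def cky(words):
    """Fill a CKY chart; chart[i][j] maps each category to its number of parses of words[i:j]."""
    n = len(words)
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for j in range(1, n + 1):
        # Cell [j-1, j]: categories for the j-th word
        for cat in LEXICON[words[j - 1]]:
            chart[j - 1][j][cat] += 1
        # Other cells of column j, filled bottom to top
        for i in range(j - 2, -1, -1):
            for k in range(i + 1, j):
                for parent, left, right in BINARY:
                    if left in chart[i][k] and right in chart[k][j]:
                        # Each left parse can combine with each right parse
                        chart[i][j][parent] += chart[i][k][left] * chart[k][j][right]
    return chart


words = "the pilot flew the plane to Glasgow".split()
chart = cky(words)
for i in range(len(words)):
    for j in range(i + 1, len(words) + 1):
        if chart[i][j]:
            print(f"[{i},{j}] {' '.join(words[i:j])}: {dict(chart[i][j])}")
```

Each printed line gives a span, its words, and the categories found for it with their counts. Storing counts instead of plain sets costs little extra and makes it visible when the same symbol in a cell has more than one derivation.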