1. Words, and other words

A brief introduction to machine translation

Machine Translation covers a wide range of goals

- From FAHQUMT

- Fully Automatic High Quality Unrestricted MT

- To MAHT

- Machine-Assisted Human Translation

- FAHQUMT remains a dream: but hope springs eternal

- MAHT is big business, but not of much theoretical interest

The contrast between hyped-up promises of success and poor actual

performance led to

- The ALPAC report (1966)

- Found that many years of research had failed to meet expectations

- USA has no shortage of translators

- Fully automatic MT doesn’t really work, quality hasn’t improved much

- it isn’t clear if it will ever work

“The Committee indeed believes that it is wise to

press forward undaunted, in the name of science, but that the motive

for doing so cannot sensibly be any foreseeable improvement in

practical translation. Perhaps our attitude might be different if

there were some pressing need for machine translation, but we find

none.”

- The end of substantial funding for MT in the US for nearly

20 years

2. From knowledge-rich to machine-learning

MT has followed the same trajectory as many other aspects of speech and

language technology

- Historically, MT systems were based on one or more levels of linguistic analysis

- The largest and most heavily used MT system in the world worked like

this until very recently

- SYSTRAN, used by the EU to help with its translation load of over 2

million pages a year

- But most MT work today is based on one form or another of noisy channel decoding

- With language and channel models being learned from corpora

3. Before his time: Warren Weaver

Stimulated by the success of the codebreakers at Bletchley Park

(including Alan Turing), Weaver had a surprisingly prescient idea:

[...] knowing nothing official about, but having guessed and inferred

considerable about, powerful new mechanized methods in cryptography. . .one naturally wonders if

the problem of translation could conceivably be treated as a problem

in cryptography. When I look at an article in Russian, I say: “This

is really written in English, but it has been coded in some strange

symbols. I will now proceed to decode.”

Have you ever thought about this? As a linguist and expert on

computers, do you think it is worth thinking about?

from a letter from Warren Weaver to Norbert Wiener, dated March 4, 1947

4. A very noisy channel

Applying the noisy channel model to translation requires us to stand

normal terminology on its head

- Usually we talk about source and target languages

- For example, when translating Братья Карамазовы into English

- Russian is the source

- English is the target

But from the perspective of the noisy channel model

- The source is English

- The channel distorts this into what we see or hear, that is, Russian

- Which we have to decode

- to get to the source

- which is the target

- :-)

5. Priors and likelihood for MT

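Remember the basic story (using e for English and r for Russian):

$$\hat{e} = \operatorname*{argmax}_{e} P(e \mid r) = \operatorname*{argmax}_{e} P(r \mid e)\,P(e)$$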

The prior is just our old friend, some form of language model

But the channel model needs to be articulated a bit for translation, in several ways

- The source and target need not have the same number of words

- And the mapping part, on even a fairly simple view, has to do

two things:

- Not just translate the words

- But re-order them as well

So we need a channel model that takes all of these into account
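As a minimal sketch of the decoding story above, the following picks the English candidate that maximises likelihood times prior. The candidate sentences and every probability in it are invented for illustration; a real system would get them from a trained language model and channel model.

```python
import math

# Toy, invented numbers purely for illustration.
# Prior P(e): a language model score for each candidate English sentence.
language_model = {
    "the brothers Karamazov": 0.6,
    "the Karamazov brothers": 0.3,
    "brothers the Karamazov": 0.1,
}

# Likelihood P(r | e): how probable the observed Russian is given each candidate.
channel_model = {
    "the brothers Karamazov": 0.5,
    "the Karamazov brothers": 0.4,
    "brothers the Karamazov": 0.5,
}


def decode(candidates, prior, likelihood):
    """Return argmax_e P(r | e) * P(e), computed in the log domain."""
    return max(
        candidates,
        key=lambda e: math.log(likelihood[e]) + math.log(prior[e]),
    )


best = decode(list(language_model), language_model, channel_model)
print(best)
```

Note that the channel model alone cannot separate the first and third candidates here; it is the prior that rules out the badly ordered English.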

6. Translation modeling for MT

J&M 2nd ed. Chapter 25 takes you through a step-by-step motivation for the

first successful attempt at doing things this way

- By the IBM team, working from French into English

- Using Canadian Hansard for training and testing

- And a variety of HMM-based decoding methods

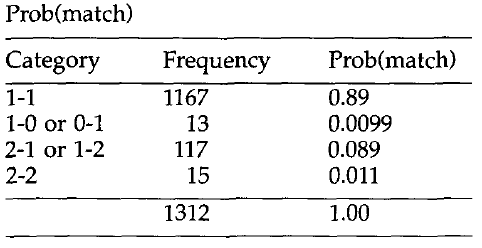

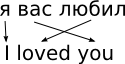

All their approaches start with a formal notion of alignment

- A (possibly one-to-many) mapping from source word position to

position in the observation sequence

- So, for our trivial Russian example, this would be

1 3 2

- Because the second and third words exchange positions between English and Russian

7. Translation modelling, cont'd

Their simplest model then has three conceptual steps:

- Choose a length for the Russian, given the length of the English

- Remember, from the model's perspective we are generating the

Russian observation, starting from the English source

- Think of the POS-tagging HMM

- Which 'generates' English words (the observations) from a sequence

of POS tags (the source)

- Choose an alignment from the words in the source (English) to the

words in the observations (Russian)

- For each position in the Russian, choose a translation of the English

word which aligns to it

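Following simplifying assumptions of the usual Markov nature, we end up with

$$P(r_1^J, a_1^J \mid e_1^I) = P(J \mid I) \times \prod_{j=1}^{J} P(a_j \mid a_{j-1}, I)\, P(r_j \mid e_{a_j})$$

Where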

- $I$ and $J$ are the (observed) length of the English and the (hypothesised) length of the Russian, respectively

- $a_j$ is the alignment of the jth Russian word

For the translation model before we do the Bayes rule switch

and the argmax

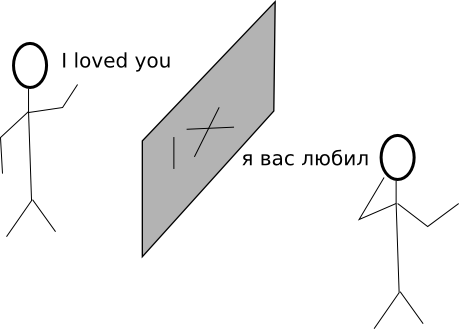
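The three conceptual steps (choose a length, choose an alignment, choose translations) can be sketched as a function that scores one particular alignment. This is an illustration under stated assumptions: the three component distributions are passed in as plain functions, the start position 0 is a convention chosen here, and the words `e1`..`e3`, `r1`..`r3` and all the numbers are hypothetical placeholders.

```python
import math


def joint_probability(english, russian, alignment, length_prob, jump_prob, trans_prob):
    """P(russian, alignment | english) under the three-step generative story.

    alignment[j] is the 1-based English position that generates Russian word j.
    """
    I, J = len(english), len(russian)
    p = length_prob(J, I)                 # step 1: choose the Russian length
    prev = 0                              # assumed start position before the first word
    for j, a_j in enumerate(alignment):
        p *= jump_prob(a_j, prev, I)      # step 2: choose where Russian word j aligns
        p *= trans_prob(russian[j], english[a_j - 1])  # step 3: choose the translation
        prev = a_j
    return p


# Hypothetical toy components: fixed length probability, uniform alignment
# choice, and a tiny translation table.
table = {("r1", "e1"): 0.9, ("r2", "e2"): 0.9, ("r3", "e3"): 0.9}

p = joint_probability(
    english=["e1", "e2", "e3"],
    russian=["r1", "r3", "r2"],           # second and third words swapped
    alignment=[1, 3, 2],
    length_prob=lambda J, I: 0.5,
    jump_prob=lambda a, prev, I: 1.0 / I,
    trans_prob=lambda r, e: table.get((r, e), 0.01),
)
print(p)
```

With a uniform `jump_prob` the alignment term carries no information; a trained model would prefer some jumps over others.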

8. Contemporary MT

We've barely scratched the surface

But state-of-the-art MT systems today all derive from essentially this

starting point

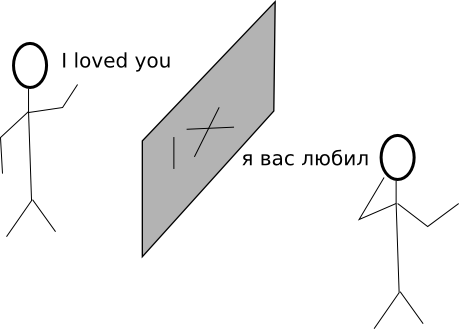

- Including Google Translate

- Which used to think that "я вас любил" should be translated as

"I loved you more"

- Of course it's clearer in the context of the whole Pushkin poem from

which the phrase is extracted

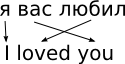

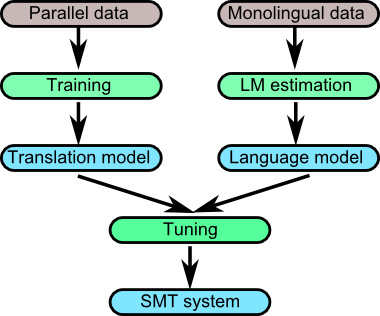

9. Getting started: The role of data

Broadly speaking, we have two models to learn:

- The language model: we've seen this already

- Target language data

- I.e. monolingual

- Lots of it

- Such as the LDC's Gigaword corpus

- The translation model: for word-word alignment and word/phrase translation

- Bilingual

- Harder to get lots

10. Getting started: segmentation and sentence alignment

Just as with other corpora, we need to pre-process the raw materials

- Normalise markup

- Check for and correct character encoding problems

- Segment and normalise

- tokens

- morphemes?

- sentences

- paragraphs

- down-case at beginning of sentences, maybe

- tag

These will vary in difficulty given the form of the raw data

- And the language involved

But for the translation model, with respect to the bilingual data, we need more

- We need to align the two versions at the paragraph and sentence level

- Sentence level is not always 1-to-1

11. Sentence alignment details: Gale and Church (1993)

Assumptions:

- We start with two documents

- In source and target languages

- Translations of one another

- Sentence order is rarely if ever changed

- If paragraphs exist, they are already aligned

Paragraph by paragraph, the algorithm matches source sentences to zero, one or

two target sentences

- Sentence may be deleted by translator

- Sentence may be split into two by translator

- In either direction

- We don't actually always know which was the original

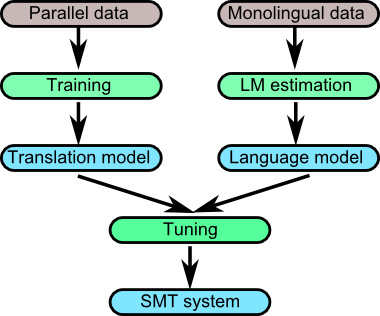

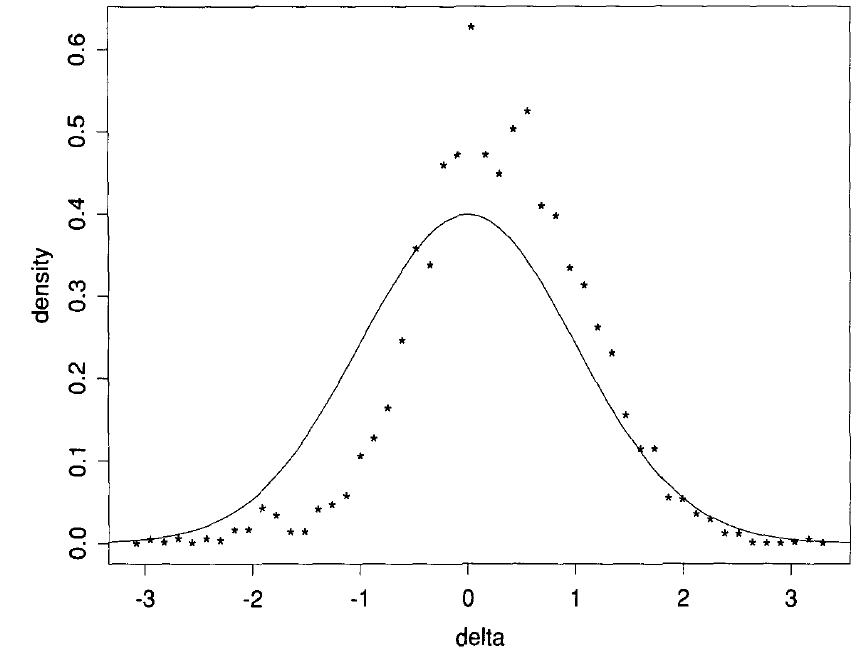

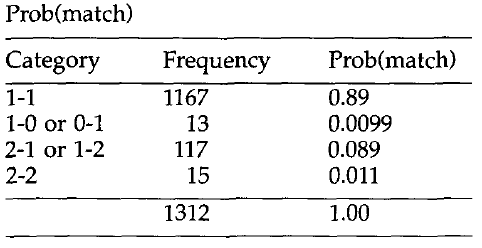

12. Gale and Church, cont'd

Start with some empirical observations:

What does a hand-aligned corpus tell us about sentence alignment?

- That gives G&C the basis for a maximum likelihood estimate of $P(\text{match})$

- Where by match is meant a particular alignment choice

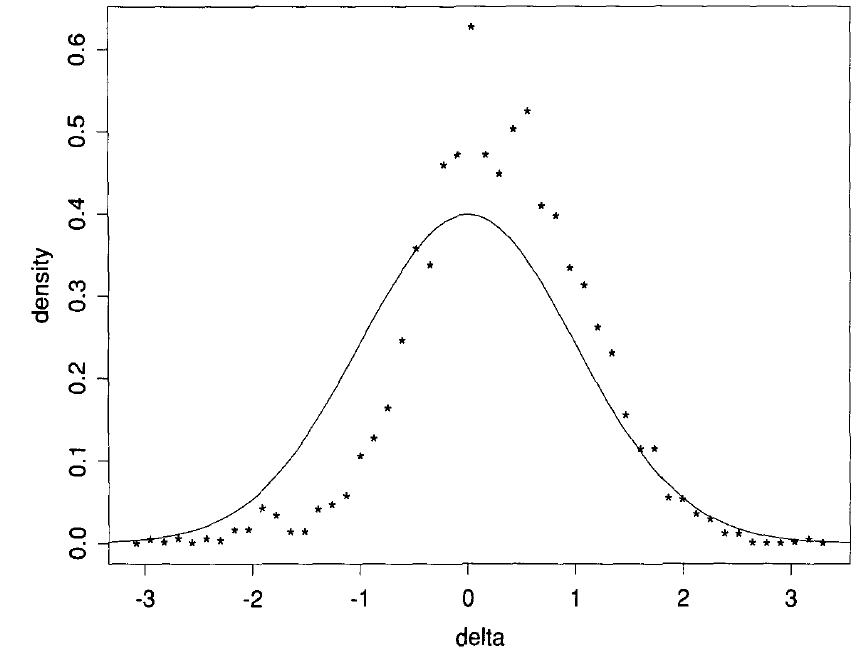

What about relative length?

- If we suppose lengths (in characters) are

normally distributed around equality

- With standard deviation estimated from the same hand-aligned corpus

- We get this picture when we plot the actual z-scored ratio

From this kind of data G&C get what they need to feed into a dynamic programming search

for the optimal combination of local alignments within a paragraph

- Source sentences on one edge

- Target along the other

- Dynamic programming in this case is similar to spelling correction

- With costs coming from the formula above, drawing on six

possible 'moves'

- deletion, insertion, substitution

- two-for-one, one-for-two, two-for-two

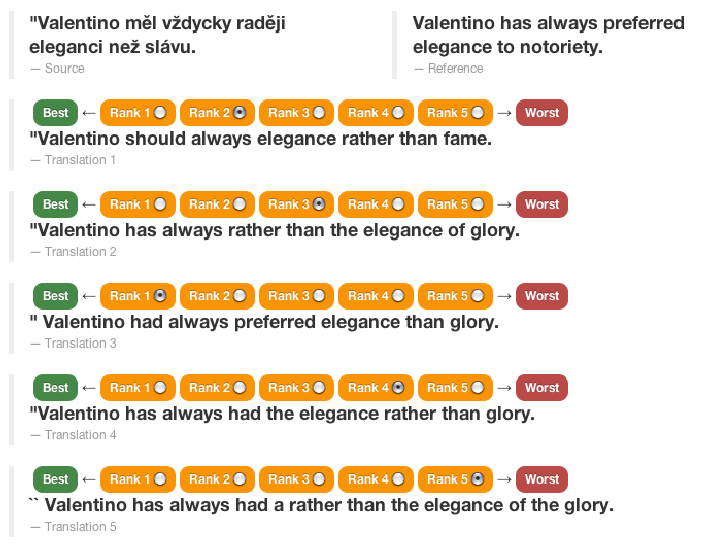

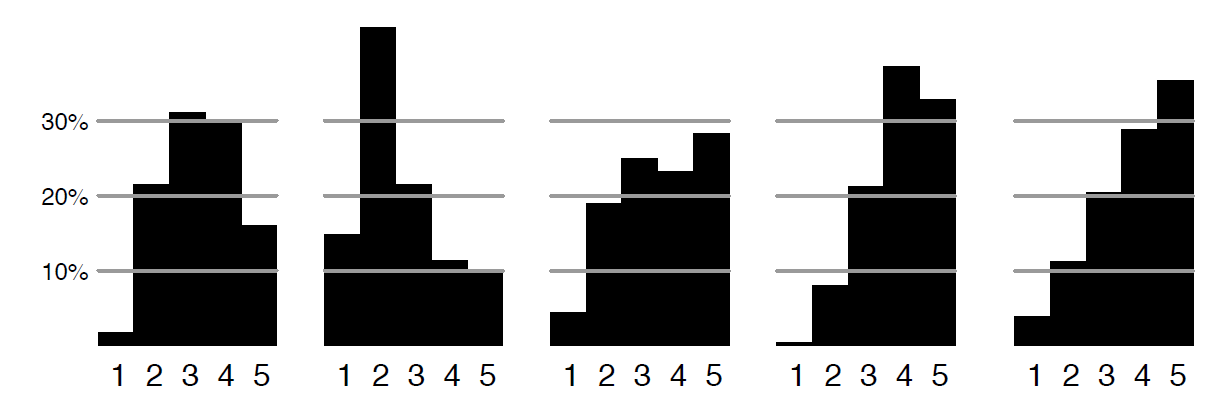

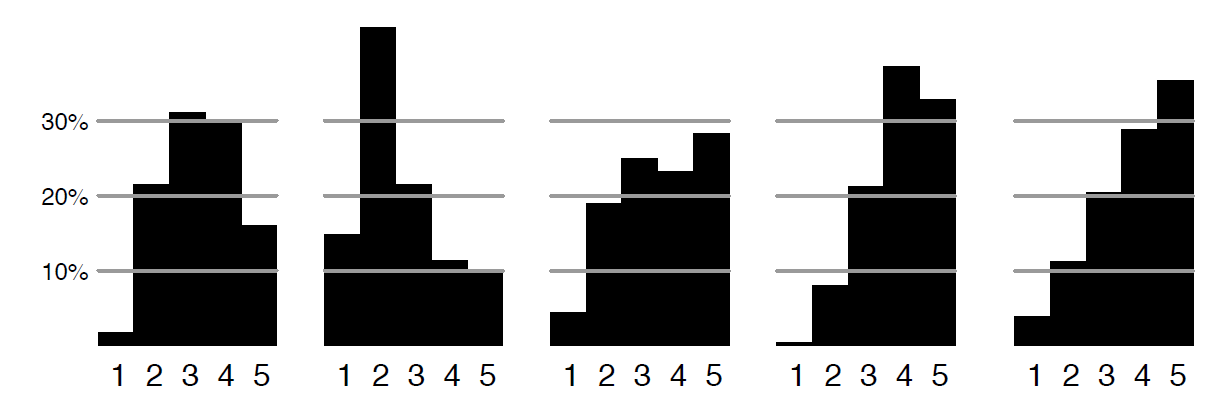
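A rough sketch of this kind of length-based dynamic programming search follows. It is not G&C's own code: the prior probabilities for the six moves and the variance parameter are illustrative values assumed here, and the cost of a move is taken to be the negative log of the move prior plus the negative log of the two-tailed probability of the z-scored length difference.

```python
import math

# Illustrative parameters: assumed for this sketch, not taken from the slides.
PRIORS = {(1, 1): 0.89, (1, 0): 0.005, (0, 1): 0.005,
          (2, 1): 0.045, (1, 2): 0.045, (2, 2): 0.01}
C = 1.0    # expected target characters per source character
S2 = 6.8   # variance of the length difference, per character


def move_cost(len_src, len_tgt, prior):
    """-log P(match) - log P(delta | match) for one candidate move."""
    mean = (len_src + len_tgt / C) / 2
    delta = (len_tgt - len_src * C) / math.sqrt(mean * S2)
    # Two-tailed probability of a z-score at least this extreme.
    p_delta = max(math.erfc(abs(delta) / math.sqrt(2)), 1e-300)
    return -math.log(prior) - math.log(p_delta)


def align(src_lens, tgt_lens):
    """Align sentences given their lengths in characters.

    Returns a list of (source indices, target indices) pairs.
    """
    n, m = len(src_lens), len(tgt_lens)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    back = [[None] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(n + 1):
        for j in range(m + 1):
            for (di, dj), prior in PRIORS.items():
                if i < di or j < dj or cost[i - di][j - dj] == inf:
                    continue
                c = cost[i - di][j - dj] + move_cost(
                    sum(src_lens[i - di:i]), sum(tgt_lens[j - dj:j]), prior)
                if c < cost[i][j]:
                    cost[i][j], back[i][j] = c, (di, dj)
    # Trace the cheapest path back from the bottom-right corner.
    moves, i, j = [], n, m
    while i > 0 or j > 0:
        di, dj = back[i][j]
        moves.append((list(range(i - di, i)), list(range(j - dj, j))))
        i, j = i - di, j - dj
    return moves[::-1]


# Second source sentence (90 chars) was split into two target sentences.
result = align([20, 90, 30], [22, 44, 45, 31])
print(result)
```

As in spelling correction, each cell of the table holds the cheapest way of aligning the sentences seen so far, so the cost of the whole paragraph is read off the final cell.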

13. Evaluation-driven development

From 2006 to 2014, an annual competition was held

- The 'Workshop' on Statistical Machine Translation (WMT)

Shared task, many language pairs

- Participants given corpora with which to train their MT systems

- They get a test set to translate and submit

- Submissions are scored

- Participants write papers on how they built their systems for the conference

14. Evaluation

How can we evaluate systems?

As with other similar tasks, in one of two ways:

- Extrinsic evaluation

- Measure the utility of the

result with respect to some (real) use

- As first step in HQ production: how much post-editing required?

- Comprehension tests

- As basis for search or information retrieval: measure quality of

that result

- Intrinsic evaluation

- Measure the quality of the result against some (more-or-less explicit) standard

- Human quality assessment

- Automatic comparison to gold standard

Any measure involving humans is

- Slow

- Expensive

- Hard to ensure fairness

- Not stable

- Judges disagree with each other

- And with themselves (from one trial to the next)

Any automatic measure is

- Only as good as the gold standard it uses

- Misleading if based on a single translation: there is no one 'right' answer

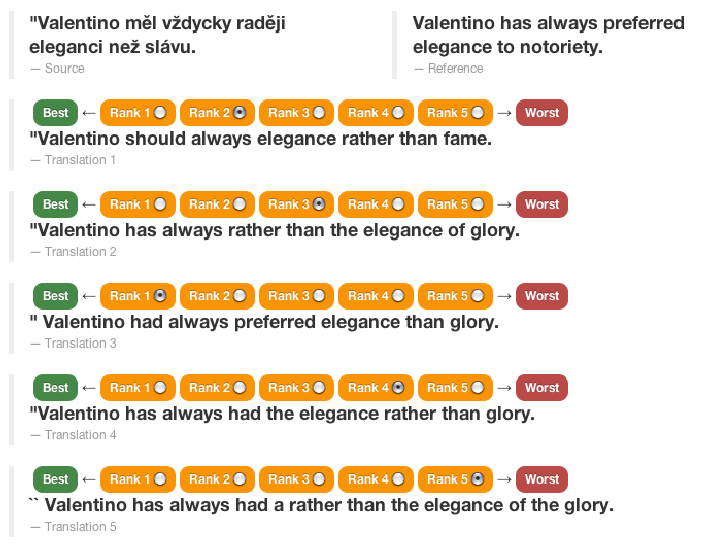

15. Human evaluation

One or more judges, working from

- MT system output

- Original

- Reference translation(s) (maybe)

Different dimensions for judgement

- How well is the meaning of the source preserved?

- How fluent is the result in the target language?

Typically judged on a numeric scale

- Which is misleading

- You cannot treat the results as ordinary numbers: the scale is ordinal at best

- That is, you can't compute mean, variance, etc.

As mentioned before, agreement can be a problem

16. Automatic evaluation

There was no accepted automatic evaluation measure for MT for a long time

- A problem given the evaluation-driven funding ideology

- Single-reference an obvious problem

The advent of the BLEU methodology (BiLingual Evaluation

Understudy) around 2000 helped a lot

- By Papineni and colleagues at IBM T. J. Watson Labs

It correlates surprisingly well with human judgements

- Although it's nowhere near perfect

- It's good enough at least for now

17. A digression about headroom

When you need numerical scores to facilitate hillclimbing

- It really matters how far you are from your goal

If your system is doing pretty well already

- You need a very accurate measure to reliably detect improvement

But if you're doing pretty badly

- A rough-and-ready measure will be just fine

So we can ask "How much headroom do we have?"

- MT has plenty of headroom

- Thus the remark above that BLEU is "good enough for now"

18. BLEU: overview

BLEU starts from the observation that just getting the 'right' words

counts for a lot

- But goes beyond that some way towards checking that they're in the

'right' order

- And allows for multiple reference translations

So BLEU counts not just word overlap but n-gram overlap between the candidate output sentence and the reference translation(s)

Two parts to the evaluation of each sentence:

- Modified precision score for n-grams of size 1 to 4

- A penalty for short translations

- Essentially a recall measure

Combined over paragraphs or documents

19. A digression about precision and recall

To test the overlap between two sets

- Results for a document retrieval request vs. the 'correct' set

- Word boundaries hypothesised for a Chinese sentence vs. the ones actually there

- Words in a candidate translation vs. words present in the union of a

reference set of translations

We need to answer two questions

What proportion of the answers are right?

- That's precision

What proportion of the truth was found?

- That's recall

You need both

- A system which makes one and only one guess, and gets it right

- has 100% precision

- but very low recall

- And one which guesses the whole lexicon every time

- has 100% recall

- but very low precision
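The two extreme cases above are easy to check numerically. The following is a small sketch; the 'true' word set and the 100-word lexicon are made up for the purpose.

```python
def precision_recall(guessed, truth):
    """Precision and recall of a set of guesses against the true set."""
    guessed, truth = set(guessed), set(truth)
    correct = len(guessed & truth)
    precision = correct / len(guessed) if guessed else 0.0
    recall = correct / len(truth) if truth else 0.0
    return precision, recall


truth = {"the", "cat", "sat", "on", "mat"}

# One guess only, and it is right: perfect precision, poor recall.
cautious = precision_recall({"cat"}, truth)

# Guess the whole (invented, 100-word) lexicon: perfect recall, poor precision.
lexicon = truth | {f"word{i}" for i in range(95)}
greedy = precision_recall(lexicon, truth)

print(cautious, greedy)
```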

20. BLEU: Three versions of the formula

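As described, the BLEU formula is a product

$$\text{BLEU} = BP \times \left(\prod_{n=1}^{4} p_n\right)^{1/4}$$

- Of the brevity penalty

$$BP = \begin{cases} 1 & \text{if } c > r \\ e^{1 - r/c} & \text{if } c \le r \end{cases}$$

- Where c is the number of words in the candidate

- And r is the number of words in the reference

- And the geometric mean of the modified n-gram precisions

- where $p_n$ is the modified precision for n-grams of size $n$

The 4th root is usually expressed via the log domain

$$\text{BLEU} = BP \times \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$$

- As this allows non-uniform weighting if desired

- Where $w_n$ is the weight per contribution of the different n-grams

- Usually a constant, $1/N$

The whole thing is usually then moved into the log domain

$$\log \text{BLEU} = \min\left(1 - \frac{r}{c},\ 0\right) + \sum_{n=1}^{N} w_n \log p_n$$

- For simplicity in presentation, as well as the usual practical reasons

See J&M 2nd ed. section 25.9 for details and a worked example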
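A minimal sketch of the two ingredients, modified n-gram precision and the brevity penalty, for a single tokenised sentence. Several simplifications are assumed here: uniform weights, no smoothing (a zero precision gives a score of zero), scoring one sentence rather than combining counts over a document, and, with several references, taking r to be the reference length closest to the candidate length.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, references, n):
    """Clip each candidate n-gram count by its maximum count in any reference."""
    cand = ngrams(candidate, n)
    if not cand:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
    return clipped / sum(cand.values())


def bleu(candidate, references, max_n=4):
    """Sentence-level BLEU with uniform weights and no smoothing."""
    c = len(candidate)
    if c == 0:
        return 0.0
    # Assumed convention: r is the reference length closest to c.
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    precisions = [modified_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    log_bp = min(1 - r / c, 0.0)          # log of the brevity penalty
    return math.exp(log_bp + sum(math.log(p) for p in precisions) / max_n)


reference = "the cat is on the mat".split()
print(modified_precision("the the the the the the the".split(), [reference], 1))
print(bleu("the cat is on".split(), [reference]))
```

The first call shows why the precision is 'modified': seven copies of 'the' are credited only twice, because 'the' occurs only twice in the reference. The second call shows a candidate whose n-grams all match but which is penalised for being too short.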

21. Getting something to evaluate: Back to translation modelling

How do we learn even the simple model we sketched last time?

We saw how to align sentence (groups) between parallel corpora

- But how do we learn the components of the translation (channel) model?

- That is, the probabilities for the (word) alignment function and the

word-word translations

From the 20,000-foot level, this is similar to the HMM-learning problem

for tagging

- For which the answer was the forward-backward algorithm

That is, we start out with nothing

- That is, we initialise the translation model with either random, or uniform, estimates of probability for all

possible alignments and all possible translations

- Well, not all possible translations

- We'll assume that we have at least the beginnings of a bilingual lexicon

Then, very much as in the forward-backward algorithm

- We'll 'count' what happens with every sentence pair in our aligned corpus

- When we match them using our current model

And use those counts to re-estimate all the probabilities

22. Expectation maximisation

What's common to the approach just described and the forward-backward

algorithm is that they're both examples of expectation maximisation

- Adjusting a model repeatedly until what it sees most often

- Is what it 'expects' to see most often

How can this possibly work?

- Just as with forward-backward

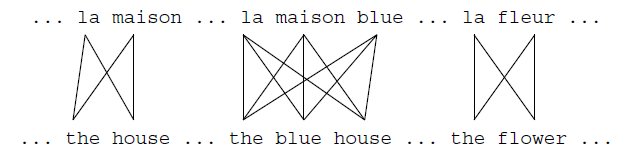

- The data will help

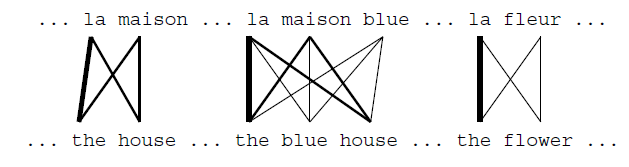

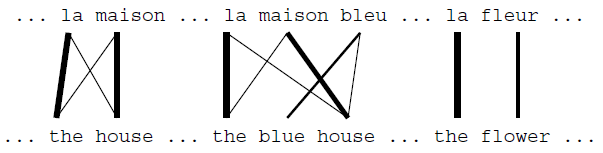

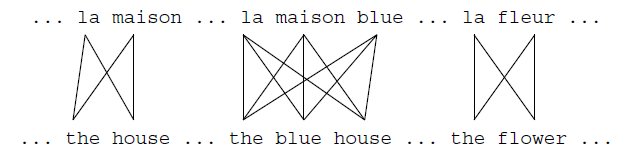

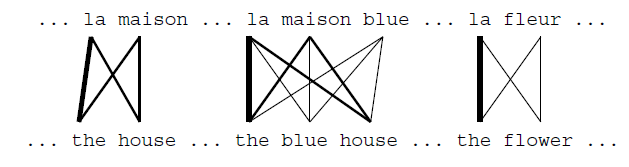

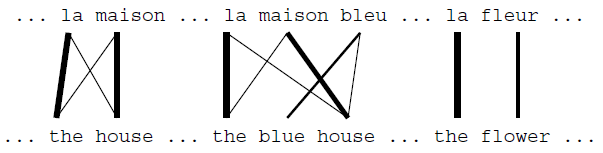

As we iterate over the data, we will 'count' 'la' being realised as 'the' more often than anything else

- Giving us something like this

- And the effect of this helps with other things in turn

What happened with 'fleur' is an instance of the pigeonhole principle

- There's nowhere else plausible for it to map to

23. But this is all changing

Over the last two years, there's been a huge shift in emphasis

- Away from the explicit noisy channel model architecture

- With multiple components

- Carefully trained, weighted and combined

- To deep neural nets

- Which may have several components

- But may not overtly distinguish the channel model from the language model

Google announced a few months ago that Google Translate had made such a shift for some of

the most common language pairs

24. Expectation maximisation, cont'd

By aggregating across all sentence pairs, we can count how much

probability attaches to e.g. the bleu/blue pair, compared to

all the bleu/... pairs, to get a new ML estimate

In the simplest IBM model, so-called IBM Model 1,

they started with the assumption that all alignments were equally likely

The overall shape of their EM process was as follows

- Step 1: Expectation

- For every sentence pair

- For every possible word alignment

- Use the word-word translation probabilities to assign a total probability

- Step 2: Maximisation

- Suppose the assigned values to be true

- Collect probability-weighted counts for all word translation pairs

- Re-estimate the probabilities for every pair

Iterate until convergence

- That is, go back to Step 1 and use the re-estimated word-word probabilities to re-estimate the alignment probabilities
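The loop just described can be sketched in a few lines. This is an illustrative version in the style of IBM Model 1, not the IBM team's code: it assumes all alignments are equally likely, omits the NULL source word, and uses the standard shortcut of computing each word pair's expected count directly rather than enumerating whole alignments. The function name and the tiny corpus are assumptions made for the example.

```python
from collections import defaultdict


def train_model1(pairs, iterations=10):
    """EM for word translation probabilities t[(f, e)] = P(f | e).

    pairs is a list of (english_tokens, foreign_tokens) sentence pairs.
    """
    foreign_vocab = {f for _, fs in pairs for f in fs}
    # Start from uniform estimates.
    t = defaultdict(lambda: 1.0 / len(foreign_vocab))
    for _ in range(iterations):
        count = defaultdict(float)
        total = defaultdict(float)
        # Expectation: share each foreign word's count among the English
        # words in the same sentence pair, in proportion to the current t.
        for es, fs in pairs:
            for f in fs:
                norm = sum(t[(f, e)] for e in es)
                for e in es:
                    frac = t[(f, e)] / norm
                    count[(f, e)] += frac
                    total[e] += frac
        # Maximisation: renormalise the fractional counts.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t


corpus = [("black cat".split(), "chat noir".split()),
          ("the cat".split(), "le chat".split())]
t = train_model1(corpus)
for (f, e), p in sorted(t.items()):
    print(f"t({f}|{e}) = {p:.3f}")
```

Because 'chat' co-occurs with 'cat' in both pairs, its probability mass concentrates there, which in turn pushes 'noir' towards 'black' and 'le' towards 'the'.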

In IBM's first efforts (IBM Model 1)

- training (and testing) on Canadian Hansard

- With no probabilities being learned for the individual word alignment mappings

This approach performed well enough to launch a revolution

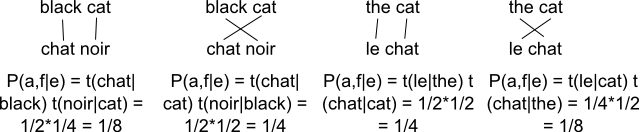

25. Expectation maximisation: simplified example

[Modelled on J&M section 25.6.1 (q.v.) (in turn based on "Knight, K.

(1999b). A statistical MT tutorial workbook. Manuscript prepared for the 1999

JHU Summer Workshop.")]

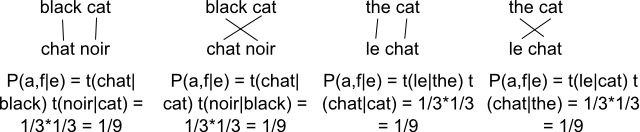

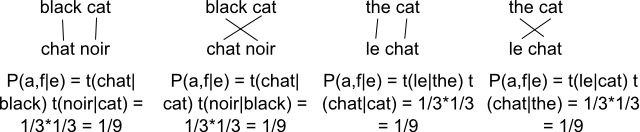

Assume one-to-one word alignments only

So we have

$$P(A, F \mid E) = \prod_{j=1}^{J} t(f_j \mid e_{a_j})$$

Where

- A is an alignment

- F is a foreign sentence

- E is an English sentence

- J is the length of the foreign sentence

- and the English, given our assumption

- t is a conditional word translation probability

And just two pairs of sentences

- "black cat":"chat noir"

- "the cat":"le chat"

Giving the following vocabularies:

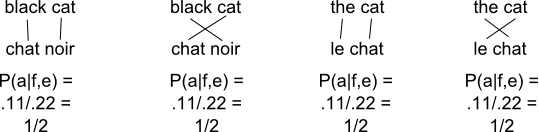

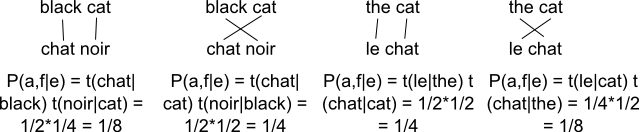

26. EM Example, cont'd

We start with uniform word translation probabilities

| t(chat|black)=1/3 | t(chat|cat)=1/3 | t(chat|the)=1/3 |

| t(le|black)=1/3 | t(le|cat)=1/3 | t(le|the)=1/3 |

| t(noir|black)=1/3 | t(noir|cat)=1/3 | t(noir|the)=1/3 |

Do the Expectation step: first compute the probability of each possible

alignment of each sentence:

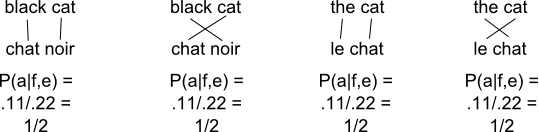

Normalise P(a,f|e) to get P(a|f,e) for each pair by dividing by the sum over all possible alignments for that pair

Finally sum the fractional 'counts' for each pair and each source

| tcount(chat|black)=1/2 | tcount(chat|cat)=1/2 + 1/2 | tcount(chat|the)=1/2 |

| tcount(le|black)=0 | tcount(le|cat)=1/2 | tcount(le|the)=1/2 |

| tcount(noir|black)=1/2 | tcount(noir|cat)=1/2 | tcount(noir|the)=0 |

| total(black)=1 | total(cat)=2 | total(the)=1 |

The maximisation step: normalise the counts to give ML estimates

| t(chat|black)=1/2 / 1 = 1/2 | t(chat|cat)=(1/2 + 1/2) / 2 = 1/2 | t(chat|the)=1/2 / 1 = 1/2 |

| t(le|black)=0 / 1 = 0 | t(le|cat)=1/2 / 2 = 1/4 | t(le|the)=1/2 / 1 = 1/2 |

| t(noir|black)=1/2 / 1 = 1/2 | t(noir|cat)=1/2 / 2 = 1/4 | t(noir|the)=0 / 1 = 0 |

All the correct mappings have increased, and some of the incorrect ones

have decreased!

Feeding the new probabilities back in, what we now see for each alignment is

And the right answers have pulled ahead.
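The arithmetic of this example can be checked with a short script. The sketch below enumerates the one-to-one alignments of each sentence pair explicitly and uses exact fractions so the results can be compared with the tables; the function and variable names are chosen here for illustration.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import permutations

pairs = [(["black", "cat"], ["chat", "noir"]),
         (["the", "cat"], ["le", "chat"])]


def em_step(t):
    """One Expectation step and one Maximisation step over all pairs."""
    count = defaultdict(Fraction)
    total = defaultdict(Fraction)
    for es, fs in pairs:
        # A one-to-one alignment is a permutation of the English positions.
        alignments = list(permutations(range(len(es))))
        joint = []
        for a in alignments:
            p = Fraction(1)
            for j, f in enumerate(fs):
                p *= t[(f, es[a[j]])]       # P(a, f | e) is a product of t values
            joint.append(p)
        z = sum(joint)
        for a, p in zip(alignments, joint):
            for j, f in enumerate(fs):
                count[(f, es[a[j]])] += p / z   # fractional count, weighted by P(a | f, e)
                total[es[a[j]]] += p / z
    return {(f, e): c / total[e] for (f, e), c in count.items()}


# Uniform start: 1/3 for every foreign word given every English word.
english = ["black", "cat", "the"]
foreign = ["chat", "le", "noir"]
t0 = {(f, e): Fraction(1, 3) for f in foreign for e in english}

t1 = em_step(t0)
t2 = em_step(t1)
for key in sorted(t2):
    print(key, t1[key], t2[key])
```

After the first pass the estimates match the table above. Feeding them back in, the alignment chat-cat, noir-black has joint probability 1/2 × 1/2 = 1/4 against 1/8 for the alternative, and likewise le-the, chat-cat has 1/4 against 1/8, so the second pass moves t(chat|cat), t(noir|black) and t(le|the) up to 2/3.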