Some general background on how we got here

Connections back to last year's Informatics 2A

Some administrative details

First closely parallel to, latterly increasingly separated from, the history of linguistic theory since 1960.

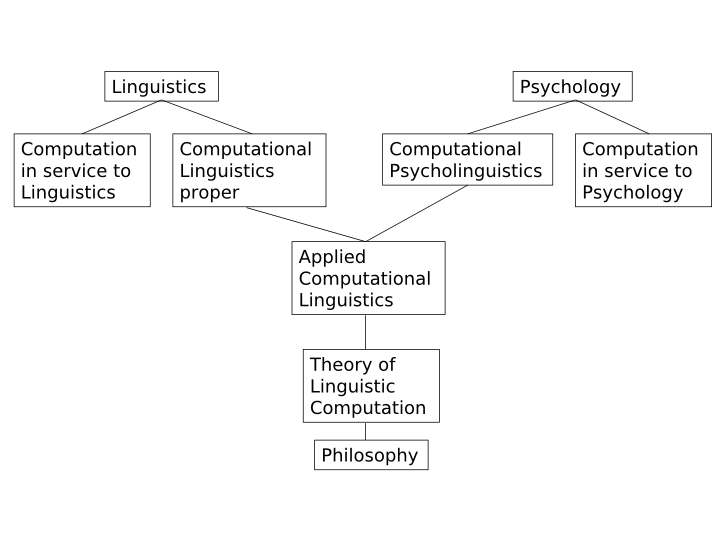

Situated in relation to the complex interactions between linguistics, psychology and computer science:

Originally all the computational strands except the 'in service to' ones were completely invested in the Chomskian rationalist inheritance.

Computational linguistics copied late 20th-century linguistics

So drew extensively on algebra, logic and set theory:

Then added parsing and 'reasoning' algorithms to grammars and logical models

Starting in the late 1970s, in the research community centred around the (D)ARPA-funded Speech Understanding Research effort, with its emphasis on evaluation and measurable progress, things began to change.

(D)ARPA funding significantly expanded the amount of digitised and transcribed speech data available to the research community

Instead of systems whose architecture and vocabulary were based on linguistic theory (in this case acoustic phonetics), new approaches based on statistical modelling and Bayesian probability emerged and quickly spread

Every time I fire a linguist my system's performance improves

Fred Jelinek, head of speech recognition at IBM, c. 1980 (allegedly)

Speech recognition, that is, at least the transcription, if not the understanding, of ordinary spoken language, is one of the major challenges facing Applied Computational Linguistics.

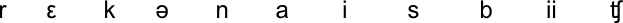

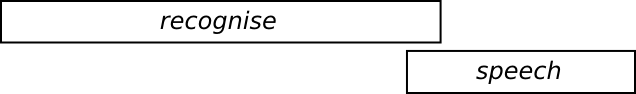

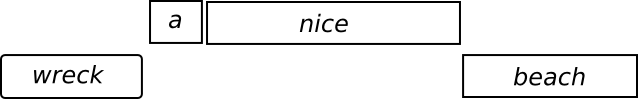

One of the reasons for this is masked by the fact that our perception of speech is hugely misleading: we hear distinct words, as if there were breaks between them, but there are no such breaks in the sound itself. For example, here's a display of the sound corresponding to an eight-word phrase:

It's hard to credit the word boundary problem, at first

Here's another example, closer to home

Despite this inconvenient property of normal spoken language

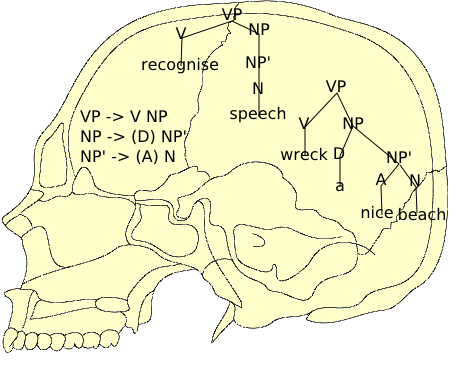

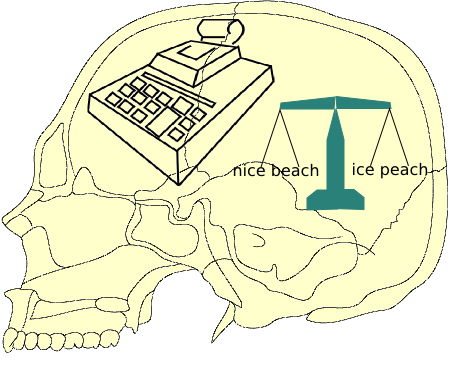

This is not just a matter of getting the word boundaries right or wrong. The next problem facing a speech recogniser, whether human or mechanical, is that the signal underdetermines the percept:

You heard:

But I said:

And there are more possibilities:

What's going on here? How do we do this?

Biology has gone down this road first and furthest

The immune system was an early and revolutionary example

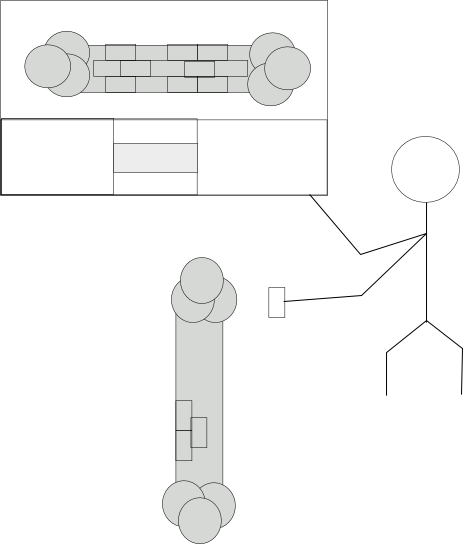

A simpler example (oversimplified here) is bone growth

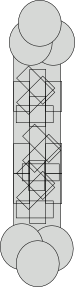

How do we get the required array of parallel lines of rectangular cells?

The naive instructional view is that there's somehow some kind of blueprint, which some agent (enter Hume's paradox) appeals to in laying down the cells:

The truth appears to be selective: cells appear in new bone with all possible orientations

But the ones that are not aligned with the main stress lines die away:

So how do we select the right path through the word lattice?

Is it on the basis of a small number of powerful things, like grammar rules and mappings from syntax trees to semantics?

Or a large number of very simple things, like word and bigram frequencies?

In practice, the probability-based approach performs much better than the rule-based approach
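To make the "large number of very simple things" option concrete, here is a minimal sketch of picking a path through a word lattice using nothing but bigram counts. Everything in it is an assumption made for illustration: the four-sentence toy corpus, the lattice itself, the function names, and the add-one smoothing. It also ignores acoustic scores entirely, which a real recogniser would combine with the language model.

```python
import math
from collections import Counter

# Toy training text, invented for illustration; a real system would use a large corpus.
TOY_CORPUS = [
    "it is hard to recognise speech",
    "we want to recognise speech",
    "they recognise speech well",
    "a nice beach is hard to find",
]

# Hypothetical word lattice: node -> list of (word, next_node). Node 4 is the end.
TOY_LATTICE = {
    0: [("recognise", 2), ("wreck", 1)],
    1: [("a", 2)],
    2: [("speech", 4), ("nice", 3)],
    3: [("beach", 4)],
}

def train_bigrams(sentences):
    """Count left-context words and bigrams, adding sentence boundary markers."""
    contexts, bigrams = Counter(), Counter()
    vocab = {"<s>", "</s>"}
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        vocab.update(words)
        contexts.update(words[:-1])
        bigrams.update(zip(words, words[1:]))
    return contexts, bigrams, len(vocab)

def log_prob(words, contexts, bigrams, vocab_size):
    """Add-one smoothed bigram log-probability of a word sequence."""
    seq = ["<s>"] + words + ["</s>"]
    return sum(
        math.log((bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size))
        for prev, word in zip(seq, seq[1:])
    )

def paths(lattice, node, end):
    """Yield every word sequence from node to end (exhaustive enumeration)."""
    if node == end:
        yield []
        return
    for word, next_node in lattice[node]:
        for rest in paths(lattice, next_node, end):
            yield [word] + rest

def best_path(lattice, start, end, model):
    """Return the path with the highest bigram log-probability."""
    return max(paths(lattice, start, end), key=lambda p: log_prob(p, *model))

model = train_bigrams(TOY_CORPUS)
best = best_path(TOY_LATTICE, 0, 4, model)
print(" ".join(best))
```

Enumerating every path is only workable for a lattice this small, since the number of paths grows exponentially with lattice length. Practical systems find the best path with dynamic programming instead.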

The publication of 6 years of digital originals of the Wall Street Journal in 1991 provided the basis for moving the Bayesian approach up the speech chain to morphology and syntax

Many other corpora have followed, not just for American English

And the Web itself now provides another huge jump in the scale of resources available

To the point where even semantics is at least to some extent on the probabilistic empiricist agenda

Whereas in the 1970s and 1980s there was real energy and optimism at the interface between computational and theoretical linguistics

While still using some of the terminology of linguistic theory

Within cognitive psychology, there is significant energy going into erecting a theoretical stance consistent with at least some of the new empiricist perspective.

But the criticism voiced 25 years ago by Herb Clark, who described cognitive psychology as "a methodology in search of a theory", remains pretty accurate.

And within computer science in general, and Artificial Intelligence in particular, the interest in "probably nearly correct" solutions, as opposed to constructively true ones, is dominant.

Just as we do well to always prefer "cock up" to "conspiracy" when seeking answers to "Why did things go wrong" questions, so the old joke about the drunk and the lamppost is often . . . illuminating with respect to "Why are they doing that" questions

The bottom line is that aggregation, correlation and description are always easier than explanation

The huge growth in the availability of data of all kinds has inevitably distorted the balance of effort -- the pickings are easy on the frontier, after all.

But the hard problems remain, and in time, as the growth curves flatten out, we'll get back to them.

But for this course, this term, we will concentrate on getting familiar with the (largely empiricist) state of the art.

Take the techniques you learned about in Inf2A and the reality of corpus data, and see how to apply the one to the other.

Explore how well both linguistic theory and computational methods stand up to the reality of language as we find it in bulk.

Ambiguity: what kinds are there? What techniques might you use to compensate?

What's edit distance? Dynamic programming?

What's the difference between a test set and a training set?

What's a POS? What is the difference between closed and open class?

What's a CF-PSG? How do you use one?

What's compositionality?
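As a refresher for the edit distance and dynamic programming question above, here is a minimal sketch of minimum edit distance computed by filling in a table. The function name and the `sub_cost` parameter are assumptions made for this illustration: a substitution costs 1 by default (Levenshtein distance), while some presentations charge 2, treating a substitution as a deletion plus an insertion.

```python
def edit_distance(source, target, sub_cost=1):
    """Minimum edit distance between two strings via dynamic programming.

    Insertions and deletions cost 1; substitutions cost sub_cost.
    """
    n, m = len(source), len(target)
    # dist[i][j] = cost of turning source[:i] into target[:j]
    dist = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dist[i][0] = i  # delete every character of source[:i]
    for j in range(1, m + 1):
        dist[0][j] = j  # insert every character of target[:j]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            same = source[i - 1] == target[j - 1]
            dist[i][j] = min(
                dist[i - 1][j] + 1,  # deletion
                dist[i][j - 1] + 1,  # insertion
                dist[i - 1][j - 1] + (0 if same else sub_cost),  # match or substitution
            )
    return dist[n][m]

print(edit_distance("kitten", "sitting"))
print(edit_distance("intention", "execution", sub_cost=2))
```

Each cell depends only on its three neighbours above and to the left, so the table is filled in time proportional to the product of the two string lengths rather than by trying every possible alignment.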

Course home page is at http://www.inf.ed.ac.uk/teaching/courses/fnlp/

Do your own work yourself

Lab sessions and assignment timings will be discussed Friday.

You really will need to have access to a copy of the text, and the 2nd edition at that.

Notices will come via the mailing list, so do register for the course ASAP even if you're not sure you will take it.