Introduction

Computer Science Large Practical

Organisational Matters

- Me: Allan Clark

- Email: a.d.clark@ed.ac.uk

- Website: http://www.inf.ed.ac.uk/teaching/courses/cslp/

- There is one lecture per week

- Fridays at 12:10-13:00

- At 7 Bristo Square - Map lecture theatre 4

- Coursework: Accounts for 100% of your mark for the course

- No required textbook

- No scheduled office hours, please email at any time

Restrictions

- CSLP is a third-year undergraduate course only available to third-year undergraduate students.

- CSLP is not available to visiting undergraduate students, or to fourth-year undergraduate students and MSc students, who have their own individual projects.

- Third-year undergraduate students should choose at most one

large practical, as allowed by their degree regulations.

- Computer Science, Software Engineering and Artificial Intelligence large practicals

- On most degrees a large practical is compulsory.

- On some degrees (typically combined Honours) you can do the System Design Project instead, or additionally.

- See the Degree Programme Tables (DPT) in the Degree Regulations and Programmes of Study (DRPS) for your degree for clarification.

The Computer Science Large Practical Requirement

- The requirement for the Computer Science Large Practical is to create a command-line application.

- The purpose of the application is to implement a

stochastic, discrete-event, discrete-state, continuous time simulator

- I'll explain these words further below

- This will simulate the progression of buses through a network of stops specified by the input

- The output will be the sequence of events that have been simulated as well as some summary statistics.

- The input and output formats are specified in the coursework handout together with several other requirements

- It is your responsibility to read the requirements carefully

Today's Lecture

- Today I will discuss:

- Context for the practical, timing and deadlines

- Motivation for the simulation of a bus network

- The simulation algorithm used

- The main requirements for the practical

- Kinds of simulators and in particular the kind that you are being asked to produce

Context

- So far most of your practicals have been small exercises

- Next year, you will undertake an honours project

- This practical represents something in between those

- It is larger and less rigidly defined than your previous course works

- It is more rigidly defined and smaller than your honours project

- The CSLP tries to prepare you for

- The System Design Project (in the second semester)

- The Individual Project (in fourth year).

Requirements

- The requirements are more realistic than most coursework

- But still a little contrived in order to allow for grading

- There is:

- a set of requirements (rather than a specification);

- a design element to the course; and

- more scope for creativity.

How much time should I spend?

- 100 hours, all in Semester 1, of which

- 8 hours lecture/demonstrating

- 92 hours practical work, of which

- 70 hours non-timetabled assessed assignments

- 22 hours private study/reading/other

How much time is that really?

- There are 13 weeks remaining in semester 1 (Weeks 2 to 14)

- 7 * 13 = 91 hours

- So you can think of it as 7 hours per week in the first semester

- This could be one hour a day including weekends

- You could work 7 hours in a single day

- for example work 9:00-17:00 with an hour for lunch

Managing your time

It is unlikely that you will want to arrange your work on your large practical as one day where you do nothing else, but one day per week all semester is the amount of work that you should do for the course.

Scheduling work

Course lecturers have been asked not to let deadlines overlap Weeks 11-14 because students are expected to be concentrating on their large practical in that time.Deadlines

The Computer Science Large Practical is split in two parts:- Part 1

- Deadline:Thursday 24th October, 2013 at 16:00

- Part 1 is zero-weighted: it is just for feedback.

- Part 2

- Deadline: Thursday 19th December, 2013 at 16:00

- Part 2 is worth 100% of the marks.

Scheduling work

- It is not necessary to keep working on the project right up to the deadline.

- For example, if you are travelling home for Christmas you might wish to submit the project early.

- In this case you need to ensure that you start the project early.

- The coursework submission is electronic so it is

possible to submit remotely.

- But you must make sure that your submission works as expected on DiCE

- This might be easier to do locally

- But see working remotely and in-beta remote graphical login

Early submission credit

- In order to motivate good project management, planning, and efficient software development, the CSLP reserves marks above 90% for work which is submitted early (specifically, one week before the deadline for Part 2).

- Work submitted less than a week before the deadline does not qualify as an early submission, and the mark for this work will be capped at 90%. Thus, the mark may be 90%, but it may not be higher than this.

- Regardless of when it is submitted, every submission is assessed in exactly the same way, but submissions which attract a mark of above 90% which were not submitted early have this mark brought down to 90%.

Early submission credit

- Question:

- Can I submit both an early submission version and a version for the end deadline and have the marks for whichever is highest?

- Answer:

- No. Before the early submission deadline you have to choose whether or not you are going to hope for a mark above 90% then, or have an extra week to accumulate more marks up to 90%. The submission marked will be the latest one made before the deadline. Hence if you submit both before and after the early submission deadline, only the last submission will be marked and it will be capped to 90%.

Extensions

- Do not ask me for an extension as I cannot grant them

- The correct place is the ITO who will pass this on to the year organiser (Vijay Nagrajan)

- Link to the policy on late coursework submission

Implementation Language

- You may choose whichever programming language you deem most suitable.

However:

- Your application should compile and run on DiCE

- Here is an obvious list of languages which should work on DiCE without any problems: C, C++, C#, Haskell, Java, Python, Objective-C, Ruby. However care should be taken with versions.

- If you wish to use something else it would probably be prudent to ask me first.

Implementation Language

- You may choose whichever programming language you deem most suitable.

However:

- Your application should compile and run on DiCE

- I am even open to installing a compiler and/or runtime on my DiCE installation but this is entirely at my discretion.

- It is up to you to choose a suitable language

- Your choice will not be judged, however if you choose poorly, this will not be reflected in more lenient marking.

- Whatever choice you make, you must live with

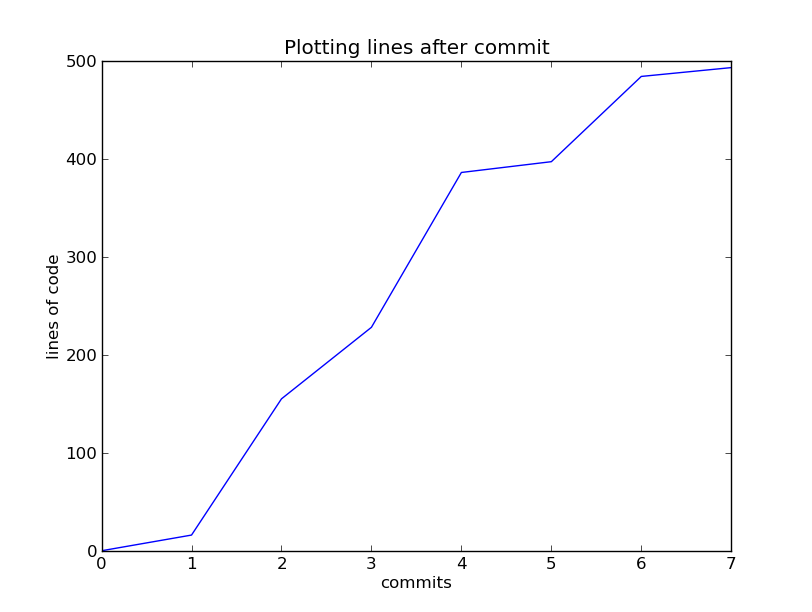

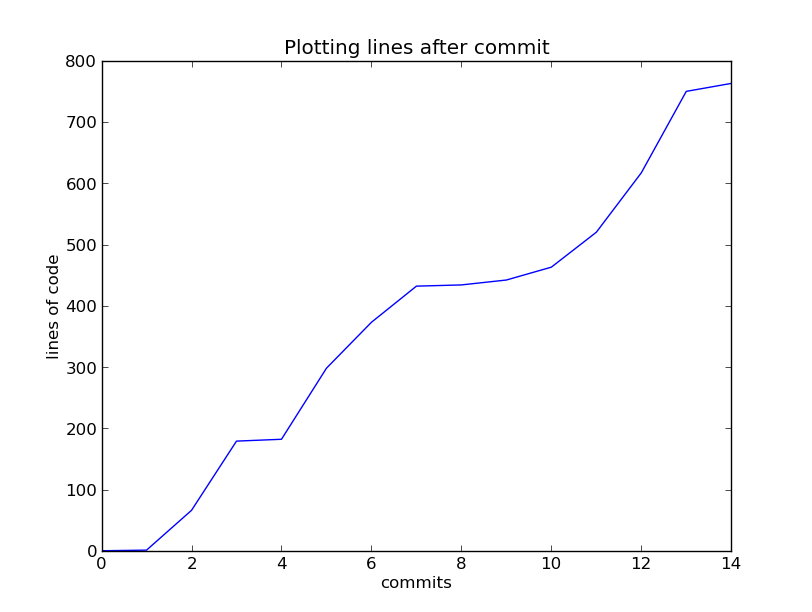

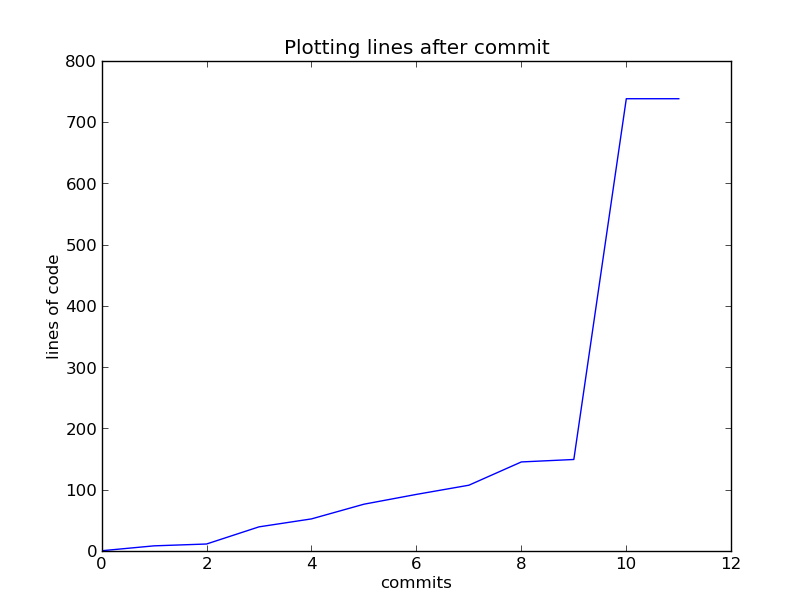

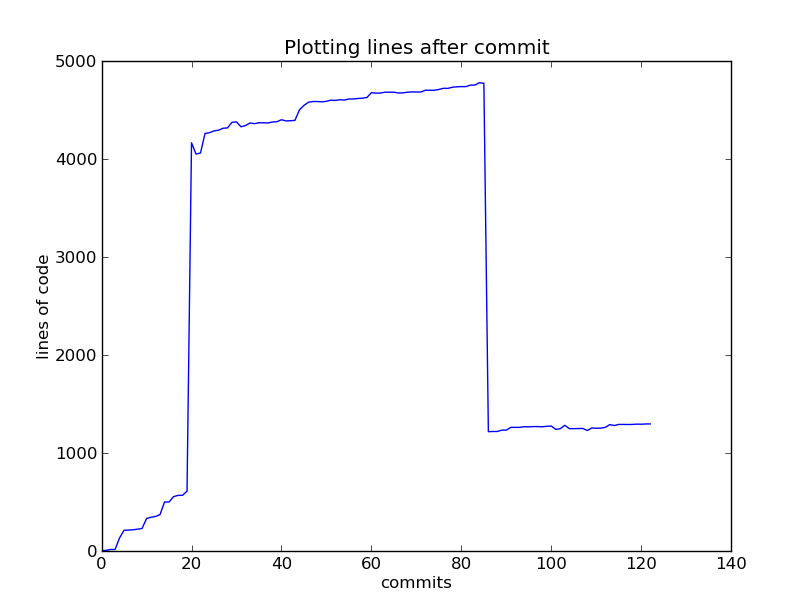

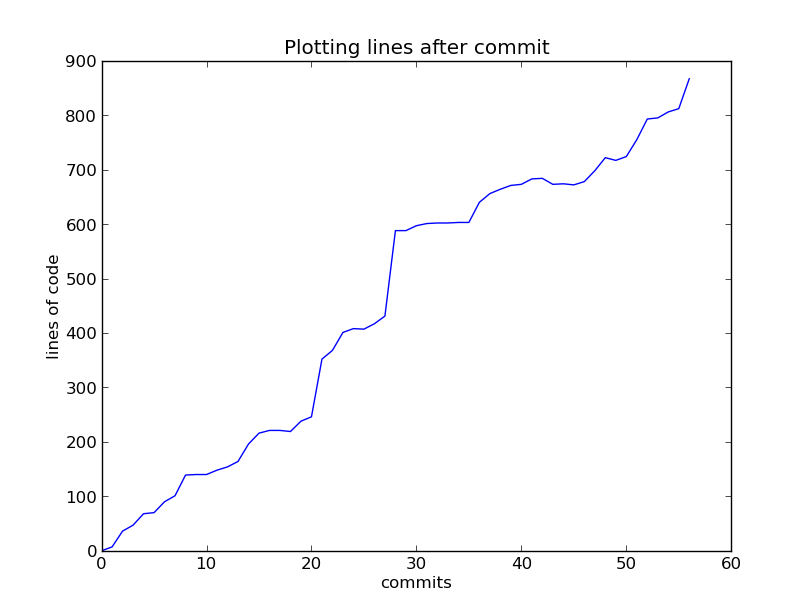

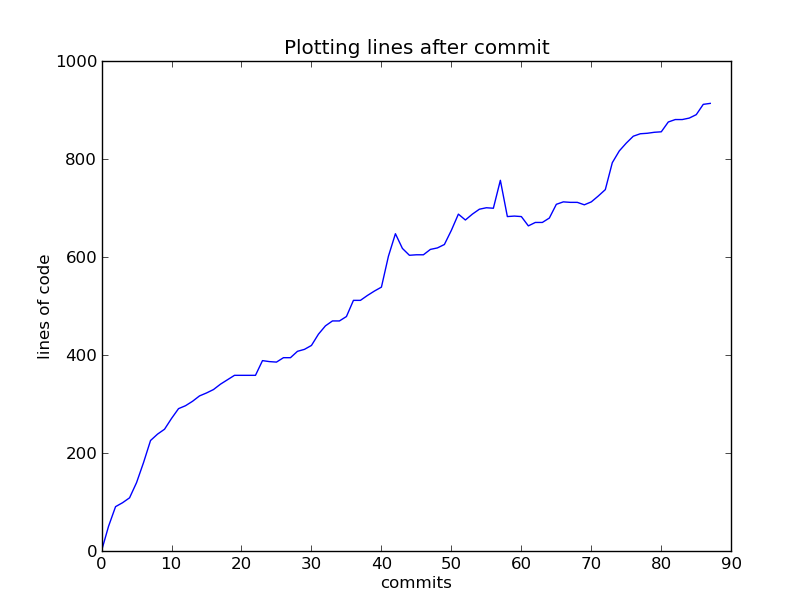

Source Code Control

- For this project source code control is mandatory

- You will have to use the

git- This is somewhat realistic

- Any project you join will likely already have some form of source code control set up which you will have to learn to use rather than any system you might already be familiar with

- See the git homepage

Source Code Control

- The practical is not looking for you to become an expert in

git - You will not need to be able to perform complicated branches, merges or rebasing

- This is, afterall, an individual practical

- What is key, is that your commits are appropriate:

- Small frequent commits of single units of work

- Clear, coherent and unique commit messages

Getting Started

Do this today.

$ mkdir simulator

$ cd simulator

$ git init

$ editor README.md

$ git add README.md

$ git commit -m "Initial commit including a README"

Code Sharing Sites

- Code sharing sites are a great resource but please refrain from using

them for this practical. This is an individual practical so code sharing

is not allowed. Even if you are not the one benefiting.

- This is a bit of a shame, but again somewhat realistic

- It is at least somewhat likely that in the future you will be unable to publicly share all of the code you produce at your place of employment.

Motivation - Simulators

- It is common in both academic and industrial contexts to author some kind of simulator

- Simulators can save time, money, effort and even lives

- Simulators allow the very low cost running of experiments that might otherwise be infeasible

- However, the catch is that unless the simulator is an appropriate model for the real system under investigation, the results may be worthless

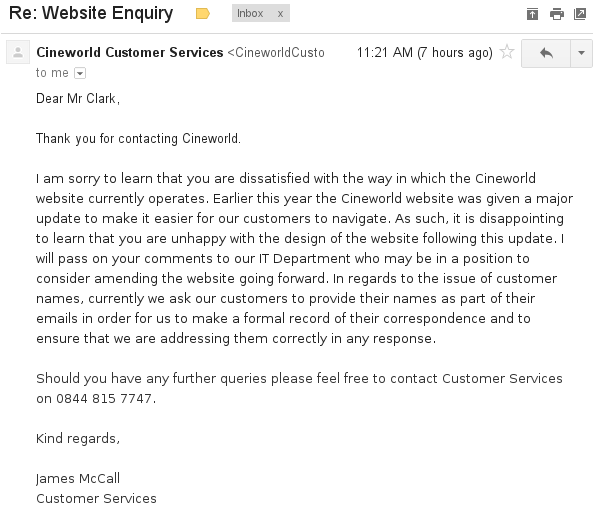

Middle-lane Hogging

A recent BBC news article on the proposed government crackdown on middle-lane hoggers

Middle-lane Hogging

- The government recently announced a ‘crackdown’ on middle-lane hoggers on motorways

- Is this a money-making scheme? Is it a publicity scheme? Is it truly a worthwhile policy?

- Difficult to know. A first step is deciding whether or not middle-lane hoggers cause significant delay and/or danger

- It's difficult to gather data, how would you know how many people are middle-lane hogging?

Middle-lane Hogging

- Even if you could count them, how could change this number?

- Even if you could change (or wait for them to change) how could keep all other conditions the same?

- With simulation it's possible to do both

- Hence with simulation this is the first step towards answering the question of how much middle-lane hoggers cost

Why Simulate Buses?

- City based transport is a huge problem in many parts of the world

- Different people wish for different outcomes:

- Passengers do not wish to wait long for a bus, they hope buses are not too full

- Bus companies do not like empty buses, and would rather run as few as possible (whilst still having the same number of passengers)

- Citizens wish for less congestion and pollution

Why Simulate Buses?

- Some of these are complementary some are contradictory

- With simulation we can try out different policy ideas and see which desires are affected

- Only recently however have we begun to be able to obtain large amounts of related data: times, queues, passengers etc.

- In this practical we will be interested in bus queues at bus stops

Why care about Bus Queues?

- In this practical we are mostly going to be focused on the queue of buses at each bus stop

- NOTE that is the queue of buses and not the queue of passengers

- The queue of passengers at a bus stop is almost irrelevant

- Provided a passenger does get on the next bus, it doesn't really matter when, and the whole queue is dequeued at more or less the same time

- However, if a bus arrives at a bus stop, only to find a previous bus currently using it (to board and disembark passengers) the new bus is stuck waiting doing nothing productive

- This is bad, for pretty much every player in the game

Why care about Bus Queues?

- One possibility is to change the charging model

- It takes time for passengers to board the bus because they all have to pay the driver

- Alternatively we could move to a pre-pay scheme, with inspectors that check people have valid tickets and dispense fines for offenders

- Or simply have an extra conductor on every bus who deals with payment

- In order to evaluate these possibilities we first need to work out how much of a problem is bus queueing

Your Simulator

- Your simulator will be a command-line application

- It will accept a text file with a description of the input network

- This text file specifies, the routes, buses, rates and other entities required to simulate a given bus network

- It should output a list of events which occur

- The strict formats for both input and output are described in the coursework handout

- In the second part you will analyse the sequence of events to obtain statistics about the input network

Simulation Algorithm

The underlying simulation algorithm is itself quite simple:

WHILE {time ≤ max time}

Choose an event and time for it based on the current state

Update the state based on the event

ENDWHILE

Simulation Algorithm

The underlying simulation algorithm is itself quite simple:

WHILE {time ≤ max time}

From the current state calculate the set of events which may occur

total rate ← the sum of the rates of those events

delay ← choose a delay based on the total rate

event ← choose probabilistically from those events

modify the state of the system based on the chosen event

time ← time + delay

ENDWHILE

Simulation Algorithm

WHILE {time ≤ max time}

...

delay ← choose a delay based on the total rate

...

ENDWHILE

- To choose a delay we sample from the exponential distribution

- I'll say more about this later, but for now it can be done by:

- −(mean) ∗ log(random(0.0, 1.0))

- Where mean is the average delay, which is the reciprocal of the total rate

Simulation Algorithm

WHILE {time ≤ max time}

...

event ← choose probabilistically from those events

...

ENDWHILE

- Similarly this means with respect to the rates of those events

- So if two events a and b are enabled at rates 2.0 and 1.0 respectively, then:

- Choose in such a way that a is twice as likely as b to be chosen

Components of the Simulation

- Input network description:

- Stops

- Routes

- Roads

- Dynamic state components:

- Buses

- Passengers

Components of the Simulation

Stops

- Stops have a queue of buses

- And a set of passengers waiting to board buses which pass through the stop

- Passengers can only board the bus at the head of the queue

Components of the Simulation

Routes

- Routes consist of a sequence of stops

- Routes are implicitly circular in that the next stop after the last stop is the first stop

Components of the Simulation

Roads

- Between any two stops which occur adjacently on at least one route there is a road

- Including between the last and first stops of each route

- Each road has an average rate at which buses can traverse it

- We simplify things by saying buses may traverse a road at the same speed regardless of how many buses are on that road

Components of the Simulation

Buses

- Each bus is associated with exactly one route, but there may be many buses associated with that route

- Each bus has a number unique among the buses which traverse the same route

- Hence a bus can be uniquely identified by its route and number

- The bus 31.4 is the fifth bus on route 31

- Each bus has an associated capacity

Components of the Simulation

Buses

- A bus is always either at a stop or on a road between stops

- At a stop it might not be at the head of the queue but behind other buses

- A bus should not leave a stop if there are passengers wishing to board or disembark from it

- A bus may leave a stop if there are waiting passengers if:

- The bus is full, and

- No passenger on board wishes to disembark

Components of the Simulation

Passengers

- Each passenger has an origin stop and a destination stop

- At any one time a passenger is either waiting at a stop or on board a particular bus

- New passengers enter the simulation at any time at a specified rate

- New passengers are randomly assigned to origin and destination stops but it must be a valid route

Components of the Simulation

Events

- Your simulator will produce a sequence of events

- A bus may arrive at a stop

- A bus may leave a stop

- A passenger may board a bus

- A passenger may disembark from a bus

- A new passenger may enter the simulation at a particular stop

Components of the Simulation

Events

- Your simulator will produce a sequence of events looking like:

Bus ‹bus› arrives at stop ‹stop› at time ‹time› Bus ‹bus› leaves stop ‹stop› at time ‹time› Passenger boards bus ‹bus› at stop ‹stop› with destination ‹stop› at time ‹time› Passenger disembarks bus ‹bus› at stop ‹stop› at time ‹time› A new passenger enters at stop ‹stop› with destination ‹stop› at time ‹time›

Components of the Simulation

Events

- In reality of course you will replace the ‹bus›, ‹stop› and ‹time› parts with real values:

Bus 1.2 leaves stop 3 at time 99.498 Bus 1.2 arrives at stop 4 at time 99.692- This is valid output in the sense that it is formatted correctly

- It may be invalid for other reasons, for example route 1 may not pass through stop 3

Part One and Part Two

- For part one, you need only have a working simulator

- For part two, there are additional requirements:

- Output of analysis, such as average number of queued buses

- Experimentation support, varying rates to see how those affect the network

- Parameter Optimisation, finding the best rates

- Validation, checking that the input is valid

- These are all specified in the coursework handout

Coursework Handout

- The above is a brief summary of the major components of the simulation

- It is no substitute for reading the coursework handout

- Available at: www.inf.ed.ac.uk/teaching/courses/cslp/coursework/cslp-2013.pdf

Definitions

- In the requirements I stated that your simulator will be a:

- stochastic,

- discrete event, discrete state,

- continuous time

- I will now define these terms

I finished the first lecture here.

Stochasticity

- Don't worry, it essentially just means “non-deterministic”

- This means that if you run your simulator more than once you might not get the same results

- This also means that you can use your simulator to obtain some statistics

- Remember, these are statistics about the model:

- You hope that the real system exhibits behaviour with similar statistics

Discrete Events, Discrete State

- It is possible to have discrete events and continuous state or vice-versa

- But is common that either both are discrete or both continuous

- This means that each event either takes place or it does not, there is no aggregation of multiple events

- This generally means that the state could be encoded as an integer

- Usually it is encoded as a set of integers, possibly coded as a different data types

- This means there is no ‘fluid-flow’

- An entity, such as a person, is in a particular place, and cannot be divided up into fractions of a person in multiple places at once

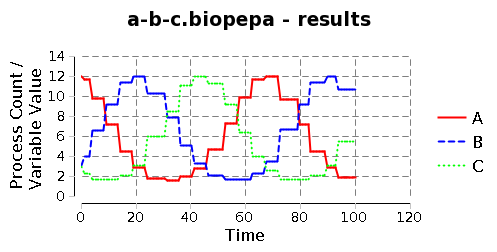

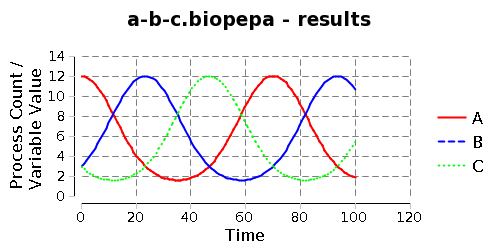

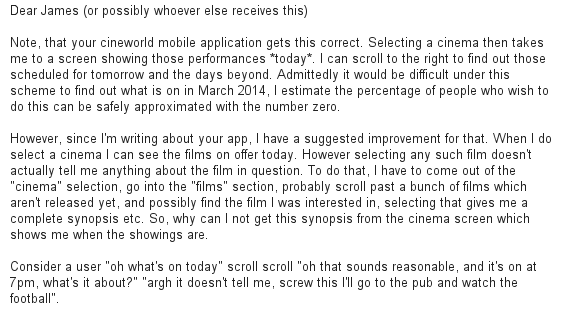

Discrete State vs Continuous State

Continuous Time

- Some simulations use a discrete number of time points:

- Days, Weeks, Months, Years

- Can also be logical time points:

- Moves in a board game

- Communications in a protocol

- These would be examples of discrete time simulators

- Your task is to write a continuous time simulator

- An event could therefore happen at any particular time

The Exponential Distribution

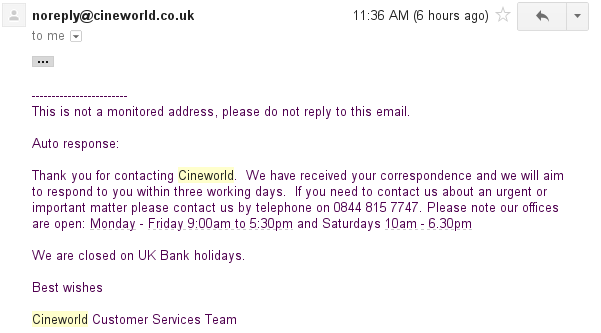

- Both graphs describe probability X at time x related to an event which occurs at a rate of λ

- The left graph depicts the probability density function

- The right graph depicts the cumulative distribution function

The Exponential Distribution

- The PDF is given by: F(x,λ) = λe-λx ∀ x > 0

- Describes the relative likelihood that an event with rate λ occurs at time x

- A time point is infinitesimally small

- The integral of this gives the probability that it occurs within two time bounds

- But you can largely ignore all this

The Exponential Distribution

- The CDF is given by: F(x,λ) = 1 - e-λx ∀ x > 0

- So if something happens at a rate of 0.5 per unit of time, then the probability that we will observe it occurring within 1 time unit is: F(1, 0.5) = 1 - e0.5*1 = 0.393

- The exponential distribution has a couple of excellent properties for the use of simulation

The Exponential Distribution

- The mean or expected value is given by the reciprocal of the rate parameter

- In plain English this means that if something occurs at rate r then we can expect to wait time units on average to see each occurrence

- If something occurs 7 times per week, you can expect to wait of a week (or a full 24 hours) on average between each occurrence

The Exponential Distribution

- Even better it is memoryless

- Formally:

Pr(X > s + t | X > s) = Pr(X > t)

s, t > 0 - Less formally: The time that we can expect to wait for the next occurrence of some (exponentially distributed) event, is unaffected by how long we have already been waiting for it

- In the 7 times a week example, if it has been 24 hours since the last occurrence, the expected additional time I have to wait is still 24 hours

- A quick note, don't confuse these two properties:

- Correct Pr(X > 100 | X > 80) = Pr(X > 20)

- Incorrect Pr(X > 100 | X > 80) = Pr(X > 100)

The Exponential Distribution

Memorylessness

- Why is this so great?

- During simulation, the simulator can choose an event based on the current rates of possible events

- Those rates are based on the current state of the simulation

- As a result of firing that event, the global state of the simulation changes

- However local states may not have changed, in our case for example there may still be two buses at stop 8

- When we choose the next event, we can simply re-calculate the rates of possible events based on the new state of the simulation

- We need not remember how long a particular event has been enabled for

Your Simulators

- Will be Discrete event simulators

- Will be Discrete state simulators

- Will be Continuous time simulators

- Will make use of the exponential distribution

Coursework Handout

- Do not forget to read the coursework handout

- Available at: www.inf.ed.ac.uk/teaching/courses/cslp/coursework/cslp-2013.pdf

Any Questions?

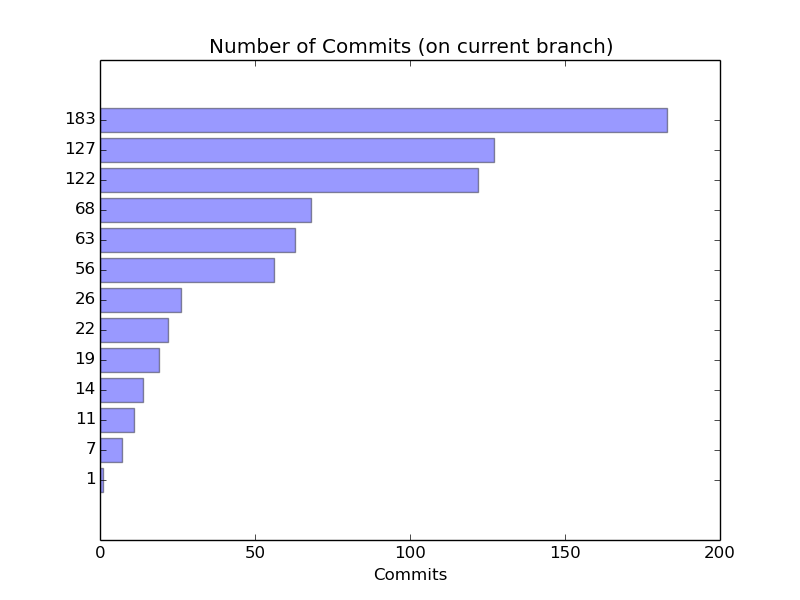

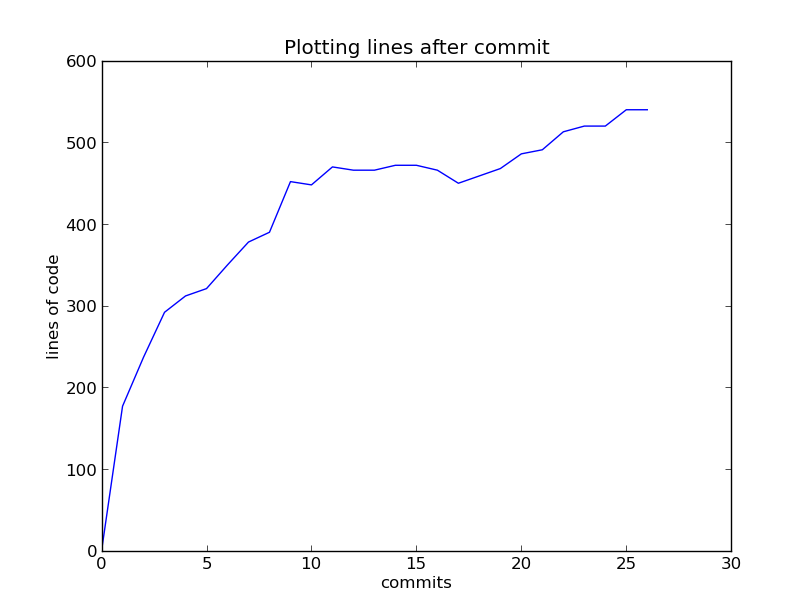

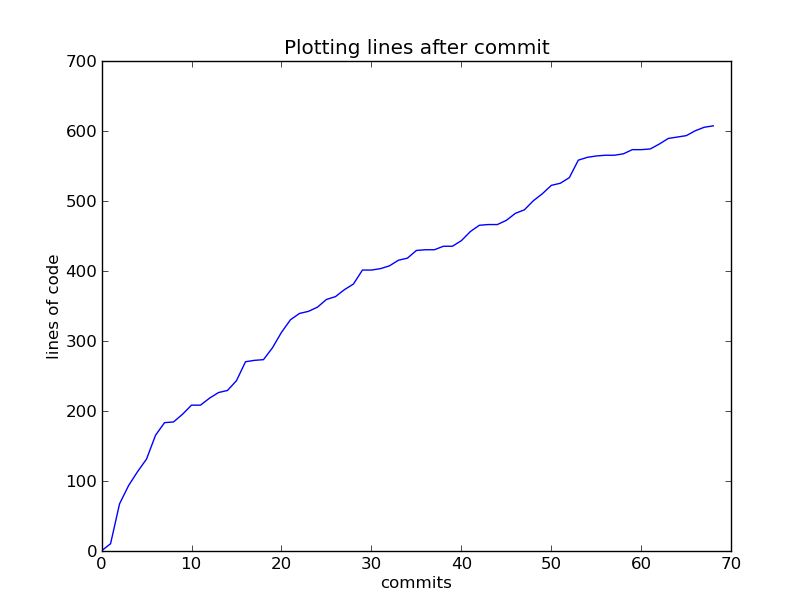

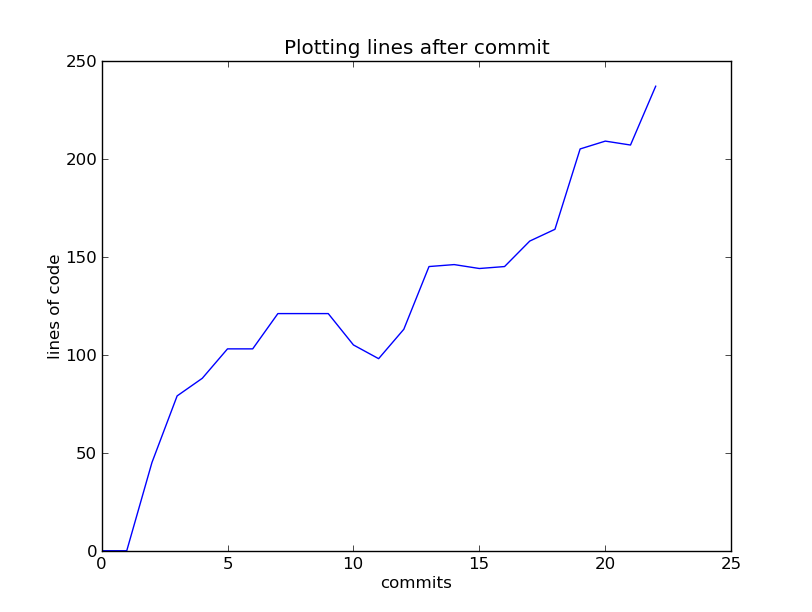

Source Code Control

Computer Science Large Practical

Quick Introduction to SCC

- Source Code Control or Version Control Software is used for two main

purposes:

- To record a history of the changes to the source code that have lead to the current version

- To allow multiple developers to develop the same code base concurrently and merge their changes

- Since this is an individual practical we will concentrate on the first of these two

A common error

/*

* 12/26/93 (seiwald) - allow NOTIME targets to be expanded via $(<), $(>)

* 01/04/94 (seiwald) - print all targets, bounded, when tracing commands

* 12/20/94 (seiwald) - NOTIME renamed NOTFILE.

* 12/17/02 (seiwald) - new copysettings() to protect target-specific vars

* 01/03/03 (seiwald) - T_FATE_NEWER once again gets set with missing parent

* 01/14/03 (seiwald) - fix includes fix with new internal includes TARGET

* 04/04/03 (seiwald) - fix INTERNAL node binding to avoid T_BIND_PARENTS

*/

Basic Source Code Control

As I stated previously the first thing to do is to initialise your repository

$ mkdir simulator

$ cd simulator

$ git init

$ editor README.md

$ git add README.md

$ git commit -m "Initial commit including a README"

The main point

- After each portion of work, commit to the repository what you have done

- Everything you have done since your last commit, is not recorded

- You can see what has changed since your last commit, with the status and diff commands:

$ git status

# On branch master

nothing to commit (working directory clean)

Staging and Committing

- When you commit, you do not have to record all of your recent changes. Only changes which have been staged will be recorded

- You stage those changes with the

git addcommand. - Here I have modified a file but not staged it

$ editor README.md

$ git status

# On branch master

# Changed but not updated:

# (use "git add ‹file›..." to update what will be committed)

# (use "git checkout -- ‹file›..." to discard changes in working directory)

#

# modified: README.md

#

no changes added to commit (use "git add" and/or "git commit -a")

Unrecorded and Unstaged Changes

- A

git diffat this point will tell me the changes I have made that have not been committed or staged

$ git diff

diff --git a/README.md b/README.md

index 9039fda..eb8a1a2 100644

--- a/README.md

+++ b/README.md

@@ -1 +1,2 @@

This is a stochastic simulator.

+It is a discrete event/state, continuous time simulator.

To Add is to Stage

- If I stage that modification and then ask for the status I will be told that there are staged changes waiting to be committed

- To stage the changes in a file use

git add

$ git add README.md

$ git status

# On branch master

# Changes to be committed:

# (use "git reset HEAD ‹file›..." to unstage)

#

# modified: README.md

#

Viewing Staged Changes

- At this point

git diffis empty because there are no changes that are not either committed or staged - Adding

--stagedwill show differences which have been staged but not committed

$ git diff # outputs nothing

$ git diff --staged

diff --git a/README.md b/README.md

index 9039fda..eb8a1a2 100644

--- a/README.md

+++ b/README.md

@@ -1 +1,2 @@

This is a stochastic simulator.

+It is a discrete event/state, continuous time simulator.

New Files

- Creating a new file causes git to notice there is a file which is not yet tracked by the repository

- At this point it is treated equivalently to an unstaged/uncommitted change

$ editor mycode.mylang

$ git status

# On branch master

# Changes to be committed:

# (use "git reset HEAD ‹file›..." to unstage)

#

# modified: README.md

#

# Untracked files:

# (use "git add ‹file›..." to include in what will be committed)

#

# mycode.mylang

New Files

- Slightly tricky,

git addis also used to tell git to start tracking a new file - Once done, the creation is treated exactly as if you were modifying an existing file

- The addition of the file is now treated as a staged but uncommitted change

$ git add mycode.mylang

# On branch master

# Changes to be committed:

# (use "git reset HEAD ‹file›..." to unstage)

#

# modified: README.md

# new file: mycode.mylang

#

Committing

- Once you have staged all the changes you wish to record,

use

git committo record them - Give a useful message to the commit

$ git commit -m "Added more to the readme and started the implementation"

[master a3a0ed9] Added more to the readme and started the implementation

2 files changed, 2 insertions(+), 0 deletions(-)

create mode 100644 mycode.mylang

A Clean Repository Feels Good

- After a commit, you can take the status, in this case there are no changes

- In general though there might be some if you did not stage all of your changes

$ git status

# On branch master

nothing to commit (working directory clean)

Finally git log

- The

git logcommand lists all your commits and their messages

$ git log

commit a3a0ed90bc90e601aca8cc9736827fdd05c97f8d

Author: Allan Clark ‹author email›

Date: Wed Sep 25 10:26:57 2013 +0100

Added more to the readme and started the implementation

commit 22de604267645e0485afa7202dd601d7c64c857c

Author: Allan Clark ‹author email›

Date: Wed Sep 25 10:17:45 2013 +0100

Initial commit

More on the Web

- Clearly this was a very short introduction

- More can be found at the git book online at:

- And countless other websites

The Point

- Don't forget that the point of all this is to record a history of changes to the code

- This allows you to revert to previous versions in order to locate when a bug was introduced

- This can help greatly in locating the source of a bug

- This history of changes also helps other people (including your future self) understand why the code is the way it is

- This is very helpful when you wish to change something without breaking anything

Debugging Help

- Suppose you write some new test input, try it out, and find that it causes your application to crash

do{ revert to previous commit/version

re-compile and re-run your new test

flag = does the program still crash

} while(flag)

Git Blame

Not relevant for this individual project, but when it comes time to do your System Design Project, keep in mind git blame:

$ git blame sbsi_numeric_devel/Template/main_Model.c

352c44 (ntsorman 2010-07-08 14:03:43 +0000 5) #ifndef NO_UCF

352c44 (ntsorman 2010-07-08 14:03:43 +0000 6) #include

352c44 (ntsorman 2010-07-08 14:03:43 +0000 7) #endif

352c44 (ntsorman 2010-07-08 14:03:43 +0000 8)

815381 (allanderek 2011-08-30 13:24:45 +0000 9) #include "MainOptimiseTemplate"

352c44 (ntsorman 2010-07-08 14:03:43 +0000 10)

Committing

- When and what to commit?

- The easy answer is it should be “one unit of work”

- Defining one unit of work is difficult but if you have to use the word ‘and’ to describe it, there is a good chance you have more than one commit there

- Note that my previous example was therefore bad

$ git commit -m "Added more to the readme and started the implementation" [master a3a0ed9] Added more to the readme and started the implementation 2 files changed, 2 insertions(+), 0 deletions(-) create mode 100644 mycode.mylang - It is bad because it is doing two separate things, indicated by the use of the word ‘and’, not because it updates more than one file

Committing

- Your commit should be improving the project. It should be improving

one portion of it:

- The code

- The documentation

- The tests

- And it should be improving that one part in one way:

- Improving functionality

- Improving readability

- Improving maintainability

- Improving performance

XKCD Signal

- XKCD is a popular web comic

- It has an associated IRC channel

- As with many large communities it faced a problem of a large noise to signal ratio

- A large part of the problem is that frequently asked questions are not read and hence re-asked

- Commmon debates are hence frequently re-hashed

XKCD Signal

- In a blog post the xkcd creator outlines a proposal to deal with this

- It has been implemented as the ROBOT9000 bot-moderator

- The rule it enforces is a simple one:

- ”You are not allowed to repeat anything anyone has already said”

XKCD Signal

- You can read about the specifics here

- But some obvious questions arise:

- Question: Isn't this limiting?

- Answer: You're underestimating the versatility of natural language and the sheer number of possible sentences

XKCD Signal

- You can read about the specifics here

- But some obvious questions arise:

- Question: Can't I just game it by tagging extra nonsense on?

- Answer: Yes, but the focus is on dealing with unwitting noise generators. Those who are actively attempting to destroy the conversation can be otherwise banned.

XKCD Signal

- You can read about the specifics here

- But some obvious questions arise:

- Question:What happens if I just want to answer someone with a yes/no?

- Answer: Expand slightly e.g. “I agree, ... because ...”

What has this got to do with SCC?

- A persistent problem is the lack of meaningful commit messages

- “fixed a bug”

- “More work”

- “Fixes.”

- “Updates”

- “big commit of all outstanding changes”

- “commit everything”

- “commit”

What has this got to do with SCC?

- I hope to give some good advice on this writing good commit messages

- But it is notoriously difficult to enforce

- One could easily enforce a minimum length, but this would only solve part of the problem and in some cases would not actually be appropriate

- A sneakier idea; copy the “Do not repeat” rule from XKCD-Signal

- “Do not use a commit message which has been used previously”

Non-repeating Commit Messages

- When I say “used previously” do I mean in the same repository?

- Beginner level: yes, I mean in the same repository

- Advanced level: no, I mean in any repository that exists for any project

- It should not really matter, it is hard to accept that a commit message used for an entirely different project is appropriate for your one

Non-repeating Commit Messages

- Said in a whingey voice: “But I really did just fix a typo in the README”

- You can probably expand on that a little

- However, of course some violations of this rule will be worse than others

- Similarly just because you pass this rule, does not mean you have a useful commit message

- Gaming this by adding superfluous characters is definitely wrong

Non-repeating Commit Messages

- In order to check the advanced level I will need a corpus of repositories

- I might use github for this. You certainly should not be repeating a commit message used for an entirely different repository

- But I will at least check your commit messages against all other repositories submitted for this practical

- Bear in mind, you're all implementing the same requirements

More Advice

- The commit message should be a summary of the actual ‘diff’

- Part of the point of the commit message is so that a reader can avoid looking at the actual ‘diff’

- The reader is looking in the history for a reason. Most likely they are trying to find the source of an issue. Help them.

More Advice

- You should at least make clear the purpose of the commit

- Is it?

- A bug fix

- A feature addition

- A conflict resolution between two branches

- Style enhancements

- On what scale? A single fixed spelling error, or reformating all of the code?

- A refactor of some portion of the code

- Addition of a test

- Updating of documentation

- Optimisation

More Advice

- Even once the purpose is described, try to explain the reason for that purpose

- Some times this will be obvious, for example if the purpose of the commit is to fix a bug

- Even then, you may wish to explain why that is fixable now rather than earlier

- Others, really require an associated why. In particular a refactor.

Summary of the Main Advice

- Small frequent commits. Each commit should do one thing

- Ask yourself is it plausible that you might wish to revert some of the

changes in a commit but not all of them?

- If so, you almost certainly have more than one commit's worth of work

- A person looking through your history is most likely looking for the source of a bug, or trying to figure out why a certain bit of code is the way it is. In either case help them.

- Some people branch for any new unit of work. You should at least branch if you start doing two things at once

Micro Commits

- It is possible to commit too little a portion of work

- But for this practical we will ignore that possibility (unless you're clearly gaming the system)

- Just a note: small style enhancements are usually not too small

- “I just fixed a small typo in a comment, no one could possibly wish to revert to

the code before I fixed the typo”

- Probably not, but what are you about to do?

- Someone may well wish to revert to the code immediately after you fixed the typo

Micro Commits

- If you commit code such that the “build is broken”

it is certainly not an appropriate commit

- If the code fails to compile, or has a syntax error (for dynamic languages)

- If this is the case you are likely committing too little

- Though this could also be caused by over-shooting an appropriate commit

- In other words you have 1 and a half commits worth of work

- Or 2 and a half, or X plus 1/y commits worth of work

Branching

Branching

- This occurs in software development frequently

- In particular, you aim to add a new feature only to discover that the supporting code does not support your enhancement

- Hence you need to first improve the supporting code, which may itself depend on supporting code which may itself require modification

- Branching, is the software solution to this problem that most other projects do not have available to them

- Because it is pretty easy to copy the current state of a project and work on the copy and then merge back in the results if the work is successful

Branching - The Basic Idea

When commencing a unit of work:- Begin a branch, this logically copies the current state of the project

- The original branch might be called ‘master’ and the new branch ‘feature’

- Complete your work on the ‘feature’ branch

- When you are happy merge the results back into the ‘master’ branch

Branching - First Reason

- Mid-way through, should you discover that your new feature is ill-conceived,

- or, your approach is unsuitable,

- You can simply revert back to the master branch and try again

- Of course you can revert commits anyway, but this means you're not entirely deleting the failed attempt

- You can also concurrently work on several branches and only throw away the changes you do not want to keep

Branching - Second Reason

Should you discover that there is some other enhancement required before your proposed enhancement can be delivered:- Create a new branch (let's say ‘sub-feature’) from ‘master’

- This new branch does not contain any of the work you have done on ‘feature’

- Complete your requirements on ‘sub-feature’

- Once you are happy, merge those results with ‘master’

- You can now rebase the ‘feature’ branch which essentially pretends that you created it from ‘master’ after the work done on ‘sub-feature’ was merged

Branching

- It is possible to do these steps retrospectively

- But it is easier to stay organised

- One approach is to have a newly named branch for each feature

- This has the advantage that multiple features can be worked upon concurrently

- Usually each feature branch is deleted as soon as it is merged back into ‘master’

- A more lightweight solution is to develop everything on a branch named ‘dev’

- After each commit, merge it back to ‘master’ you then always have a way of creating a new branch from the previous commit

With Regards to Grading

- Advice about branch and rebasing etc. is worthwhile and may help you

- However, I won't be specifically testing you on it

- The main thing I wish to see is appropriate commits, both the work done in a commit and the commit message

- These can be retroactively “fixed up”

- There is no penalty for this. Though I advise that you attempt to render it unnecessary by keeping organised

External Git Advice

- There are literally millions of web pages offering git support and advice

- Go forth and explore

Any Questions?

Languages

Computer Science Large Practical

Language Choice

- I stated that you were free to choose which ever language you wish

- For anyone who has not yet started this lecture may help you decide

- For those of you who have, it probably is not too late to switch

- In any case it won't do you any harm to justify your choice and/or utilise your choice appropriately

Language Choice

Languages come in many varieties, here are some of the distinctions made:- Compiled vs Interpreted

- Strongly typed vs Weakly typed

- Statically type vs Dynamically typed

- Functional vs Imperative

- Object Oriented vs Classless

- Lazy vs Eager

- Managed vs Unmanaged

For the most part these are independent of each other giving us 27 (128) possibilities

Language Choice

- Before I start though, don't forget

- Despite being labelled large, this is a short term project

- As such, it's okay to choose language X because:

- “I know X better than any other language”

Compiled vs Interpreted

- Many languages will claim to be either a “compiled language” or an “interpreted language”

- The distinction is intended to be simple:

- Either the source code is translated into machine code and then run or:

- An interpreter reads the source code and executes each line of code dynamically

Advantages of Interpreters

- An interpreter is a less complicated piece of machinery to implement than is a compiler

- Interpreters are generally more portable than compilers are re-targetable

- An interpreter also works well as a debugger

Advantages of Compilers

- The interpreter need not be installed on users' machines

- The generated machine code is generally less expensive to run than is interpreting the original source code

- Significant and complicated transformations can be implemented in the

compiler, so even if the above were not true, compiled code should

still be faster

- This is because it represents code which has been automatically optimised

Bytecode

- Many language implementations therefore implement something of a compromise

- The language is compiled to a portable bytecode

- This bytecode is then interpreted on the user machines

- Even this compromise solution is further modified with the use of Just In Time compilers

- This is now so common that the distinction between compiled and interpreted languages is debatable

|

|

Small Rebuttal

- “The compiler can perform expensive automatic optimisations that the interpreter cannot”

- However, one might suggest that such expensive optimisations can be performed at the source code level, hence the interpreter can still benefit from them

- But, whilst some transformations can be performed at the source code level, not all can

- Source to source optimisations are not common. Likely because if efficiency is a large factor, then a compiler is used

Compiled/Interpreted Language?

- There is not really any such thing as a “compiled language” or an “interpreted language”

- There are compiler or interpreter implementations

- A language may have one particularly official implementation

- Interpreters are nearly always implemented via some kind of bytecode

- So we only really have compiler implementations, it is just a question of what that compiler targets, physical machines or virtual bytecode machines

Compiled/Interpreted Implementations

- Ocaml has

ocamlbyteandocamlopt - Java is generally compiled to the JVM, but implementations such as gcc-java exist

- C# and some other languages now target the CLR runtime

- Python is generally interpreted but Cython exists (an optimising static compiler)

Compiled vs Interpreted

Conclusion

- The distinction between compiled and interpreted is one of implementation not languages

- However, some language features lend themselves to one more easily than the other

- But, increased runtime sophistication has meant that the line between compiled and interpreted has become increasingly blurred

- Your language choice should probably not focus too heavily on whether the official language implementation is a compiler or an interpreter

Type Systems

- Languages involve expressions which evaluate to values

- It is possible to give a type to those values

- We can then check that operations use values of an appropriate type

- For example we may check that we are not trying to add

a string to an integer:

3 + "hello" - The types may also determine what the operation is:

- Integer addition:

3 + 2 - Floating point addition:

3.0 + 2.0

- Integer addition:

Type Systems

- Some type systems also give types to statements

- For example some type systems determine what exceptions may be raised by a given command (which may be a sequence of commands)

- Some such type systems oblige the user to declare these exceptions

- For our purposes we will concentrate on the typing of expressions/values

Strongly typed vs Weakly typed

- This is often confused as a distinction between statically and dynamically typed languages but this distinction is quite separate

- One can have static-strong, static-weak, dynamic-strong, dynamic-weak

Strongly typed vs Weakly typed

- Strongly: Objects of the wrong, or incompatible types cause an

error:

3 + "5" = error, as seen in C++, Java, Python, Ocaml

- Weakly: Objects of the wrong, or incompatible types are converted:

3 + "5" = "35"in Javascript3 + "5" = 8in PHP, Perl5, Tcl

Advantages of Strong Typing

- When something goes wrong, the error is produced as soon as it is discovered

- This makes it easier to investigate the source of the error

- Additionally, you are less likely to calculate incorrect results

- Often, incorrect results are worse than no results

Advantages of Weak Typing

Uhm?

Advantages of Weak Typing

- Occasionally completing a computation and obtaining a result is better than obtaining no result

- Even if the result you obtain is wrong

- Displaying a web page wrongly is generally better than not displaying it at all

- You can implement this in either a strongly or weakly typed language but it is easier in a weakly typed one

Strong vs Weak Typing

Conclusion

- You're writing a simulator, do you think that any result, no matter how incorrect, is better than no result?

- Most of the advice I will give you here is of the annoyingly non-committal variety

- In this case though, unless there are rather compelling reasons to decide otherwise: use a strongly typed programming language

- But do not confuse weak typing with other type system distinctions, such as nominative, structural, duck typing

Statically typed vs Dynamically typed

- A statically typed language specifies that the typing of expressions should be done before the program is run

- A dynamically typed language specifies that the typing of values should be done whilst the program is run

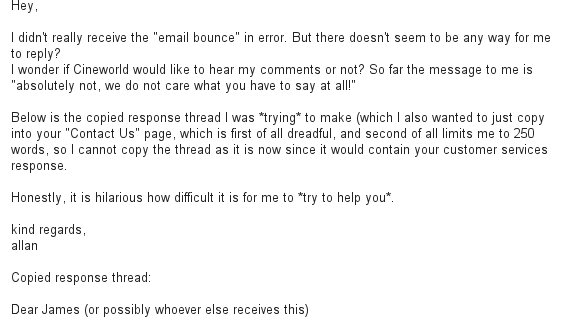

Statically typed vs Dynamically typed

Source: TIOBE language index

Statically typed vs Dynamically typed

- One reason to type expressions is to aid compilation

- Recall the typing of the operands to an addition operator meant that we could determine what kind of addition is required

- We might also need to know the size of the computed value so that we know where it might be stored

- Obviously, if the purpose of the types is to aid compilation, the type checking will have to be done statically

- More importantly the typing of expressions and values is done to avoid the computation of incorrect results

Advantages of Static Typing

- Type errors are caught before you attempt to run the program

- This means for example that type errors should not occur mid-run on a user's machine

- Even during development, perhaps you have a program that:

- takes seconds to compile,

- minutes/hours to run

- and a type error in the final printing of the result

- Using static types you will be alerted to the type error after the compile

- Using dynamic types you will be alerted at the end of a first run

Advantages of Static Typing

- You may be releasing a library, which isn't “run”

- Of course you should have a test suite with 100% code coverage

- That does not always mean the tests are particularly useful

- What you should have and what you do have are not always the same

- Static typing gives you some kind of guarantee for “free”

Advantages of Dynamic Typing

- Static type checking is necessarily conservative

- This means it will reject some programs that ultimately would not, when run, have resulted in a type error

- During development you can avoid type checking code you know will not be run, this is a subtle point

Example of Subtle Point

Suppose you have a method to create some data type:

void create_character(int initial_health){ ... }

void create_character(int initial_health, Gender gender){ ... }

Example of Subtle Point

Unfortunately calls to this method are spread throughout your code

void restart_game (...){

... create_character(100); ... }

void respawn(...){

... create_character(80); ... }

void duplicate_cheat(...){

... create_character(100); ... }

Example of Subtle Point

With a static compiler you will have no choice but to update each call anyway

void restart_game (...){

... create_character(100, character.current_gender); ... }

void respawn(...){

... create_character(80, character.current_gender); ... }

void duplicate_cheat(...){

... create_character(100, character.current_gender); ... }

Example of Subtle Point

Worse, you might not yet have reasonable values so you just do this:

void restart_game (...){

... create_character(100, None); ... }

void respawn(...){

... create_character(80, None); ... }

void duplicate_cheat(...){

... create_character(100, None); ... }

Example of Subtle Point

void restart_game (...){

... create_character(100, None); ... }

void respawn(...){

... create_character(80, None); ... }

void duplicate_cheat(...){

... create_character(100, None); ... }

create_character

Example of Subtle Point

Some languages have optional parameters or default arguments:

void create_character(int initial_health, Gender gender=Female){ ... }

Two Competing Forces

- When programmers learn static type systems it often feels like you are getting more program correctness for free

- It seems as though it is not quite for free, and that the static type system does hamper productivity in the short term

- It also seems likely that static type systems can save on some kinds of work in the future

- The question is, does short term loss in productivity repay for itself with long term increase in productivity?

Philosophy of Typing

- Just as I suggested that a language can be neither a compiled nor interpreted language it is also something of an implementation issue as to when typing is performed

- However, there is generally a type system attached to each language

- Some type systems are very difficult or even impossible to fully check statically

- Some type systems deliberately ensure that it is possible to statically type check the language

Soft Typing

- Soft typing is something of a compromise between static and dynamic typing

- The idea of soft typing is to statically type as much of the program as is possible

- Where the type system cannot determine that an expression or operation will never cause a type error, it inserts a run-time check

- In this sense a dynamic type system is an extreme example of a soft-typing system that is not very good at determining any expressions which will never produce a type error

Soft Typing

- In a sense many of our supposedly static type systems are in fact soft type systems which need few checks

- Commonly, array indexes are not statically checked to be within the bounds of the size of the array

- Instead a dynamic run-time check is inserted for this purpose

- Additionally cast operations are generally checked at runtime as they cannot be statically checked to be valid

Static Analysers

- When a type is not used by the compiler, then ultimately the static type checker is simply a static analyser

- We can deploy many static analysers

- We can also, omit to run any or all of them during a development run

- Personally, I'm a big fan of static analysers

- Static type systems are no exception, but I think they should be optional

Statically Typed vs Dynamically Typed

Conclusion

- The distinction between statically typed and dynamically typed is in theory one of implementation, but in practice one of language

- The distinction though is softer than some may suggest

- It is more of a gradient than a dichotomy

- For this project, either kind of type system will be fine

- But, whichever choice you make, I recommend making use of additional static analysers

- And, whichever choice you make, you should write some tests

Functional vs Imperative

- This distinction is somewhat disputed

- The main idea is that a functional language computes values of expressions, but does not modify state

- An imperative language is simply a non-functional language, that is, one which allows/encourages the programmer to directly modify state

Functional vs Imperative

- It turns out, that a lot of programs involve a lot of functional computation, with a very small amount of state modification

- Hence, the term functional is often relaxed to include those languages that discourage state modification

- More importantly, such languages, encourage

declarative code.

- That is, code which does not modify the state

Functional Programming

- I tend to describe any language with proper support and syntax for nested, higher-order functions to be functional

- A higher-order function is simply one that:

- Takes one or more functions as parameters

- Returns a function as a result

- In general treating functions as any other kind of value is known as providing first class functions

- If the language also allows nested functions which can access the scope of containing functions, the implementation requires function closures

- The provision of nested, higher-order functions usually encourages declarative programming

Functional Programming

- Languages which entirely forbid state updates I describe as strictly functional

- Even this is a little confusing because some people describe eager evaluation as strict evaluation

- So I might also say a pure functional language or simply a pure language

Functional Advantages

- The key advantage of a functional programming language is the hugely pretentious phrase “referential transparency”

- I'm not sure, but I suspect this phrase is one reason functional programming languages are not more widely adopted

- It means, that an expression evaluates to the same result regardless of the time, or state, in which it is evaluated

- In particular invoking a function:

some_fun(args)with the same argumentsargswill always produce the same result

Functional Advantages

- This makes testing and/or reasoning about the correctness of code much easier

- In theory, it means code is more re-usable

- This is debatable, and not, to my knowledge, demonstrated (either way) satisfactorily

- But it's certainly plausible

Functional Advantages

In theory, this additionally allows for some interesting compiler optimisations, consider the following double transformation over a list of items:

some_list = map f (map g original_list)

Functional Advantages

some_list = map f (map g original_list)

fg = f . g

some_list = map fg original_list

f . g is the composing of two functions together. This is

faster because it only loops over the list once.

Functional Advantages

some_list = map f (map g original_list)

fg = f . g

some_list = map fg original_list

f does not modify state which g

references or vice-versa. In a functional language this is both, more likely and

easier to automatically check.

Functional Advantages

fg = f . g

some_list = map fg original_list

- Similarly if you have multiple processors, you could begin the second map operation in parallel as soon as the first transforms the first item.

- Again, only if you can determine that there are no state dependencies.

- In general parallel programming can in theory be advanced by limiting state updates

Imperative Advantages

- With no state modifications all information required by any function must be passed in as an argument

- This can arguably make the code more complicated

- Worse, it can require a large refactoring in order to make a relatively simple change

Imperative Advantages

- However, recall that my definition of a functional programming language did allow for state modifications.

- It only required nested, higher order functions

- It's hard to argue that not providing these is an advantage to the programmer

- One could argue that the implementation (of the language) is simpler

- It is debatable, but one can certainly argue that the implementation of nested higer-order functions, requires a performance degradation

- Functions are more heavyweight and hence more expensive to invoke

Functional vs Imperative

Conclusion

- You could certainly use either a functional or an imperative language for this practical

- You're probably best off with whichever you prefer

Object Oriented vs Classless

- Given my glowing recommendation for higher-order functions why are they not more commonly used?

- Classes, or objects, allow for a similar abstraction

- An object is really a collection of state together with operations over that state

Typical Class Definition

class ClassName (ParentClass){

classmember_1 = 0;

classmember_2 = "hello";

void class_method_1(int i){

self.classmember += i;

}

void class_method_2(String suffix){

print_to_screen(self.class_method_2);

print_to_screen(suffix);

}

}

Object Oriented Languages Popularity

| Category | Ratings Sep 2013 | Delta Sep 2012 |

|---|---|---|

| Object-Oriented Languages | 56.0% | -1.1% |

| Procedural Languages | 37.3% | -0.9% |

| Functional Languages | 3.8% | +0.6% |

| Logical Languages | 3.0% | +1.3% |

Advantages of Object-Oriented

- Surprisingly debatable

- Most people agree that there is some value in object-oriented programming

- But when asked to give concrete advantages, most offer:

- Vague perceived benefits, with no logic connecting to OOP:

- Advances reuse

- Better models the real world

- Clear benefits but which are not unique to OOP:

- Polymorphism (fancy word for a specific kind of generality)

- Encapsulation (fancy word for hiding/abstraction)

- Vague perceived benefits, with no logic connecting to OOP:

Advantages of Classless

- No one really argues that the provision of classes is inherently destructive

- In a similar way to higher-order functions, having the ability to utilise classes does not do any harm if you never use them

- However, once the temptation is there, it's easy to go class crazy

- But such arguments are not arguments against the use of an object-oriented language, so much as an argument for careful use of classes

Object Oriented vs Classless

Conclusion

- By all means choose an object-oriented language

- There is little reason not to, but pure languages often do not

have a notion of an object

- This is for good reason and should not put you off choosing a pure language

- If you do choose an object-oriented language, use your classes with care

- Classes are just one way of organising source code.

- There are others which are just as effective

- Using an OOP language will not magically organise your source code for you

Lazy vs Eager

Lazy vs Eager

- Suppose we attempt to enforce the policy: everyone leaves the seat down

- What if two men (or the same man twice) use the toilet in succession

- This means the first man unnecessarily put the seat down only for the second man to put it back up again

Lazy vs Eager

- Suppose we attempt to enforce the policy: everyone leaves the seat up

- Now if two women (or the same woman twice) use the toilet in succession

- This means the first woman unnecessarily put the seat up only for the second woman to put it back down again

- “hugely” wasteful

Lazy vs Eager

- A more efficient strategy: leave the seat as it is

- If two people of the same gender visit successively no unnecessary work is done

- Whenever there is a gender switch the second person must change the state of the seat

- But that would otherwise have been done by the previous visitor anyway

Lazy vs Eager

- The first two inefficient strategies are examples of eager evaluation

- The final more efficient strategy is an example of lazy evaluation

- Essentially lazy evaluation is the policy of only ever computing a value when it is required

Lazy vs Eager

- Remaining in the household, this is equivalent to only washing dirty dishes when you are about to use them

- In this case, you do the same amount of washing up, it is only a question of when

- Unless you have some dish that is used exactly once

- But note, the lazy policy requires more space next to the sink

Lazy vs Eager

- Laziness is awesome

- But, there are some significant caveats to that

- I'll try to describe why I think laziness is an excellent feature

- But then also why it is not widely available

I stopped here at the lecture on October 4th

A common argument

You can compute infinite values

primes = [ x | x ‹- [2..], is_prime x ]

get_prime x = primes !! x

Consider

Imagine you are writing software to statically analyse a programming language. You can imagine many such analyses, and you wish that the user can turn on or off various analyses as they see fit. Suppose you first attempt to check if there are any calls to methods which are undefined:

if (analyse_called_names){

method_names = gather_method_names(..)

called_names = gather_called_names(..)

for name in called_names{

if name ∉ method_names{

report_error()

}

}

}

Being Concise

I'm going to keep everything on one slide so I'll pretend we have some set based operators:

if (analyse_called_names){

method_names = gather_method_names(..)

called_names = gather_called_names(..)

if (called_names ⊈ method_names){

report_error () }

}

Adding A Second Analysis

Now you wish to check if there are any methods which are defined but never used. Note that this might be considered more of a warning than an error:

if (analyse_called_names){

method_names = gather_method_names(..)

called_names = gather_called_names(..)

if (called_names ⊈ method_names){

report_error () }

}

if (analyse_uncalled_names){

method_names = gather_method_names(..)

called_names = gather_called_names(..)

if (method_names ⊈ called_names){

report_error () }

}Computing Sets Twice

This means if the user wants both analyses we are computingmethod_names and called_names twice.

if (analyse_called_names){

method_names = gather_method_names(..)

called_names = gather_called_names(..)

if (called_names ⊈ method_names){

report_error () }

}

if (analyse_uncalled_names){

method_names = gather_method_names(..)

called_names = gather_called_names(..)

if (method_names ⊈ called_names){

report_error () }

}

Only Compute What We Need

Any attempt to only compute the stuff you need gets complicated:

if (analyse_called_names || analyse_uncalled_names){

method_names = gather_method_names(..)

called_names = gather_called_names(..)

if (analyse_called_names && called_names ⊈ method_names)

{ report_error() }

if (analyse_uncalled_names && method_names ⊈ called_names)

{ report_error() }

}

analyse_(un)called_names twice.

A Third Analysis

Let's add another analysis that checks if method names overlap class names:

if (analyse_called_names || analyse_uncalled_names || analyse_class_names){

method_names = gather_method_names(..)

called_names = gather_called_names(..) // Hmm?

if (analyse_called_names){

if (called_names ⊈ method_names) { report_error() }

}

if (analyse_uncalled_names){

if (method_names ⊈ called_names) { report_error() }

}

if (analyse_class_names){

class_names = gather_class_names(..)

if (method_names ∩ class_names != {}){ return error() }

}

}

A Third Analysis

Let's add another analysis that checks if method names overlap class names:

if (analyse_called_names || analyse_uncalled_names || analyse_class_names){

method_names = gather_method_names(..)

if (analyse_called_names){

called_names = gather_called_names(..)

if (called_names ⊈ method_names) { report_error() }

}

if (analyse_uncalled_names){

called_names = gather_called_names(..)

if (method_names ⊈ called_names) { report_error() }

}

if (analyse_class_names){

class_names = gather_class_names(..)

if (method_names ∩ class_names != {}){ return error() }

}

}

Worse

- Imagine I now add another analysis that uses the set of

class_namesbut not either of the other two - We can use a thunk pattern, but that is still complicating the code a little

Lazy Implements Thunk Anyway

In a lazy language I just do this:

method_names = gather_method_names(..)

called_names = gather_called_names(..)

class_names = gather_class_names(..)

if (analyse_called_names && called_names ⊈ method_names){

report_error() }

if (analyse_uncalled_names && method_names ⊈ called_names){

report_error() }

if (analyse_class_names && method_names ∩ class_names){

report_error() }

Advantages of Eager Evaluation

- So why are not all languages lazy?

- Lazy evaluation removes the predictability of when an expression may be evaluated

- Hence if your language allows side effects, lazy evaluation does not really work

- So lazy evaluation only really works together with a purely functional language

- Haskell is lazy, Ocaml is not

Lazy vs Eager

Conclusion

- There is no reason really to choose a lazy or eager language for this practical

- In any case your choice is more or less made up for you by your other choices

- If you like Haskell, Clean, Miranda or Hope, you will compute values lazily, most other languages are eager

Managed vs Unmanaged

- Automatic memory management, sometimes called garbage collection

- Without this, whenever you need to store a value in memory, you must first ask for the space in memory

- When a value in memory is no longer useful, you should give back the space in memory that it used

- If you let the last reference to a value go out of scope, without freeing up the associated memory, you will not ever do so, hence you have a space-leak

- Unfortunately, if you give back the memory too soon, you may subsequently try to reference the value, this may cause a segmentation fault

Advantages of Memory Management

- You need not manage the memory yourself, this is hugely liberating

- I believe there is much gained productivity associated with:

- Object Oriented Languages

- Dynamically/Statically typed languages

- Lazy languages

- Reflection

- I'm not saying these things do not also improve productivity

Advantages of Memory Management

- I can say

f(g(x))and not have to think about whether the intermediate result produced bygneeds to be cleaned-up - I can

returnfrom anywhere I like in the middle of a method, without worrying about all paths re-joining to free-up used memory- Honestly: “Only One Return” was a common coding rule

- Sometimes called “Single Entry, Single Exit”

Advantages of Manual Memory Management

- Nostalgia

- In theory you can implement manual memory management more efficiently

- This is a bit debatable

- In any case, the improved productivity gained through the use of an automatic garbage collector, can be put to use in optimising the rest of your code

- In particular better algorithms rather than faster implementation of the same one

Advantages of Manual Memory Management

- Predictability, it can be difficult to know when the garbage collector might run

- So real-time systems which must respond to incoming external events may suffer

- But there is much research into automatic garbage collection, and real-time garbage collectors do exist

Managed vs Unmanaged

Conclusion

- Choose a managed language

- If you are only familiar with an unmanaged language either:

- learn a new managed one or

- use a conservative garbage collector

- Don't complain that I haven't given you any concrete advice

Other Distinctions

- Low-level vs High-level

- This is mostly a distinction made from a combination of those above

- Significant Whitespace or not:

- Personally I love it, but it is syntax it does not matter

- If it bothers you that much you can always write a parser for a different syntax

- Scripting vs Systems:

- If you must distinguish these you can interoperate between them

What is the Best Language?

Main Conclusion

- In general, it is less what the language provides and more what libraries are available in that language

- This practical however, does not require the use of any major libraries

- Hence you are somewhat more free to choose based on the criteria I have discussed above

- Good Luck!

Summary and Conclusions

- My hard advice can be summarised as:

- Choose a strongly typed language

- Choose a language with automatic memory management

- It may be a useful thing to report in your README why you have chosen the language you have

- A perfectly valid reason is:

- “Language X is my favourite language which I know better than all others”

Any Questions?

Structure & Strategy

Computer Science Large Practical

This Lecture

- In this lecture I will try to give some helpful advice about the structure of your source code and your stategy

Overall Structure

- I do not wish to give too much advice since I do not want a set of near identically structured solutions

- Part of the practical is structuring it yourself. However, it seems

likely you will want at least the following components:

- A parser

- A representation of the state of a simulation

- Operations over that state

- The simulation algorithm

- Something to handle output

- Something to analyse results

- A test suite

Overall Structure

- I call these components, I do not call them:

- Classes, Instances, Objects, structs

- Interfaces, signatures, prototypes, aspects

- Methods, functions

- Modules, packages, functors

- Types, type classes

- This is not because I did not specify the source language

- It is because they could reasonably be any of these things

- It is up to you to decide what is most appropriate

Some Obvious Decisions

- Do you want to parse into some abstract syntax data structure

and then convert that into a representation of the initial state

- Or you could parse directly into the representation of the initial state

- Do you wish to print out events as they occur during the simulation

- Or record them and print them out later

- Do you wish to analyse the simulation events as the simulation proceeds

- Or analyse the events afterwards

- By recording them, or you could write a parser for the events

- You could write the simulator and events analyser as two completely separate programs, even in different languages

Parsing

- You do not need to start with the parser

- The parser produces some kind of data structure. You could instead start by hard coding your examples in your source code

- But the parsing for this project is pretty simple

- Hence I would start with the parser, even if I did not complete

the parser before moving on

- I find hard coding data structure instances laborious

- But doing so would ensure your simulator code is not heavily coupled with your parser code, if you decide that is important

Software Construction

- Software construction is relatively unique in the world of large projects in that it allows a great deal of back tracking

- Many other forms of projects, such as construction, event planning, and manufacturing, only allow for backtracking in the design phase.

- Because of this, traditional project planning advocates a large amount of up-front design

- When computer programming projects first started to grow beyond the remit of a single week, such techniques were applied

- We now know that often this is something of a waste of this unique ability to allow backtracking

Software Construction

- Another way to view this:

- Construction projects cannot afford to change the design once construction has begun

- Hence, the design phase consists of building the object virtually (on paper, on a computer) when backtracking is inexpensive

- Software projects do not produce physical artifacts, so the construction of the software is mostly the design

Refactoring

- Refactoring is the process of changing code such that it computes exactly the same function (of inputs to outputs), but has a better design.

- This is tremendously powerful, because it allows us to try out various designs, rather than guessing which one is the best

- It allows us to determine whether something is possible, without necessarily building it in the best way

- It allows us to design retrospectively once we know significant details about the problem at hand.

- It allows us to avoid the cost of full commitment to a particular solution which, ultimately, fails.

Suggested Strategy

- Note that this is merely a suggested strategy

- Start with the simplest program possible

- Incrementally add features based on the requirements

- After each feature is added, refactor your code

- This step is important, it helps to avoid the risk of developing an unmaintainable mess

- Additionally it should be done with the goal of making future feature implementations easier

- This step includes janitorial work (see below)

Suggested Strategy

- At each stage, you always have something that works

- Although you need not specifically design for later features you do at least know of them, and hence can avoid doing anything which will make those features particularly difficult.

Alternative Inferior Strategy

- Design the whole system before you start

- Work out all components and sub-components you will need

- Start with the sub-components which have no dependencies

- Complete each sub-component before moving on to the next

- Once you have developed all the dependencies of a component you can now choose that component to develop

- Finally, put everything together to obtain the entire system

- Test the entire system

Janitorial Work

- Wish to discuss two points:

- Real and Logical Time

- How to break a rule

- To do so I'll need the notion of janitorial work

- Examples of Janitorial

- Reformating

- Commenting

- Changing Names

- Tightening

Janitorial Work

Reformating

void method_name (int x)

{

return x + 10;

}

void method_name(int x) {

return x + 10;

}

Janitorial Work

Reformating

- Refomatting is entirely superficial

- It is important to consider when you apply this

- Reformatting can result in a large ‘diff’

- This may well conflict with other work performed concurrently

- Reformatting should be largely unnecessary, if you keep your code

formatting correctly in the first place

- More commonly required on group projects

Janitorial Work

Commenting

- I hope I needn't re-iterate the importance of writing good comments in your source code

- When done as janitorial work this can be particularly useful