For a given structural ambiguity, say PP attachment

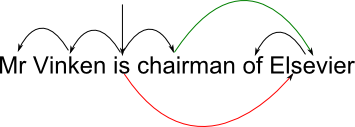

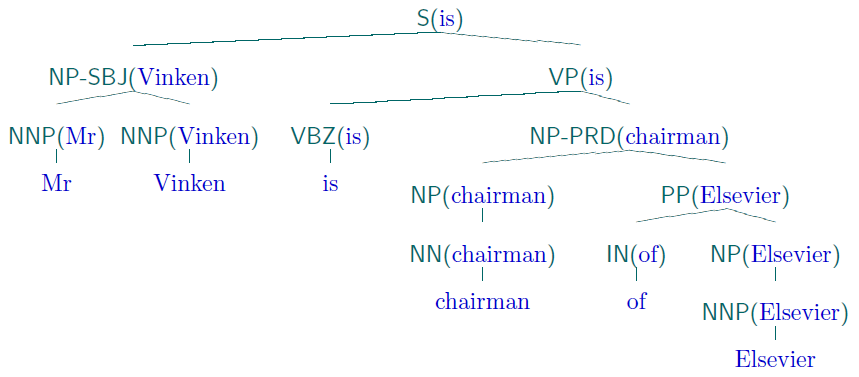

Consider the two alternative parses we would get from the Treebank grammar for Mr Vinken is chairman of Elsevier:

![[no description, sorry]](../15/ppfig2.png)

![[no description, sorry]](../15/ppfig1.png)

How did we get those two analyses?

![[no description, sorry]](../15/ppDeriv2.png)

So the only difference is the probabilities of the rules highlighted in red

And those will always give the same answer

We really need to pay attention to the probability of those words being tightly or loosely connected

Improving our approach to probabilistic grammar requires paying more attention to individual words

Back to bigrams, but in a little more detail

Here are the top 10 bigrams from Herman Melville's famous American novel Moby Dick:

Aside from the whale, these are all made up of very high-frequency closed-class items

The highest-ranked bigram of open-class items doesn't come until position 27: sperm whale, with frequency 182

Nonetheless, it feels as if there's something particularly interesting about that one...

"You shall know a word by the company it keeps" (J. R. Firth)

One of the things we evidently know about our language is what words go with what

Choosing the right word from among a set of synonyms is a common problem for second-language learners

Or consider the old (linguists') joke:

The name for an 'interesting' pair is collocation

How can we separate the interesting pairs from the dull ones?

We could try just throwing out the 'little words'

corpus.stopwords.words('english') in NLTK

Some of these feel special (right whale, moby dick), but others (old man in particular) just seem ordinary
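A minimal sketch of throwing out the 'little words', assuming we drop any adjacent pair in which either word is a stopword. The short `STOPWORDS` set and the function name `content_bigrams` are illustrative stand-ins, not from the lecture; the NLTK stopword list is much longer.

```python
from collections import Counter

# Tiny stand-in stopword list (an assumption for illustration);
# in practice one would use the much longer NLTK English list.
STOPWORDS = {"of", "the", "in", "a", "and", "to"}

def content_bigrams(tokens, stopwords=STOPWORDS):
    """Count adjacent word pairs in which neither word is a stopword."""
    pairs = zip(tokens, tokens[1:])
    return Counter(
        (a, b) for a, b in pairs
        if a not in stopwords and b not in stopwords
    )

tokens = "the sperm whale and the right whale swim in the sea".split()
counts = content_bigrams(tokens)
print(counts.most_common())
```

Filtering after pairing keeps only pairs that were really adjacent in the text; removing stopwords first and then pairing would create bigrams out of words that never stood next to each other.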

What we want is some way of factoring in frequency more generally

Conditional and joint probability are the answer

One way of getting at our intuition might be to say we're looking for cases where the two probabilities are not independent

Now the bigram frequency gives us an MLE of the joint probability directly

So the ratio of that probability, to what it would be if they were independent, would be illuminating:

Pointwise mutual information

Terminology note: Strictly speaking we should distinguish between pointwise mutual information and mutual information as such. The latter is a measure over distributions, as opposed to individuals.
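Written out, the ratio described above, taken on a log scale, is the usual definition of pointwise mutual information. The symbols here are notation chosen for this note: f and u are the bigram and unigram counts, and N is the corpus size used to turn counts into MLE probabilities.

$$
\mathrm{PMI}(w_1, w_2) \;=\; \log_2 \frac{P(w_1, w_2)}{P(w_1)\,P(w_2)}
\qquad\text{with}\qquad
P(w_1, w_2) \approx \frac{f(w_1, w_2)}{N},\quad
P(w) \approx \frac{u(w)}{N}
$$

A value of 0 means the pair occurs exactly as often as independence would predict; positive values mean it occurs more often.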

Let's compare the most frequent bigram (of the) with the first interesting one we saw, sperm whale

>>> f[('of', 'the')]

1879

>>> u['of']

6609

>>> u['the']

14431

>>> 1879.0/218360

0.0086050558710386513

>>> (6609.0 * 14431)/(218361*218361)

0.0020002396390988637

>>> log((1879.0/218360)/((6609.0 * 14431)/(218361*218361)),2)

2.105011706733956

>>> f[('sperm','whale')]

182

>>> u['sperm']

244

>>> u['whale']

1226

>>> 182.0/218361

0.00083348216943501818

>>> (244.0*1226)/(218361*218361)

6.2737924534150313e-06

>>> log((182.0/218361)/((244.0*1226)/(218361*218361)),2)

7.0536697225202696

Simply put, the mutual information between sperm and whale is 5 binary orders of magnitude greater than that between of and the
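The calculation in the session above can be wrapped up as a small function. This is a sketch in Python 3 using the counts shown in the session; the function name `pmi` and its parameter names are illustrative, and it assumes a text of N tokens has N - 1 bigrams.

```python
from math import log2

def pmi(pair_count, left_count, right_count, n_bigrams, n_unigrams):
    """Pointwise mutual information in bits, from raw counts (MLE estimates)."""
    joint = pair_count / n_bigrams
    independent = (left_count / n_unigrams) * (right_count / n_unigrams)
    return log2(joint / independent)

N = 218361  # token count used in the session above

of_the = pmi(1879, 6609, 14431, N - 1, N)
sperm_whale = pmi(182, 244, 1226, N - 1, N)
print(of_the, sperm_whale, sperm_whale - of_the)
```

Whether the bigram total is taken as N or N - 1 makes no practical difference at this corpus size.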

Why are we using log base 2?

We can use the same approach to build a translation lexicon

Instead of bigrams within a single text

[((u'commission', u'commission'), 113),

((u'rapport', u'report'), 84),

((u'régions', u'regions'), 71),

((u'parlement', u'parliament'), 66),

((u'politique', u'policy'), 62),

((u'voudrais', u'like'), 58),

((u'président', u'president'), 57),

((u'fonds', u'funds'), 52),

((u'monsieur', u'president'), 50),

((u'union', u'union'), 48),

((u'états', u'states'), 46),

((u'membres', u'member'), 46),

((u'états', u'member'), 46),

((u'développement', u'development'), 44),

((u'membres', u'states'), 43),

((u'également', u'also'), 42),

((u'structurels', u'structural'), 41),

((u'fonds', u'structural'), 41),

((u'structurels', u'funds'), 40),

((u'cohésion', u'cohesion'), 38),

((u'voudrais', u'would'), 38),

((u'européenne', u'european'), 37),

((u'orientations', u'guidelines'), 37),

((u'commission', u'would'), 36),

((u'madame', u'president'), 34),

((u'groupe', u'group'), 33),

((u'commissaire', u'commissioner'), 33),

((u'présidente', u'president'), 32),

((u'sécurité', u'safety'), 32),

((u'transports', u'transport'), 30)]

Pretty good

And would be better if we had done monolingual collocation detection first!
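One way this could work, sketched under assumptions: the input is a list of sentence-aligned pairs, and a word pair counts as co-occurring when one word is in the source sentence and the other in its aligned translation. How the list above was actually scored is not stated here; the function name `cooccurrence_pmi` and the toy data are illustrative only.

```python
from collections import Counter
from itertools import product
from math import log2

def cooccurrence_pmi(aligned_pairs):
    """Score (source_word, target_word) pairs by PMI over aligned sentences."""
    pair_counts, src_counts, tgt_counts = Counter(), Counter(), Counter()
    for src_sentence, tgt_sentence in aligned_pairs:
        # Count each word at most once per sentence pair
        src_words, tgt_words = set(src_sentence), set(tgt_sentence)
        src_counts.update(src_words)
        tgt_counts.update(tgt_words)
        pair_counts.update(product(src_words, tgt_words))
    n = len(aligned_pairs)
    return {
        (s, t): log2((c / n) / ((src_counts[s] / n) * (tgt_counts[t] / n)))
        for (s, t), c in pair_counts.items()
    }

# Toy aligned data, invented for illustration
pairs = [
    (["le", "rapport"], ["the", "report"]),
    (["le", "parlement"], ["the", "parliament"]),
    (["le", "rapport", "du", "parlement"], ["the", "report", "of", "parliament"]),
]
scores = cooccurrence_pmi(pairs)
print(scores[("rapport", "report")], scores[("rapport", "the")])
```

Words that appear in every sentence pair, like the, score no better than chance against everything, which is the same effect that pushed of the down the monolingual ranking.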

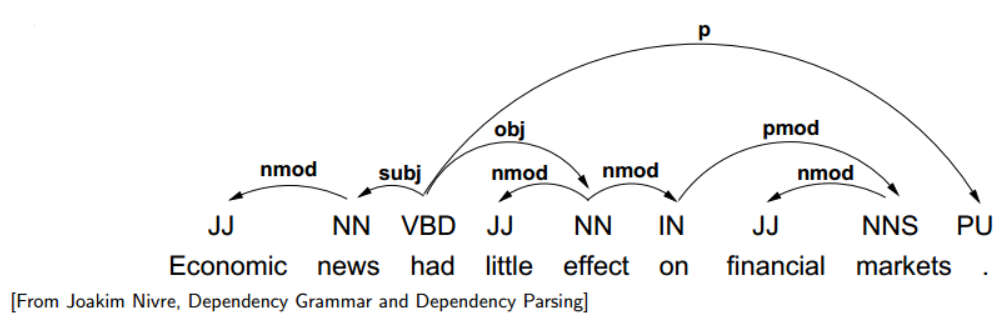

We mentioned the idea of the head of a constituent in earlier lectures

Approaches to grammar which focus on heads are called dependency grammars

The standard form of diagram shows where this name comes from:

(Green for the preferred attachment, red for the less likely one)

Dependency grammars don't have rules in the way that a CFG does

A given approach to dependency grammar will also involve an inventory of relations

Dependency graphs are not required to avoid crossings
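A minimal sketch of what a dependency graph looks like as data, assuming we store each arc as a (head, dependent) pair of word positions. The particular attachments below are chosen for illustration and are not taken from any specific dependency scheme; `crossing_arcs` is a hypothetical helper that reports arcs which would cross if drawn above the sentence.

```python
def crossing_arcs(arcs):
    """Return pairs of arcs whose spans overlap without nesting."""
    spans = [tuple(sorted(arc)) for arc in arcs]
    crossings = []
    for i, (a, b) in enumerate(spans):
        for c, d in spans[i + 1:]:
            # Two arcs cross when exactly one endpoint of one lies
            # strictly inside the span of the other
            if a < c < b < d or c < a < d < b:
                crossings.append(((a, b), (c, d)))
    return crossings

words = ["Mr", "Vinken", "is", "chairman", "of", "Elsevier"]
# (head, dependent) positions; illustrative attachments only
arcs = [(2, 1), (1, 0), (2, 3), (3, 4), (4, 5)]

print(crossing_arcs(arcs))              # no crossings in this analysis
print(crossing_arcs([(0, 2), (1, 3)]))  # a deliberately crossing pair
```

A graph with no crossings corresponds to a nested bracketing of the sentence; graphs with crossings have no such bracketing.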

It's possible to add some of the benefits of dependency grammar to PCFGs

We can't do statistics directly on these augmented categories

But a range of techniques have been developed in the last few years to work around this

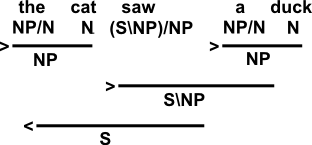

Categorial grammar represents a different approach to putting words at the centre of things

In the simplest form of CG, all we need is a lexicon like this

N: duck, cat, ...

NP/N: the, a, ...

S\NP: ran, slept, ...

(S\NP)/NP: saw, liked

Where we read e.g.

NP/N as the category for things which combine with an N to their right to produce an NP

S\NP as the category for things which combine with an NP to their left to produce an S

In the obvious way this gives us the following derivation for the cat saw a duck:

The arrows next to the derivation steps identify which of the (meta-)rules was used for that step:

X/Y Y → X

Y X\Y → X

These are the only two rules, or rule schemata, needed for the simplest categorial grammars
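The two rule schemata are enough to build a tiny recogniser. The sketch below uses the lexicon given earlier, represents a complex category as a (result, slash, argument) tuple, and fills a chart bottom-up; the representation and the names `combine` and `parse` are choices made for this illustration, not a standard implementation.

```python
def fwd(result, arg):
    """Category X/Y: looks for a Y to its right."""
    return (result, "/", arg)

def bwd(result, arg):
    """Category X\\Y: looks for a Y to its left."""
    return (result, "\\", arg)

LEXICON = {
    "duck": "N", "cat": "N",
    "the": fwd("NP", "N"), "a": fwd("NP", "N"),
    "ran": bwd("S", "NP"), "slept": bwd("S", "NP"),
    "saw": fwd(bwd("S", "NP"), "NP"), "liked": fwd(bwd("S", "NP"), "NP"),
}

def combine(left, right):
    """Apply both rule schemata to two adjacent categories."""
    results = []
    # Forward application: X/Y  Y  ->  X
    if isinstance(left, tuple) and left[1] == "/" and left[2] == right:
        results.append(left[0])
    # Backward application: Y  X\Y  ->  X
    if isinstance(right, tuple) and right[1] == "\\" and right[2] == left:
        results.append(right[0])
    return results

def parse(words):
    """Return the set of categories derivable for the whole word sequence."""
    n = len(words)
    # chart[i][j] holds the categories derivable for words[i:j]
    chart = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        chart[i][i + 1].add(LEXICON[word])  # KeyError for unknown words
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):
                for left in chart[i][k]:
                    for right in chart[k][j]:
                        chart[i][j].update(combine(left, right))
    return chart[0][n]

print(parse("the cat saw a duck".split()))
```

All the grammatical information lives in the lexicon: adding a new construction means adding or changing lexical categories, not adding rules.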