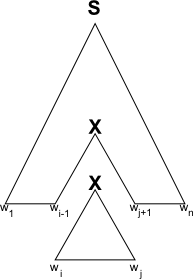

Before we look more closely at some of the in-principle problems with massive PCFGs

We'll look at some practical difficulties

Multiple tags per terminal (word)

Means 100s of thousands of edges in a probabilistic chart parser

If we're working with spoken language, the numbers are even worse

Finding all the parse trees, so that you are sure to find the best, is therefore often out of the question

More recent experiments

So although what I said yesterday is true in principle

Why is this?

. . . produces small numbers quickly

So short analyses are almost always more probable than long ones
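A minimal sketch of the effect, assuming only that the probability of an analysis is the product of the probabilities of the rules it uses. The rule probabilities here are hypothetical, all set to 0.1 purely for illustration:

```python
import math


def tree_probability(rule_probs):
    """Probability of an analysis: the product of its rule probabilities."""
    return math.prod(rule_probs)


# Hypothetical rule probabilities (0.1 each), chosen only for illustration.
short_analysis = [0.1] * 3   # an analysis that uses 3 rules
long_analysis = [0.1] * 6    # an analysis that uses 6 rules

p_short = tree_probability(short_analysis)
p_long = tree_probability(long_analysis)

# Every extra rule multiplies in another number below 1,
# so the longer analysis is bound to come out less probable.
print(p_short, p_long)
```

Doubling the number of rules here squares the probability, which is why a sort on raw probability favours short analyses.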

Consider the trivial case of the three-word phrase "the men came"

And here are the costs (that is )

It turns out that even though analysing 'the' as DT is 500 times more likely than analysing it as JJ

Here are the two key subtrees, with their accumulated costs:

![tree for [NP-SBJ [JJ the][NNS men]] with cost 10.19 + 9.24 + 6.58 = 26.01](../14/tree1.png)

![tree for [S [NP-SBJ [DT the][NNS men]][VP [VBD came]]] with cost 33.25, NP-SBJ cost 16.14](../14/tree2.png)

Even though the 'wrong' NP-SBJ has much higher cost than the 'right' one

What we've been using to order the agenda is called the inside probability

Using the inside probability to sort the agenda will clearly prefer smaller trees
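The preference for smaller trees can be seen by putting the two accumulated costs from the trees above on a lowest-cost-first agenda. This is only a sketch: the use of `heapq` and the string labels are assumptions made for illustration, not the parser's actual data structures.

```python
import heapq

# Agenda entries are (cost, label); the costs are the totals shown on the two trees.
agenda = []
heapq.heappush(agenda, (33.25, "S [NP-SBJ [DT the][NNS men]] [VP [VBD came]]"))
heapq.heappush(agenda, (26.01, "NP-SBJ [JJ the][NNS men]"))

# A lowest-cost-first agenda hands back the two-word 'wrong' NP-SBJ
# before the complete three-word S, simply because it covers fewer words.
first_cost, first_label = heapq.heappop(agenda)
print(first_cost, first_label)
```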

The name for what we're looking for is a figure of merit

There are lots of possibilities

This would clearly have the desired effect in our worked example above

16.14 to 8.07

Note that normalising in the cost domain uses the arithmetic mean

In the probability domain, we use the geometric mean
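A small sketch of the two views of normalisation, under two assumptions: that we normalise by the number of words an edge spans (consistent with 16.14 becoming 8.07 for a two-word NP-SBJ), and that costs are negative base-2 log probabilities (the base is a guess, and the identity holds for any base). The function names are illustrative only.

```python
import math


def per_word_cost(cost, n_words):
    """Arithmetic mean in the cost domain: total cost divided by words spanned."""
    return cost / n_words


def per_word_probability(prob, n_words):
    """Geometric mean in the probability domain: the n-th root of the probability."""
    return prob ** (1.0 / n_words)


# Costs taken from the worked example above.
right_np = per_word_cost(16.14, 2)   # 'right' NP-SBJ, two words
wrong_np = per_word_cost(26.01, 2)   # 'wrong' NP-SBJ, two words
full_s = per_word_cost(33.25, 3)     # complete S, three words

# The same normalisation carried out on probabilities (assuming base-2 costs).
right_np_prob = 2.0 ** -16.14
same_thing = -math.log2(per_word_probability(right_np_prob, 2))

print(right_np, wrong_np, full_s, same_thing)
```

With per-word costs the complete S now ranks ahead of the 'wrong' NP-SBJ, whereas on raw cost it ranked behind it.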

Using the left half of the outer cost as well improves performance further

See Caraballo and Charniak 1996 for the details

Even with a good figure of merit, our chart will still grow very large

So standard practice is to prune the agenda

The result is called beam search

Whenever the agenda is full

There are two possibilities (ignoring ties)
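One plausible reading of a fixed-size agenda, sketched under assumptions: the class name `BeamAgenda`, the `width` parameter and the (merit, label) tuples are all hypothetical, lower merit is taken to be better (as with costs), and ties are handled arbitrarily.

```python
import bisect


class BeamAgenda:
    """Agenda holding at most `width` entries, kept sorted best (lowest merit) first."""

    def __init__(self, width):
        self.width = width
        self.items = []  # sorted list of (merit, label) tuples

    def add(self, merit, label):
        """Insert an entry; return whichever entry was dropped, or None."""
        entry = (merit, label)
        if len(self.items) < self.width:
            bisect.insort(self.items, entry)
            return None
        worst = self.items[-1]
        if entry >= worst:
            # The new entry is no better than the current worst: discard it.
            return entry
        # The new entry is better: insert it and evict the current worst.
        bisect.insort(self.items, entry)
        return self.items.pop()

    def pop_best(self):
        """Remove and return the entry with the lowest merit."""
        return self.items.pop(0)


agenda = BeamAgenda(width=2)
agenda.add(13.0, "a")
agenda.add(11.0, "b")
dropped_new = agenda.add(20.0, "c")   # worse than everything held, so dropped
dropped_old = agenda.add(8.0, "d")    # better than the worst, so "a" is evicted
best = agenda.pop_best()
```

Keeping the list sorted makes both the best and the worst entry cheap to find, at the price of a linear-time insertion; a real parser would likely choose a different structure.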