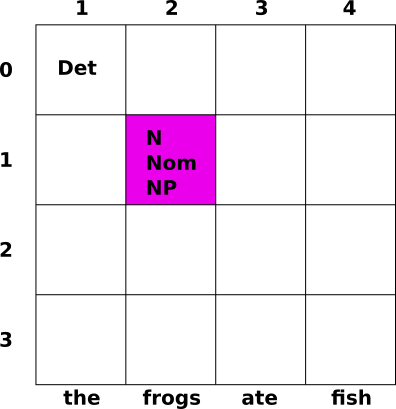

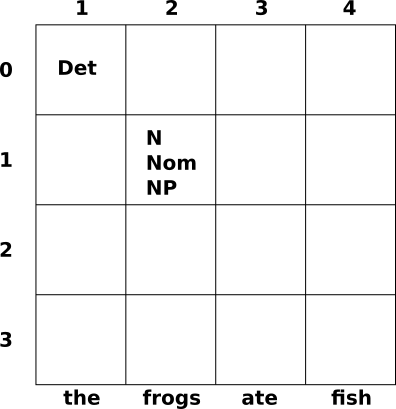

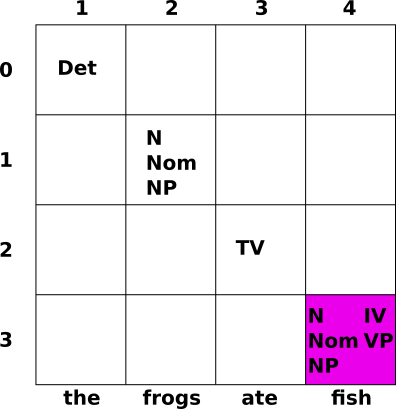

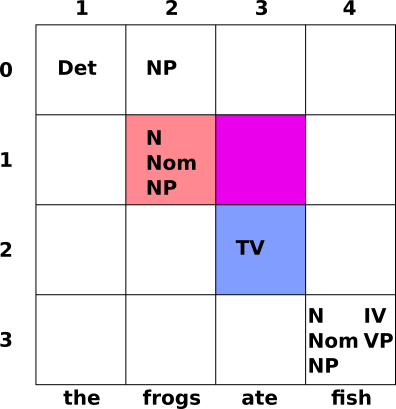

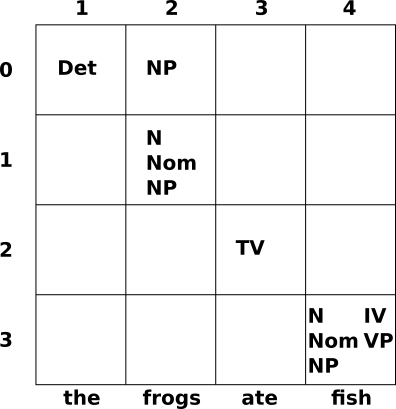

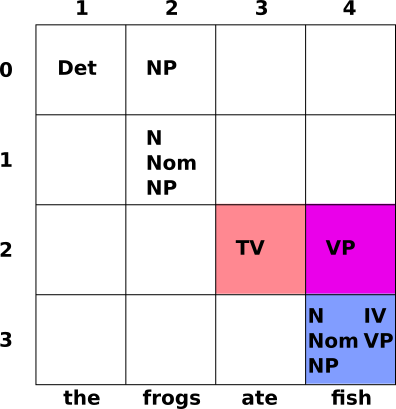

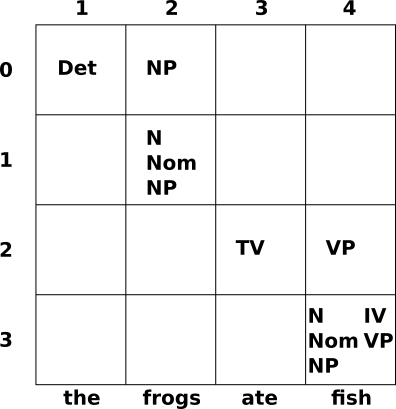

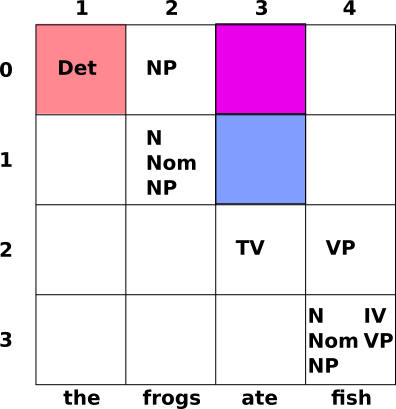

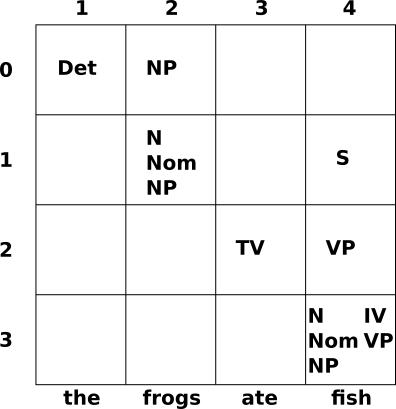

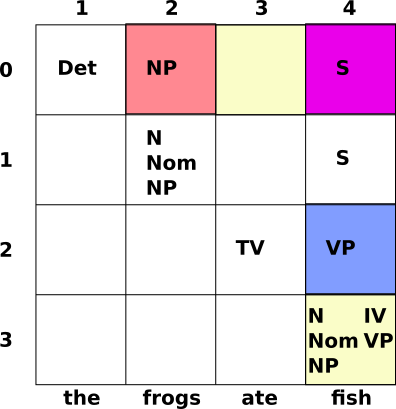

Just the empty matrix

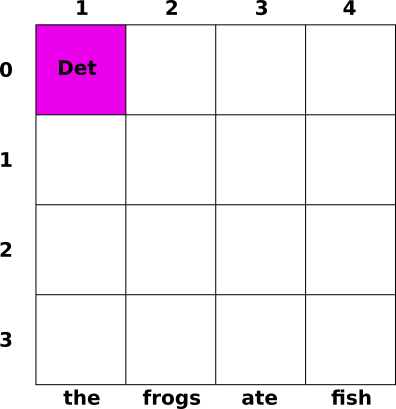

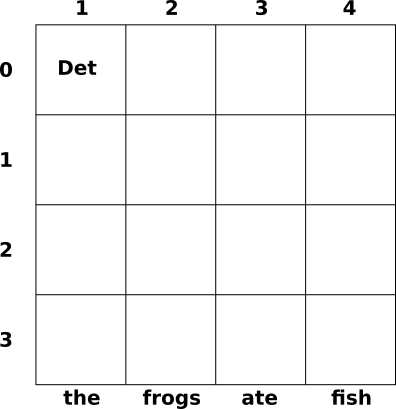

Unary branching rules: Det → the

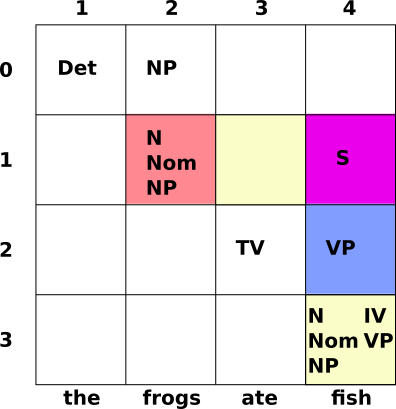

Unary branching rules: N → frogs, Nom → N, NP → Nom

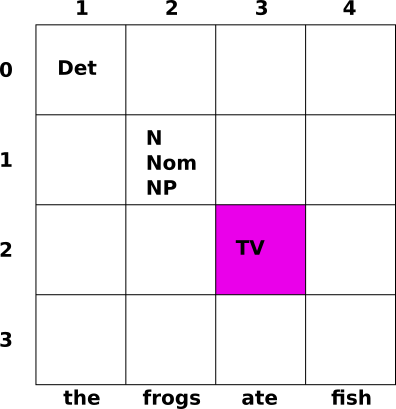

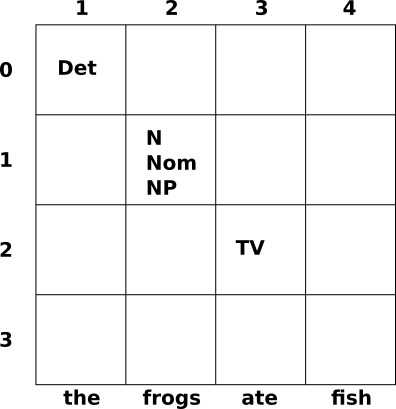

Unary branching rules: TV → ate

Unary branching rules: N → fish, Nom → N, NP → Nom, IV → fish, VP → IV

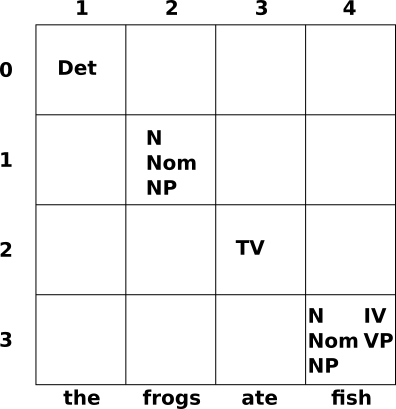

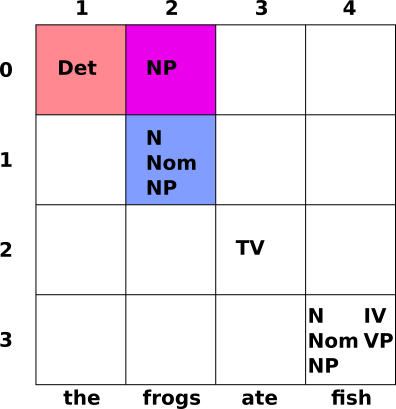

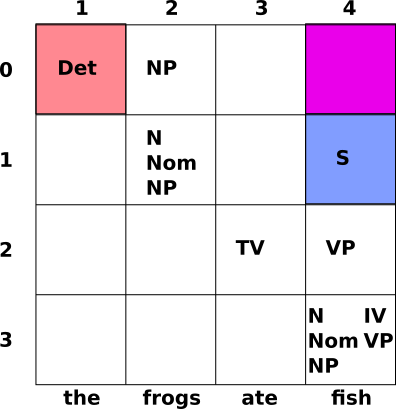

Binary branching rule: NP → Det Nom

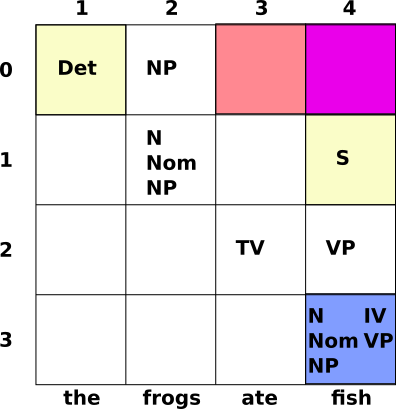

Binary branching rule: VP → TV NP

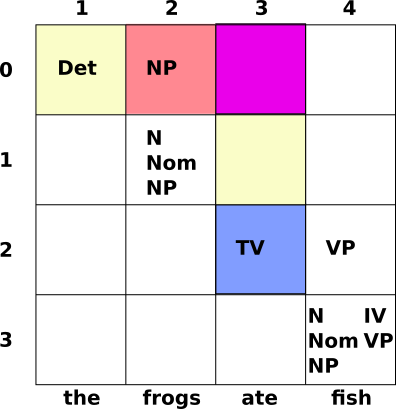

(0,1) & (1,3) ¬⇒

(0,2) & (2,3) ¬⇒

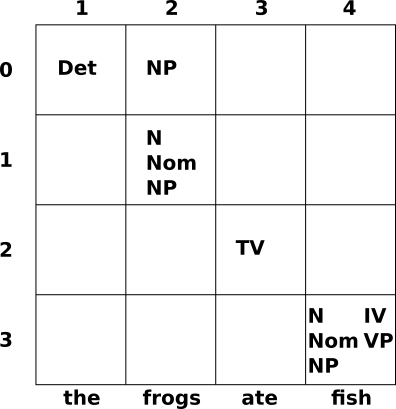

Binary branching rule: S → NP VP

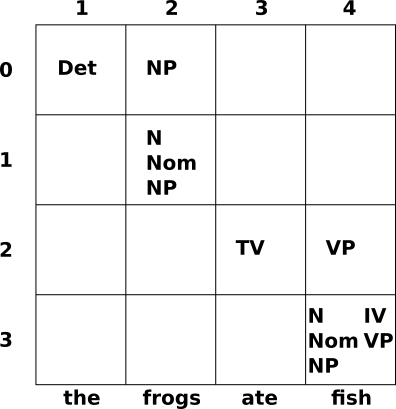

(1,3) & (3,4) ¬⇒

(1,2) & (2,4) ⇒ (1,4)

Binary branching rule: S → NP VP

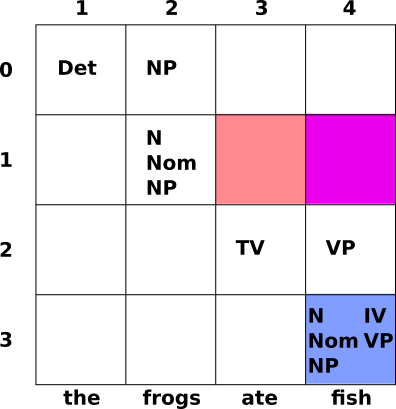

(0,1) & (1,4) ¬⇒

(0,3) & (3,4) ¬⇒

(0,2) & (2,4) ⇒ (0,4)
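The chart-filling steps walked through above can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive implementation: the names `UNARY`, `BINARY`, `close_unary` and `cky_chart` are assumptions made for this sketch, and the grammar is just the handful of rules used in the worked example.

```python
from collections import defaultdict

# Unary rules from the worked example, keyed by child (a word or a category).
UNARY = {
    "the": {"Det"}, "frogs": {"N"}, "ate": {"TV"}, "fish": {"N", "IV"},
    "N": {"Nom"}, "Nom": {"NP"}, "IV": {"VP"},
}
# Binary rules, keyed by the pair of child categories.
BINARY = {
    ("Det", "Nom"): {"NP"},
    ("TV", "NP"): {"VP"},
    ("NP", "VP"): {"S"},
}

def close_unary(labels):
    """Add every category reachable from `labels` through chains of unary rules."""
    agenda = list(labels)
    while agenda:
        child = agenda.pop()
        for parent in UNARY.get(child, ()):
            if parent not in labels:
                labels.add(parent)
                agenda.append(parent)
    return labels

def cky_chart(words):
    """Fill the chart bottom-up: cell (i, j) holds the categories spanning words i..j."""
    n = len(words)
    chart = defaultdict(set)
    for i, word in enumerate(words):
        chart[i, i + 1] = close_unary(set(UNARY.get(word, ())))
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):          # every split point of (i, j)
                for left in chart[i, k]:
                    for right in chart[k, j]:
                        chart[i, j] |= BINARY.get((left, right), set())
            close_unary(chart[i, j])
    return chart

chart = cky_chart("the frogs ate fish".split())
print(sorted(chart[0, 4]))   # categories spanning the whole string
```

The string is recognised if the start symbol S ends up in the cell spanning the whole input, here (0,4).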

We cannot tell from the CKY chart as specified what the syntactic analysis of the input string is.

We just have a chart recogniser, a way of determining whether a string belongs to the language generated by the grammar.

Changing this to a parser requires recording which existing constituents were combined to make each new constituent. This requires another field to record the one or more ways in which a constituent spanning (i,j) can be made from constituents spanning (i,k) and (k,j).
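One way to picture the extra field is as a list of backpointers stored alongside each category in a cell. The sketch below is an assumption-laden illustration, not the only possible design: the names `add`, `parse_chart` and `trees`, and the tuple encoding of backpointers, are invented here, and the grammar is the one from the worked example.

```python
from collections import defaultdict

LEXICAL = {"the": ["Det"], "frogs": ["N"], "ate": ["TV"], "fish": ["N", "IV"]}
UNARY = {"N": ["Nom"], "Nom": ["NP"], "IV": ["VP"]}
BINARY = {("Det", "Nom"): ["NP"], ("TV", "NP"): ["VP"], ("NP", "VP"): ["S"]}

def add(cell, label, back):
    """Record one way of building `label`; on first sight, apply unary rules above it."""
    is_new = label not in cell
    cell[label].append(back)
    if is_new:
        for parent in UNARY.get(label, ()):
            add(cell, parent, ("unary", label))

def parse_chart(words):
    """CKY fill in which each cell maps a category to its list of backpointers."""
    n = len(words)
    chart = defaultdict(lambda: defaultdict(list))
    for i, word in enumerate(words):
        for tag in LEXICAL.get(word, ()):
            add(chart[i, i + 1], tag, ("word", word))
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):
                for left in list(chart[i, k]):
                    for right in list(chart[k, j]):
                        for parent in BINARY.get((left, right), ()):
                            # Remember the split point and the two children used.
                            add(chart[i, j], parent, ("binary", k, left, right))
    return chart

def trees(chart, i, j, label):
    """Enumerate every tree for `label` over span (i, j) by following backpointers."""
    for back in chart[i, j][label]:
        if back[0] == "word":
            yield (label, back[1])
        elif back[0] == "unary":
            for child in trees(chart, i, j, back[1]):
                yield (label, child)
        else:
            _, k, left, right = back
            for l in trees(chart, i, k, left):
                for r in trees(chart, k, j, right):
                    yield (label, l, r)

words = "the frogs ate fish".split()
parses = list(trees(parse_chart(words), 0, len(words), "S"))
print(parses)
```

Because each category keeps a list, an ambiguous constituent simply accumulates several backpointers, and `trees` enumerates all the analyses they license.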

Features help express the grammar neatly, but they don't change the ambiguity problem

Active chart parsing gives us flexibility, but that doesn't solve the ambiguity problem

Examples of ambiguity:

“One morning I shot an elephant in my pyjamas ...” (Groucho Marx)

We saw penguins flying over Antarctica

hot tea and coffee

empty bottles and fag-ends

The significance of attachment ambiguity is clear when we look at semantics

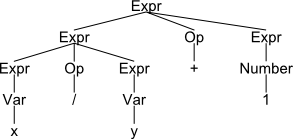

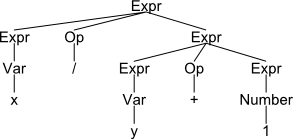

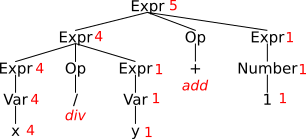

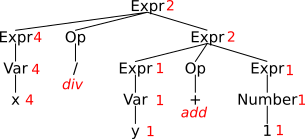

Back to Python arithmetic expressions, with an even simpler grammar:

Expr → Expr Op Expr | Var | Number

Op → '+' | '-' | '*' | '/'

Assuming some more work to tokenise the input, this will give us two analyses for the string "x/y+1": (x/y)+1 and x/(y+1)

Suppose x is 4 and y is 1: the first analysis evaluates to 5, the second to 2

We see that the choice of attachment makes a very real difference to the result
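A small sketch makes the two results concrete. It assumes the two analyses of "x/y+1" are written by hand as nested tuples (no parser is involved), and the function name `evaluate` is chosen purely for illustration: the value of each Expr node is computed from the values of its children.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub,
       "*": operator.mul, "/": operator.truediv}

def evaluate(tree, env):
    """Value of a node, computed from the values of its children."""
    if isinstance(tree, tuple):            # Expr → Expr Op Expr
        left, op, right = tree
        return OPS[op](evaluate(left, env), evaluate(right, env))
    if isinstance(tree, str):              # Expr → Var
        return env[tree]
    return tree                            # Expr → Number

env = {"x": 4, "y": 1}
left_attached = (("x", "/", "y"), "+", 1)     # (x/y)+1
right_attached = ("x", "/", ("y", "+", 1))    # x/(y+1)

print(evaluate(left_attached, env))    # 5.0
print(evaluate(right_attached, env))   # 2.0
```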

We call that kind of semantic computation a compositional one

"The meaning of the whole [constituent] is a function of the meaning of the parts [children]"

How does this relate to natural language?

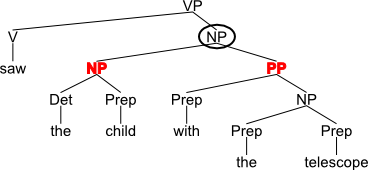

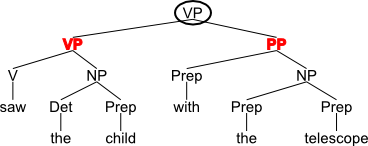

So, for example, for the kind of PP attachment ambiguity we keep encountering

In other words, with respect to "saw the child with the telescope"

We need a way to choose the best analysis from among many

And we need a sound basis for ranking these

A gold standard provides at least partial solutions...

Mitch Marcus at the Univ. of Pennsylvania took this seriously

The Penn Treebank project was launched in 1989

The first release contained 'skeletal' parses, that is, simple syntactic trees

Here's an example:

(S

(NP

(ADJP Battle-tested Japanese industrial)

managers)

here always

(VP buck up

(NP nervous newcomers)

(PP with

(NP the tale

(PP of

(NP the

(ADJP first

(PP of

(NP their countrymen)))

(S (NP *)

to

(VP visit

(NP Mexico)))))))))

This annotation was produced by manually correcting the output of a simple chunking parser, then removing the POS-tags and some NP-internal structure

The second release added much more information, including some hyphenated functional indications:

(S (NP-SBJ (NP Battle-tested Japanese industrial managers)

(ADVP-LOC here))

(ADVP-TMP always)

(VP buck

(PRT up)

(NP nervous newcomers)

(PP-CLR with

(NP (NP the tale)

(PP of

(NP (NP the

first)

(PP of

(NP their countrymen))

(SBAR (WHNP-1 0)

(S (NP-SBJ *T*-1)

(VP to

(VP visit

(NP Mexico)))))))))))

The functional markers here are -SBJ (subject), -LOC (locative), -TMP (temporal) and -CLR (closely related)

Trivial, really

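For every node in every tree with non-lexical children, add a rule with the node's label on the left-hand side and the sequence of its children's labels on the right-hand side

So, for the tree in the previous slide, we'd get e.g. S → NP-SBJ ADVP-TMP VP and NP-SBJ → NP ADVP-LOC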

The result is guaranteed to give at least one parse to every sentence in the Treebank
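Reading a grammar off a treebank can be sketched as a short recursive walk. The encoding here is an assumption made for illustration: trees are nested tuples whose first element is the label, bare strings are words, and the tree is an abbreviated fragment of the second-release example (the PP-CLR subtree is omitted). "Non-lexical children" is interpreted as "all children are themselves subtrees".

```python
def rules(tree):
    """Yield (lhs, rhs) for every node whose children are all subtrees."""
    label, *children = tree
    if all(isinstance(child, tuple) for child in children):
        yield label, tuple(child[0] for child in children)
    for child in children:
        if isinstance(child, tuple):
            yield from rules(child)

# Abbreviated fragment of the second-release tree (PP-CLR subtree left out).
tree = (
    "S",
    ("NP-SBJ",
        ("NP", "Battle-tested", "Japanese", "industrial", "managers"),
        ("ADVP-LOC", "here")),
    ("ADVP-TMP", "always"),
    ("VP", "buck", ("PRT", "up"), ("NP", "nervous", "newcomers")),
)

extracted = list(rules(tree))
for lhs, rhs in extracted:
    print(lhs, "→", " ".join(rhs))
```

Running this over every tree and collecting the distinct rules yields the treebank grammar; nodes with words among their children, such as the VP here, are skipped under the interpretation stated above.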

Given a treebank, we can easily compute a simple kind of probabilistic grammar

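For a treebank-derived ruleset, the maximum likelihood probability estimate is simple:

$$P(A \to \beta) = \frac{\mathrm{count}(A \to \beta)}{\mathrm{count}(A)}$$

So, for example, given that in the NLTK treebank subset there are 52041 subtrees labelled S

And in 29201 of these there are exactly two children of S, labelled NP-SBJ followed by VP, we get

$$P(\mathrm{S} \to \mathrm{NP\text{-}SBJ}\ \mathrm{VP}) = \frac{29201}{52041} \approx 0.561$$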

The probability of a whole parse tree is then just the product of the probabilities of every non-terminal node in that tree
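As a rough sketch of both steps, the code below estimates rule probabilities by relative frequency and multiplies them over a tree. The two-tree `TREEBANK` is a toy invented for this example (it is not Penn Treebank data), and the function names are illustrative assumptions; lexical rules are counted like any other rule.

```python
from collections import Counter
from math import prod

def productions(tree):
    """Yield one (lhs, rhs) rule per non-terminal node of a nested-tuple tree."""
    label, *children = tree
    yield label, tuple(c[0] if isinstance(c, tuple) else c for c in children)
    for child in children:
        if isinstance(child, tuple):
            yield from productions(child)

# Toy treebank, invented for illustration only.
TREEBANK = [
    ("S", ("NP", "frogs"), ("VP", ("V", "ate"), ("NP", "fish"))),
    ("S", ("NP", "fish"), ("VP", ("V", "swim"))),
]

rule_counts = Counter(p for tree in TREEBANK for p in productions(tree))
lhs_counts = Counter()
for (lhs, _), count in rule_counts.items():
    lhs_counts[lhs] += count

def rule_prob(rule):
    """Maximum likelihood estimate: count(A → β) / count(A)."""
    return rule_counts[rule] / lhs_counts[rule[0]]

def tree_prob(tree):
    """Product of the rule probabilities at every non-terminal node."""
    return prod(rule_prob(p) for p in productions(tree))

print(rule_prob(("NP", ("fish",))))   # 2 of the 3 NP nodes expand to 'fish'
print(tree_prob(TREEBANK[0]))
```

In practice the product is usually computed as a sum of log probabilities, since multiplying many small numbers underflows quickly.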

It's not hard to modify a chart parser to use probabilities to actually guide parsing

No-one's grammatical theory/detailed grammar is going to give exactly the same results as a treebank

So how do you evaluate a parser against a treebank?

It turns out just looking at major constituent boundaries is surprisingly good

The same (D)ARPA push towards evaluation-driven funding drove the development of the PARSEVAL metric

PARSEVAL just compares bracketings, without regard to labels

Each of these is effectively penalising an attachment mistake

(a child with a telescope)

will be penalised wrt

(a child (with a telescope))

((hot tea) and coffee)

will be penalised wrt

(hot (tea and coffee))
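The bracket comparison can be sketched as follows. This is a simplified illustration of unlabelled bracket precision and recall, not the full PARSEVAL procedure: trees are assumed to be nested tuples of words with no labels, and the names `brackets` and `parseval` are invented for this sketch.

```python
def brackets(tree, start=0):
    """Return (end, spans), where spans is the set of (start, end) word spans
    covered by each bracketed constituent in the tree."""
    if isinstance(tree, str):               # a single word: no bracket of its own
        return start + 1, set()
    pos, spans = start, set()
    for child in tree:
        pos, child_spans = brackets(child, pos)
        spans |= child_spans
    spans.add((start, pos))
    return pos, spans

def parseval(candidate, gold):
    """Unlabelled bracket precision and recall of candidate against gold."""
    _, cand = brackets(candidate)
    _, ref = brackets(gold)
    matched = len(cand & ref)
    return matched / len(cand), matched / len(ref)

gold = ("hot", ("tea", "and", "coffee"))
candidate = (("hot", "tea"), "and", "coffee")
print(parseval(candidate, gold))            # (0.5, 0.5)

flat = ("a", "child", "with", "a", "telescope")
nested = ("a", "child", ("with", "a", "telescope"))
print(parseval(flat, nested))               # (1.0, 0.5)
```

In the first pair the candidate gets the outer bracket right but its inner bracket (hot tea) has no counterpart in the gold tree, so both precision and recall drop to one half. In the second, the flat analysis proposes nothing wrong but misses the gold bracket around the PP, so only recall suffers.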

The bad news: just using the treebank subset that ships with NLTK, there are 1798 different expansions for S

As always, there's a tradeoff between specificity and statistical significance

For getting good PARSEVAL scores