The first three weeks have introduced the concept of a language model

Along with a number of other key technologies and methodologies:

These all find a place in systems we can understand in terms of the noisy channel model

Where we're headed next moves away, at least temporarily, from that narrative

The present state of NLP can be understood best by reference to the history of the computational encounter with natural language

First closely parallel to, latterly increasingly separated from, the history of linguistic theory since 1960

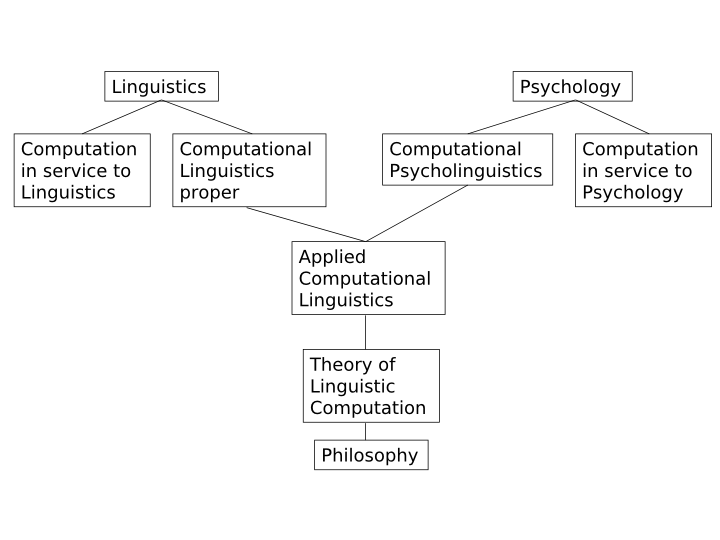

Situated in relation to the complex interactions between linguistics, psychology and computer science:

Originally all the computational strands except the 'in service to' ones were completely invested in the Chomskian rationalist perspective

Interdisciplinary work runs the risk of mistaking its hyphen for a license to make up the rules

But this just results in a loss of credibility

So actually much more so than unhyphenated practitioners

Computational linguistics initially drew heavily on 20th-century linguistics

So drew extensively on algebra, logic and set theory:

Then added parsing and 'reasoning' algorithms to grammars and logical models

Starting in the late 1970s, in the research community centred around the (D)ARPA-funded Speech Understanding Research effort, with its emphasis on evaluation and measurable progress, things began to change

(D)ARPA funding significantly expanded the amount of digitised and transcribed speech data available to the research community

Instead of systems whose architecture and vocabulary were based on linguistic theory (in this case acoustic phonetics), new approaches based on statistical modelling and Bayesian probability emerged and quickly spread

Every time I fire a linguist my system's performance improves

Fred Jelinek, head of speech recognition at IBM, c. 1980 (allegedly)

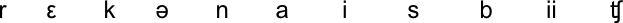

The speech signal under-determines what we 'hear'

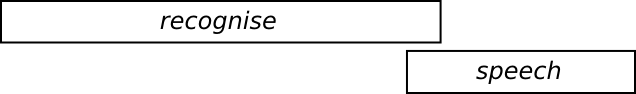

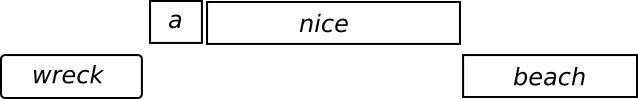

You heard:

But I said:

And there are more possibilities:

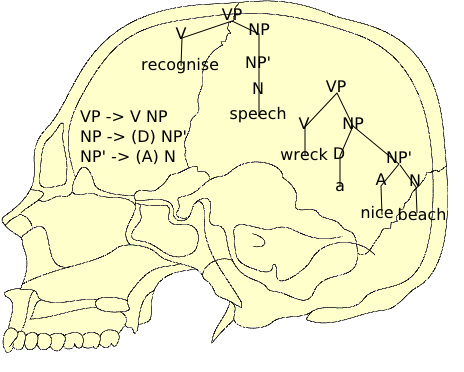

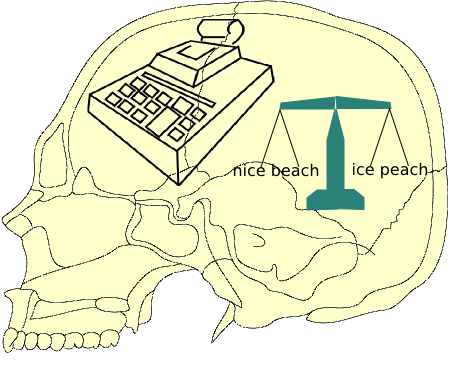

So how do we select the right path through the word lattice?

Is it on the basis of a small number of powerful things, like grammar rules and mappings from syntax trees to semantics?

Or a large number of very simple things, like word and bigram frequencies?

In practice, the probability-based approach performs much better than the rule-based approach
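The "large number of very simple things" option can be sketched in a few lines. This is only an illustration under stated assumptions: the toy corpus, the two candidate paths and the function name `log_prob` are all hypothetical, acoustic scores are ignored, and add-one smoothing is chosen for simplicity rather than because any real recogniser uses it.

```python
import math
from collections import Counter

# Hypothetical toy corpus standing in for real training text.
corpus = [
    "it is hard to recognise speech",
    "we recognise speech with a model",
    "it is a nice beach",
    "speech is hard",
]

unigrams = Counter()
bigrams = Counter()
for sentence in corpus:
    words = ["<s>"] + sentence.split()
    unigrams.update(words)
    bigrams.update(zip(words, words[1:]))

vocab_size = len(unigrams)


def log_prob(path):
    """Add-one smoothed bigram log-probability of a word sequence."""
    words = ["<s>"] + path
    total = 0.0
    for prev, word in zip(words, words[1:]):
        numerator = bigrams[(prev, word)] + 1
        denominator = unigrams[prev] + vocab_size
        total += math.log(numerator / denominator)
    return total


# Two hypothetical paths through a lattice for the same stretch of audio.
paths = [
    ["recognise", "speech"],
    ["wreck", "a", "nice", "beach"],
]
best = max(paths, key=log_prob)
print(best)
```

Nothing here knows any grammar: the choice falls out of counts alone. A real system would combine such language-model scores with acoustic likelihoods for each path.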

The publication of 6 years of digital originals of the Wall Street Journal in 1991 provided the basis for moving the Bayesian approach up the speech chain to morphology and syntax

Many other corpora have followed, not just for American English

And the Web itself now provides another huge jump in the scale of resources available

To the point where even semantics is at least to some extent on the probabilistic empiricist agenda

Whereas in the 1970s and 1980s there was real energy and optimism at the interface between computational and theoretical linguistics

While still using some of the terminology of linguistic theory

Within cognitive psychology, significant energy began going into erecting a theoretical stance consistent with at least some of the new empiricist perspective

But the criticism voiced 35 years ago by Herb Clark, who described cognitive psychology as "a methodology in search of a theory", remains pretty accurate

And within computer science in general, and Artificial Intelligence in particular, the interest in "probably nearly correct" solutions, as opposed to constructively true ones, is dominant

Even philosophy of mind has begun to explore a Bayesian perspective

Some aspects of the rationalist programme have been making a bit of a comeback

One reason for this is very concrete:

So noisy channel architecture systems will struggle to handle most of the world's languages

There's another area where data scarcity is relevant too

Parse trees, that is

They're a means to at least three distinct ends:

Two related perspectives:

Building on, and extending, formal language theory, Chomsky (re)defined the scientific study of language

Warning! His rationalist rhetoric ("what do people know about their language") and his technical vocabulary ("generative grammar") encourage a misunderstanding

But his actual goal is to characterise what people know about (their) language

Generative grammar is 'generative' because it defines a language as the set of strings generated by a specified formal procedure

Chomsky identified four classes of (formal) languages

Each mechanism and grammar type is capable of generating any language of its type, or a higher type

The current pretty broad consensus is that natural languages are somewhere between type 2 and type 1

The grammar types in the Chomsky hierarchy can all be expressed as constraints on a formalisation of the idea of rewriting systems

A rewriting system consists of

Regular and Context-free grammars (CFGs) restrict the left-hand side of rules to a single non-terminal symbol

Regular grammars further restrict the right-hand side to be a pair of a non-terminal and a terminal, or a single terminal

For example, the following (right-linear) grammar defines the language a*b+:

S → aS

S → B

B → bB

B → b

This should look familiar from lecture 2:
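The link between a right-linear grammar and a finite-state machine can be made concrete by treating each non-terminal as a state. The following is a minimal sketch, not a definitive implementation: the rule encoding, the `FINAL` marker and the function name `accepts` are assumptions made for illustration.

```python
# Each rule is (left-hand side, terminal or "", next non-terminal or None).
RULES = [
    ("S", "a", "S"),   # S → aS
    ("S", "", "B"),    # S → B   (consumes no input)
    ("B", "b", "B"),   # B → bB
    ("B", "b", None),  # B → b   (derivation ends here)
]

FINAL = "<final>"  # hypothetical marker for "no non-terminal left to rewrite"


def closure(states):
    """Add every state reachable through rules that consume no input."""
    states = set(states)
    changed = True
    while changed:
        changed = False
        for lhs, terminal, nxt in RULES:
            target = nxt if nxt is not None else FINAL
            if lhs in states and terminal == "" and target not in states:
                states.add(target)
                changed = True
    return states


def accepts(string, start="S"):
    """Simulate the grammar as a non-deterministic finite-state machine."""
    states = closure({start})
    for symbol in string:
        reached = set()
        for lhs, terminal, nxt in RULES:
            if lhs in states and terminal == symbol:
                reached.add(nxt if nxt is not None else FINAL)
        states = closure(reached)
    return FINAL in states


print(accepts("aab"), accepts("a"))
```

Tracking a set of states rather than a single one handles the non-determinism between B → bB and B → b without backtracking.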

And the following context-free grammar defines aⁿbⁿ:

S → a S b

S → ε

Note the common convention that non-terminals start with upper-case letters, terminals with lower-case
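Both directions of use for this grammar can be sketched briefly, assuming the second rule has an empty right-hand side. The function names `derive` and `in_language` are hypothetical; `derive` applies S → a S b a chosen number of times and then the empty rule, while `in_language` undoes the rules from the outside in.

```python
def derive(n):
    """Return the sentential forms of a derivation that uses S → a S b n times."""
    form = "S"
    steps = [form]
    for _ in range(n):
        form = form.replace("S", "aSb")  # apply S → a S b
        steps.append(form)
    form = form.replace("S", "")         # apply the rule with the empty right-hand side
    steps.append(form)
    return steps


def in_language(s):
    """Recognise strings of the grammar by peeling off matching a ... b pairs."""
    if s == "":
        return True                      # matches the empty right-hand side
    if len(s) >= 2 and s[0] == "a" and s[-1] == "b":
        return in_language(s[1:-1])      # matches S → a S b
    return False


print(derive(2))
print(in_language("aabb"), in_language("aab"))
```

The recogniser needs the unbounded nesting of the recursion to match each a with its b, which is exactly what a finite set of states cannot track.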

What is the language defined by a mechanism?

For FSAs, a string is in the language defined by a finite-state automaton F iff there is a non-empty sequence s* of states of F such that

There are two (main) ways to interpret a grammar as defining a language

A string is in the language defined by a context-free grammar G iff

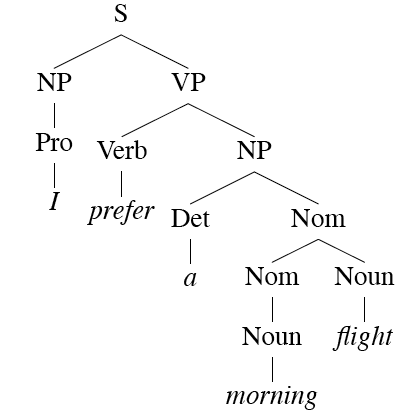

Under either interpretation, we can use a parse tree (strictly speaking an ordered tree) to illustrate the way in which a string belongs to the language defined by a CFG

For example, using the grammar and lexicon for the ATIS domain given in section 10.2 of Jurafsky & Martin (3rd edition)

I prefer a morning flight

The parse tree that 'proves' that this is in the language is

As with FSAs and FSTs (see Lectures 2,3), you can view rewrite rules as either analysis or synthesis machines

Compilers and interpreters for programming languages use parse trees to implement a compositional semantics

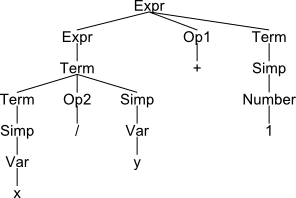

Consider a simple grammar for Python arithmetic expressions:

Expr → Expr Op1 Term | Term

Term → Term Op2 Simp | Simp

Simp → Var | Number | '(' Expr ')'

Op1 → '+' | '-'

Op2 → '*' | '/'

Assuming some more work to tokenise the input, this will give us an analysis for the string "x/y+1"

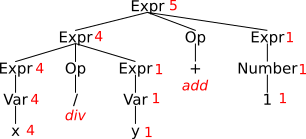
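A parser for this grammar can be sketched as a small recursive-descent program. Several things here are assumptions rather than part of the grammar above: the tokeniser regular expression, the nested-tuple tree encoding, and the decision to unroll the left-recursive rules into loops and to omit the unary Expr → Term and Term → Simp steps from the tree.

```python
import re

# Hypothetical tokeniser: numbers, identifiers, operators and parentheses.
TOKEN = re.compile(r"\s*(\d+(?:\.\d+)?|[A-Za-z_]\w*|[-+*/()])")


def tokenise(text):
    tokens, pos = [], 0
    text = text.rstrip()
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


def parse(text):
    tokens = tokenise(text)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        return token

    def expr():
        # Expr → Expr Op1 Term | Term, with the left recursion turned into a loop
        tree = term()
        while peek() in ("+", "-"):
            op = advance()
            tree = ("Expr", tree, op, term())
        return tree

    def term():
        # Term → Term Op2 Simp | Simp, handled the same way
        tree = simp()
        while peek() in ("*", "/"):
            op = advance()
            tree = ("Term", tree, op, simp())
        return tree

    def simp():
        # Simp → Var | Number | '(' Expr ')'
        token = peek()
        if token is None:
            raise SyntaxError("unexpected end of input")
        if token == "(":
            advance()
            tree = expr()
            if peek() != ")":
                raise SyntaxError("expected ')'")
            advance()
            return tree
        if token in ("+", "-", "*", "/", ")"):
            raise SyntaxError(f"unexpected token {token!r}")
        return ("Simp", advance())

    tree = expr()
    if peek() is not None:
        raise SyntaxError(f"unexpected token {peek()!r}")
    return tree


print(parse("x/y+1"))
```

Because Term sits below Expr in the grammar, the division is grouped before the addition without any separate precedence table.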

We can compute a value for this expression using the tree

Suppose x is 4 and y is 1

We call that kind of semantic computation a compositional one

"The meaning of the whole [constituent] is a function of the meaning of the parts [children]"

Logic-based approaches to natural language semantics often adopt this approach as well
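Returning to the arithmetic example, the compositional computation can be sketched as a recursive walk over the tree for "x/y+1". The nested-tuple encoding of the tree, the `OPS` table and the function name `evaluate` are assumptions made for illustration; the variable values are the ones used above.

```python
import operator

# Meaning of each operator symbol: a function from child values to a value.
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def evaluate(tree, env):
    """The value of a node is a function of the values of its children."""
    label = tree[0]
    if label == "Simp":
        token = tree[1]
        # A leaf is either a variable looked up in env or a number literal.
        return env[token] if token in env else float(token)
    _, left, op, right = tree
    return OPS[op](evaluate(left, env), evaluate(right, env))


# Hand-built tree for "x/y+1" (hypothetical encoding).
tree = ("Expr", ("Term", ("Simp", "x"), "/", ("Simp", "y")), "+", ("Simp", "1"))
value = evaluate(tree, {"x": 4, "y": 1})
print(value)
```

No node needs to know anything about the rest of the tree: each one only combines the values handed up by its children.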

In a number of different areas, attempts are being made to generalise

By abstracting linguistic structures (trees and/or dependency graphs) to a sufficient extent

We won't get to this in this course.